Many quantum researchers are working toward technologies that would enable a global quantum internet, in which any two users on Earth could conduct large-scale quantum computing and communicate securely with the help of quantum entanglement. Although this still requires many more technological advances, a team of researchers at Shanghai Jiao Tong University in China has managed to merge two independent networks, bringing the world a bit closer to realizing a quantum internet.

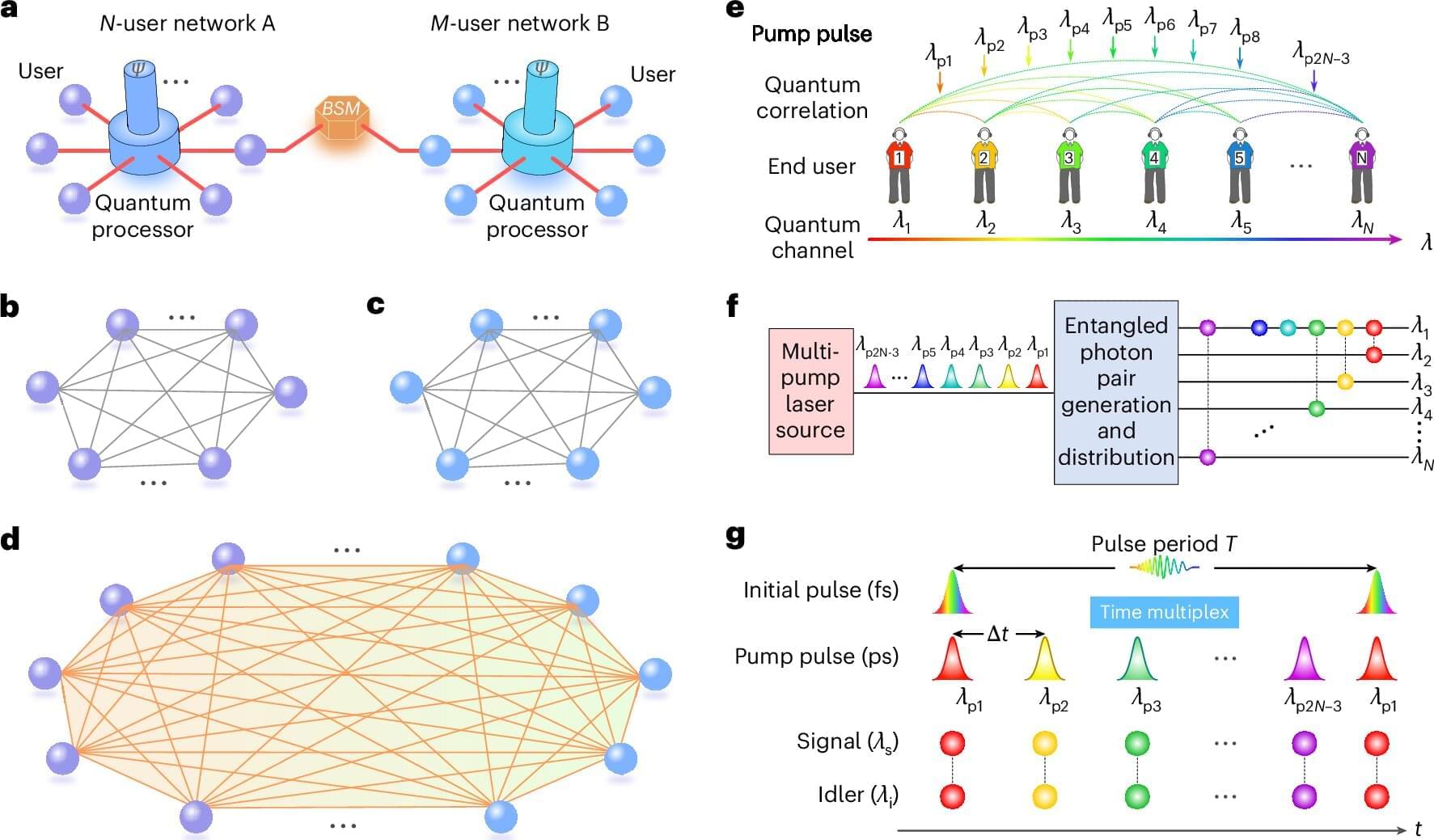

A true global quantum internet will require interconnectivity between many networks, which has proven to be a much more difficult task for quantum networks than for classical ones. While researchers have demonstrated the ability to connect quantum computers within the same network, fusing separate multi-user networks remains a major challenge. Fully connected networks using dense wavelength division multiplexing (DWDM) have been achieved, but they suffer from scalability and complexity issues.

However, the research team involved in the new study, published in Nature Photonics, has merged two independent networks with 18 different users. With this method, all 18 users can communicate securely using entanglement-based protocols. This represents the most complex multi-user quantum network to date.