A hand exoskeleton robot enhances the already well-honed skills of pianists, enabling them to overcome limits of motor expertise.

Creating mini mitochondria factories helped recharge damaged cells in a dish, providing proof-of-concept work that could pave the way to new regenerative medicine therapies.

Wang and Wu et al. identify soluble interleukin-6 receptor (sIL-6R) as a key exerkine determining the efficacy of exercise in diabetes prevention, which is modulated by microbiome-dependent leucine through a gut-adipose tissue axis. Pharmacological or dietary interventions targeting adipocyte-secreted sIL-6R may help improve metabolic outcomes in exercise non-responders.

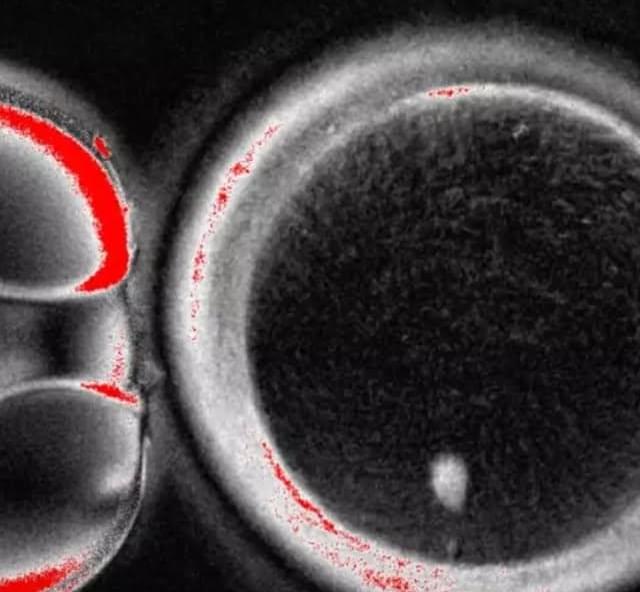

In a first for reproductive science, researchers transferred skin cell nuclei into donated eggs and stimulated them to behave like natural oocytes, some of which developed into early embryos in the lab. None progressed beyond a few days and major safety hurdles remain, but the study suggests a path that could one day help women with infertility and expand options in reproductive medicine.

#science #medicine #genetics #fertility #research

Researchers have created a protein that can detect the faint chemical signals neurons receive from other brain cells. By tracking glutamate in real time, scientists can finally see how neurons process incoming information before sending signals onward. This reveals a missing layer of brain communication that has been invisible until now. The discovery could reshape how scientists study learning, memory, and brain disease.