A company spokesperson for the oil drilling and fracking giant declined to name the executive overseeing cybersecurity, if any.

The reef of Tela Bay should be dead if anything we know about coral reefs is true. The harms it faces are manifold, from warm waters to boat traffic to agricultural runoff and murky water.

Not only can the Tela reef survive these hazards, it thrives—as no other reef in the Caribbean thrives. On any given day, live coral cover in Tela is around 65%, almost four times more than the average for the Caribbean (18%).

Scientists are now working hard and fast to solve the mystery of why the Tela reef seems partially invincible, and whether its secret sauce can be applied to other reefs in peril in the Gulf of Mexico.

Get a 20% discount on The Economist’s annual digital subscriptions at https://www.economist.com/TOE

Professor Ivette Fuentes is a leading theoretical physicis…

Can a process as complex as science be automated?

Scientific discovery is one of the most sophisticated human activities. First, scientists must understand the existing knowledge and identify a significant gap.

Next, they must formulate a research question and design and conduct an experiment in pursuit of an answer.

Then, they must analyse and interpret the results of the experiment, which may raise yet another research question.

Two years on, most of those productivity gains haven’t materialized. And we’ve seen something peculiar and slightly unexpected happen: People have started forming relationships with AI systems. We talk to them, say please and thank you, and have started to invite AIs into our lives as friends, lovers, mentors, therapists, and teachers.

We’re seeing a giant, real-world experiment unfold, and it’s still uncertain what impact these AI companions will have either on us individually or on society as a whole, argue Robert Mahari, a joint JD-PhD candidate at the MIT Media Lab and Harvard Law School, and Pat Pataranutaporn, a researcher at the MIT Media Lab. They say we need to prepare for “addictive intelligence,” or AI companions that have dark patterns built into them to get us hooked. They look at how smart regulation can help us prevent some of the risks associated with AI chatbots that get deep inside our heads.

The idea that we’ll form bonds with AI companions is no longer just hypothetical. Chatbots with even more emotive voices, such as OpenAI’s GPT-4o, are likely to reel us in even deeper. During safety testing, OpenAI observed that users would use language that indicated they had formed connections with AI models, such as “This is our last day together.” The company itself admits that emotional reliance is one risk that might be heightened by its new voice-enabled chatbot.

This two-day event invites leading researchers, entrepreneurs, and funders to drive progress. Explore new opportunities, form lasting collaborations, and joi…

Join Randal Koene, a computational neuroscientist, as he dives into the intricate world of whole brain emulation and mind uploading, while touching on the ethical pillars of AI. In this episode, Koene discusses the importance of equal access to AI, data ownership, and the ethical impact of AI development. He explains the potential future of AGI, how current social and political systems might influence it, and touches on the scientific and philosophical aspects of creating a substrate-independent mind. Koene also elaborates on the differences between human cognition and artificial neural networks, the challenge of translating brain structure to function, and efforts to accelerate neuroscience research through structured challenges.

00:00 Introduction to Randal Koene and Whole Brain Emulation.

00:39 Ethical Considerations in AI Development.

02:20 Challenges of Equal Access and Data Ownership.

03:40 Impact of AGI on Society and Development.

05:58 Understanding Mind Uploading.

06:39 Randal’s Journey into Computational Neuroscience.

08:14 Scientific and Philosophical Aspects of Substrate Independent Minds.

13:07 Brain Function and Memory Processes.

25:34 Whole Brain Emulation: Current Techniques and Challenges.

32:12 The Future of Neuroscience and AI Collaboration.

SingularityNET is a decentralized marketplace for artificial intelligence. We aim to create the world’s global brain with a full-stack AI solution powered by a decentralized protocol.

We gathered the leading minds in machine learning and blockchain to democratize access to AI technology. Now anyone can take advantage of a global network of AI algorithms, services, and agents.

Website: https://singularitynet.io

Discord: / discord

Forum: https://community.singularitynet.io

Telegram: https://t.me/singularitynet

Twitter: / singularitynet

Facebook: / singularitynet.io

Instagram: / singularitynet.io

Github: https://github.com/singnet

Linkedin: / singularitynet

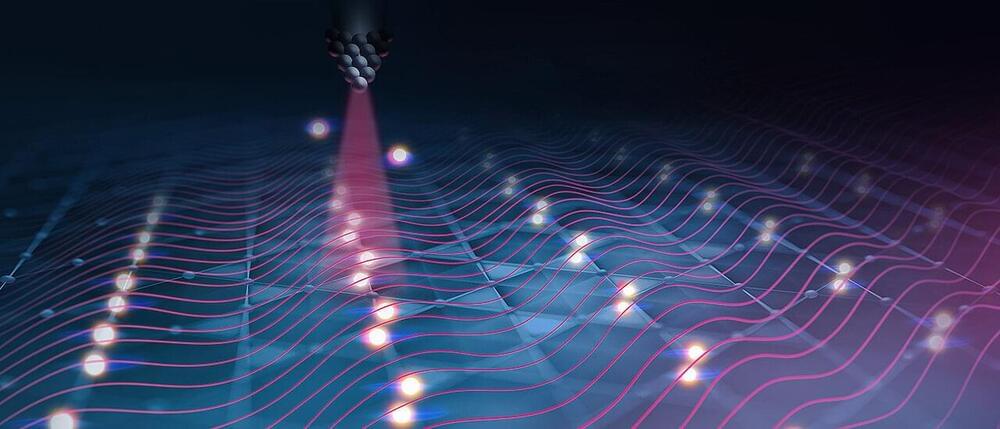

Have you ever wondered what it would be like to upload your mind to a computer? To have a digital copy of your personality, memories, and skills that could live on after your biological death? This is the idea behind whole brain emulation, a hypothetical process of scanning a brain and creating a software version of it that can run on any compatible hardware. In this video, we will explore the science and challenges of whole brain emulation, the ethical and social implications of creating digital minds, and the potential benefits and risks of this technology for humanity. Join us as we dive into the fascinating world of whole-brain emulation!

#wholebrainemulation

#minduploading

#digitalimmortality

#artificialintelligence

#neuroscience

#braincomputerinterface

#substrateindependentminds

#transhumanism

#futurism

#mindcloning