It’s a van. It seats up to 20 people, it’s autonomous, it can be configured for goods and yes it will look like this IRL. … #tesla #cybervan #autonomus #future #technology #ev

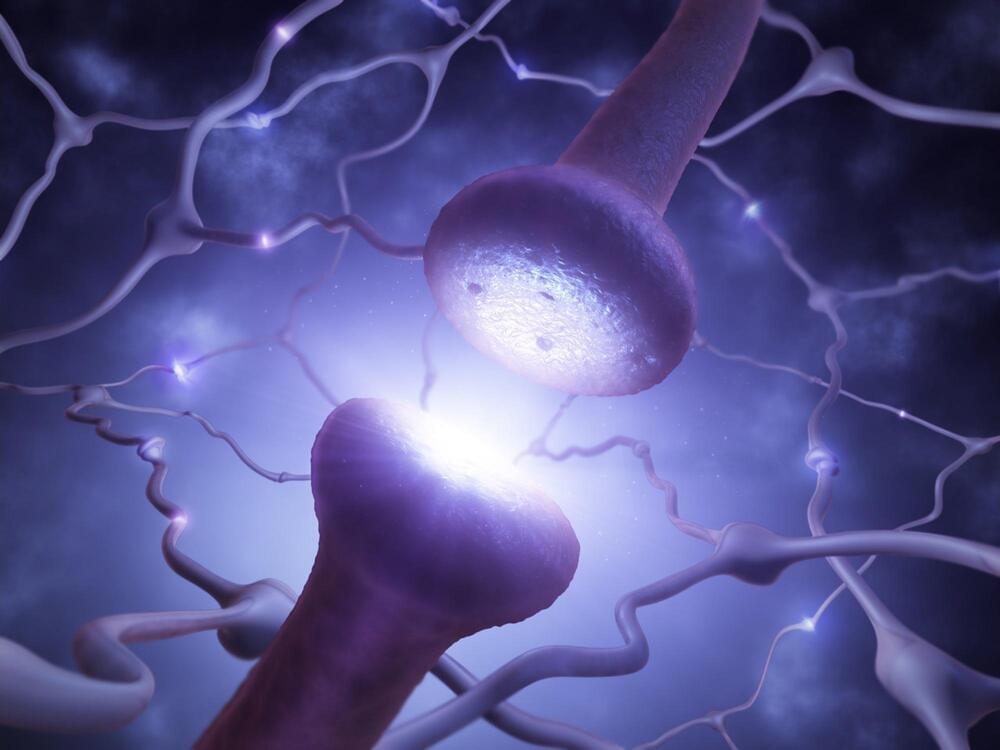

Scientists discovered that the molecule CaMKII helps neurons encode information over seconds, a key process in learning. This challenges previous beliefs about how CaMKII influences synapse-specific plasticity.

A recent study from the Max Planck Florida Institute for Neuroscience, published in Nature, has uncovered a crucial step in how neurons encode information on timescales aligned with the process of learning.

A timing mismatch.

MILAN — Vast Space unveiled the design of the space station it plans to propose to NASA in the next phase of the agency’s program to develop commercial successors to the International Space Station.

The company outlined its plans for the Haven-2 station in a release timed to the opening of the International Astronautical Congress here Oct. 14, describing how it will deploy the station in segments starting in the late 2020s.

Vast has to date focused on Haven-1, the single-module station it plans to launch in the second half of 2025 to be visited by up to four missions for short stays. However, the company has made clear its intent is to compete for the second phase of NASA’s Commercial Low Earth Orbit Destinations, or CLD, program as part of the agency’s ISS transition efforts.

“A Vision for a 100% Hydrogen-Fueled Future: In their research and development testing facility, located at the headquarter office outside of Bergen, Norway, Bergen Engines is diligently working toward the development of a 100% hydrogen-fueled engine by the end of this year, and are on track to reach their goal.”

Bergen Engines' full range of natural gas engines can now run on 25% hydrogen in full operation without modification.

Engineers developed a new kind of reconfigurable masonry made from 3D-printed, recycled glass. The interlocking bricks can withstand pressures similar to those borne by their concrete counterparts, and they could be reused many times over in building facades and internal walls.

What if construction materials could be put together and taken apart as easily as LEGO bricks? Such reconfigurable masonry would be disassembled at the end of a building’s lifetime and reassembled into a new structure, in a sustainable cycle that could supply generations of buildings using the same physical building blocks.

That’s the idea behind circular construction, which aims to reuse and repurpose a building’s materials whenever possible, to minimize the manufacturing of new materials and reduce the construction industry’s “embodied carbon,” which refers to the greenhouse gas emissions associated with every process throughout a building’s construction, from manufacturing to demolition.

Fever temperatures rev up immune cell metabolism, proliferation and activity, but they also — in a particular subset of T cells — cause mitochondrial stress, DNA damage and cell death, Vanderbilt University Medical Center researchers have discovered.

The findings, published Sept. 20 in the journal Science Immunology, offer a mechanistic understanding of how cells respond to heat and could explain how chronic inflammation contributes to the development of cancer.

The impact of fever temperatures on cells is a relatively understudied area, said Jeff Rathmell, PhD, Cornelius Vanderbilt Professor of Immunobiology and corresponding author of the new study. Most of the existing temperature-related research relates to agriculture and how extreme temperatures impact crops and livestock, he noted. It’s challenging to change the temperature of animal models without causing stress, and cells in the laboratory are generally cultured in incubators that are set at human body temperature: 37 degrees Celsius (98.6 degrees Fahrenheit).

New research done at NASA’s Jet Propulsion Laboratory reveals potential signs of a rocky, volcanic moon orbiting an exoplanet 635 light-years from Earth. The biggest clue is a sodium cloud that the findings suggest is close to but slightly out of sync with the exoplanet, a Saturn-size gas giant named WASP-49 b, although additional research is needed to confirm the cloud’s behavior. Within our solar system, gas emissions from Jupiter’s volcanic moon Io create a similar phenomenon.

Research on superconductivity has taken a significant leap with Princeton University's exploration of edge supercurrents in topological superconductors like molybdenum telluride.

Initially elusive, these supercurrents have been observed and enhanced through experiments with niobium, leading to intriguing phenomena such as stochastic switching and anti-hysteresis, altering the understanding of electron behavior in superconductors.

Superconductivity and Topological Materials.