More than 100 sustainable homes, also known as “living vessels,” are built into the earth in Taos, New Mexico, and are not connected to any water or electricity grid.

According to the World Health Organization, antibiotic resistance is a top public health risk that was responsible for 1.27 million deaths across the globe in 2019. When repeatedly exposed to antibiotics, bacteria rapidly learn to adapt their genes to counteract the drugs—and share the genetic tweaks with their peers—rendering the drugs ineffective.

Superpowered bacteria also torpedo medical procedures—surgery, chemotherapy, C-sections—adding risk to life-saving therapies. With antibiotic resistance on the rise, there are very few new drugs in development. While studies in petri dishes have zeroed in on potent candidates, some of these also harm the body’s cells, leading to severe side effects.

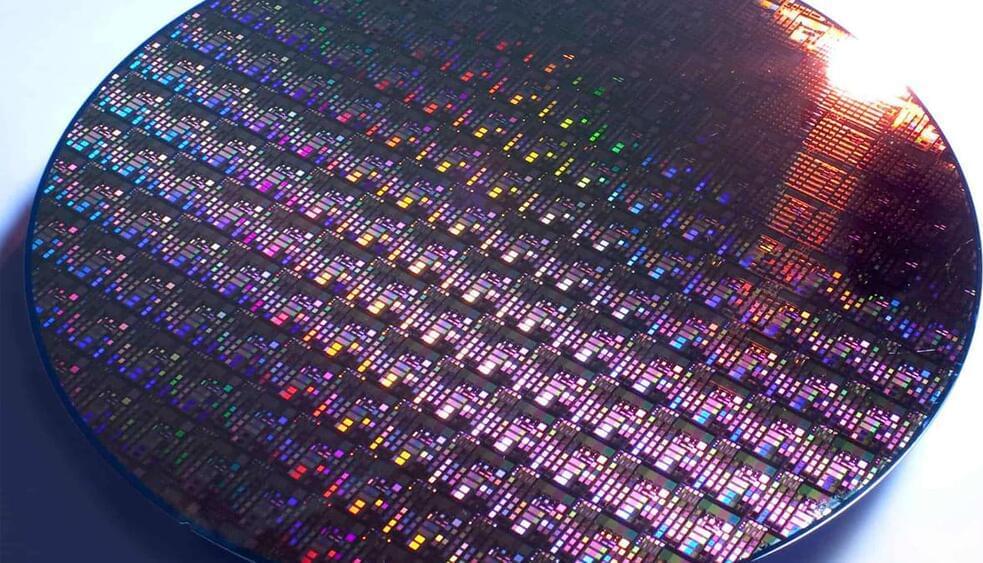

What if there were a way to retain these candidates’ bacteria-fighting ability, but with fewer side effects? This month, researchers used AI to reengineer a toxic antibiotic. They made thousands of variants and screened for the ones that maintained their bug-killing abilities without harming human cells.

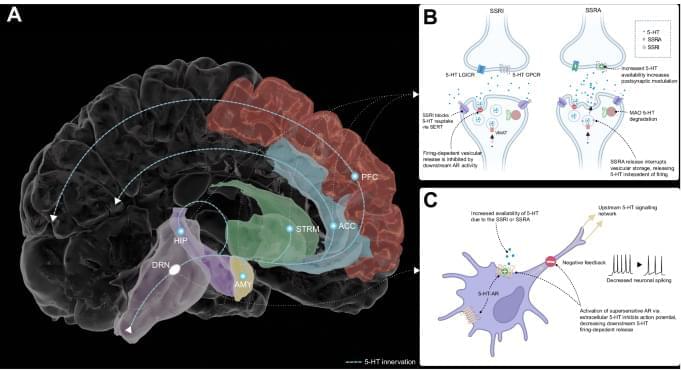

Increasing serotonin can change how people learn from negative information and improve how they respond to it, according to a new study published in the journal Nature Communications.

The study by scientists at the University of Oxford’s Department of Psychiatry and the National…

Serotonin is involved in aversive processing, but how serotonin shapes behavior remains unclear. Here, the authors show that directly enhancing synaptic serotonin in humans reduces outcome sensitivity and increases behavioral inhibition in aversive contexts.

Terry Tao is one of the world’s leading mathematicians and winner of many awards including the Fields Medal. He is Professor of Mathematics at the University of California, Los Angeles (UCLA). Following his talk, Terry is in conversation with fellow mathematician Po-Shen Loh.

The Oxford Mathematics Public Lectures are generously supported by XTX Markets.

Many believe humanity’s climb upward may have been assisted by outsiders. Is this possible, and if so, what does that tell us about our own past… and future?

Watch my exclusive video Jupiter Brains & Mega Minds: https://nebula.tv/videos/isaacarthur–…

Get Nebula using my link for 40% off an annual subscription: https://go.nebula.tv/isaacarthur.

Get a Lifetime Membership to Nebula for only $300: https://go.nebula.tv/lifetime?ref=isa…

Use the link gift.nebula.tv/isaacarthur to give a year of Nebula to a friend for just $30.

Visit our Website: http://www.isaacarthur.net.

Credits:

Were Primitive Humans Uplifted?

Episode 459a; August 11, 2024

Produced, Narrated & Written: Isaac Arthur.

Editor: Evan Schultheis.

Graphics: Jeremy Jozwik & Ken York YD Visual.

Select imagery/video supplied by Getty Images.

Music Courtesy of Epidemic Sound http://epidemicsound.com/creator.

Sergey Cheremisinov, \

Main episode with Tom Campbell (Dec 2020): https://youtu.be/kko-hVA-8IU?list=PLZ7ikzmc6zlN6E8KrxcYCWQIHg2tfkqvR

Listen on Spotify: https://open.spotify.com/show/4gL14b92xAErofYQA7bU4e

Nuclear power already has an energy density advantage over other sources of thermal electricity generation. But what if nuclear generation didn’t require a steam turbine? What if the radiation from a reactor was less a problem to be managed and more a source of energy? And what if an energy conversion technology could scale to fit nuclear power systems ranging from miniature batteries to the grid? The Defense Advanced Research Projects Agency (DARPA) Defense Sciences Office (DSO) is asking these types of questions in a request for information on High Power Direct Energy Conversion from Nuclear Power Systems, released August 1.

which is widely believed to be the root cause of many age-related diseases.

The study, published today in the journal npj Aging, raises the exciting possibility of a protective agent that could dampen age-related inflammation and restore normal immune function in older adults.

PEPITEM (Peptide Inhibitor of Trans-Endothelial Migration) was initially identified at the University of Birmingham in 2015. While the role of the PEPITEM pathway has already been demonstrated in immune-mediated diseases, these are the first data showing that PEPITEM has the potential to increase healthspan in an aging population.