The all-in-one optical fiber spectrometer offers a compact microscale design with performance on par with traditional laboratory-based systems.

Miniaturized spectroscopy systems capable of detecting trace concentrations at parts-per-billion (ppb) levels are critical for applications such as environmental monitoring, industrial process control, and biomedical diagnostics.

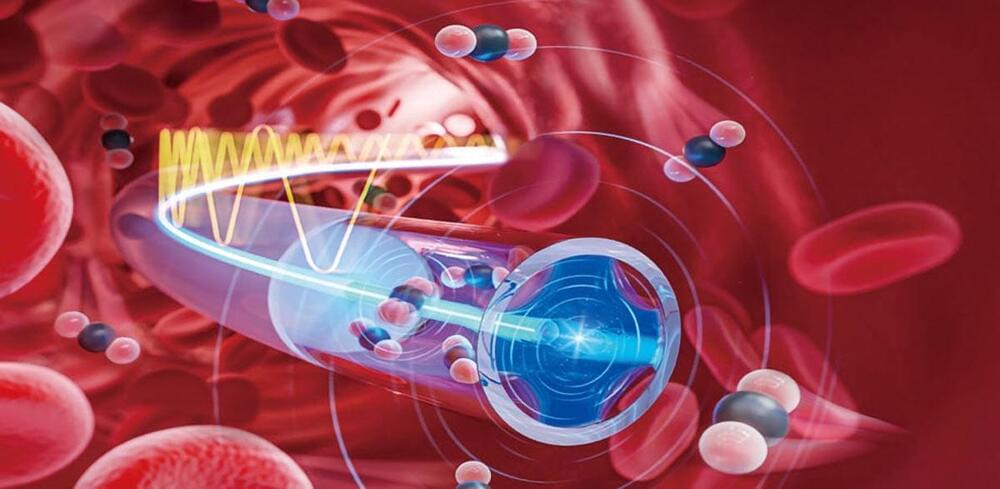

However, conventional bench-top spectroscopy systems are often too large and complex to be practical in confined spaces. Traditional laser spectroscopy techniques rely on bulky components, including light sources, mirrors, detectors, and gas cells, to measure light absorption or scattering. This makes them unsuitable for minimally invasive applications, such as intravascular diagnostics, where compactness and precision are essential.