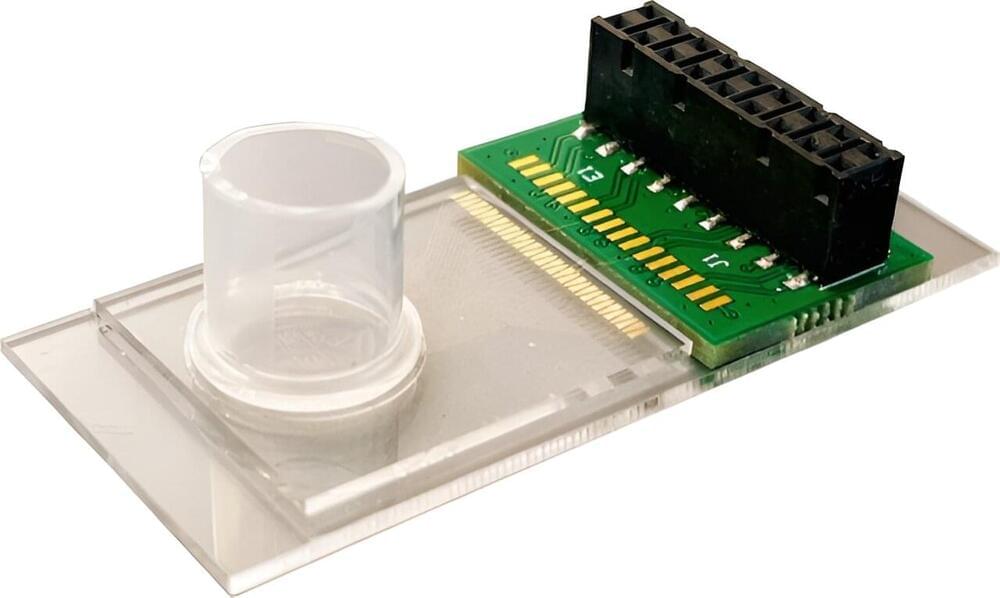

UMass Amherst researchers have developed a new DNA detection method that improves sensitivity roughly a hundredfold, advancing a core capability in biomedical engineering.

“DNA detection is in the center of bioengineering,” says Jinglei Ping, lead author of the paper that appeared in Proceedings of the National Academy of Sciences.

Ping is an assistant professor of mechanical and industrial engineering and an adjunct assistant professor in biomedical engineering, and he is affiliated with the Center for Personalized Health Monitoring of the Institute for Applied Life Sciences. “Everyone wants to detect the DNA at a low concentration with a high sensitivity. And we just developed this method to improve the sensitivity by about 100 times with no cost.”