I have my own introduction to quantum mechanics course that you can check out on Brilliant! The first 30 days are free, and you get 20% off the annual premium subscription when you use our link ➜ https://brilliant.org/sabine.

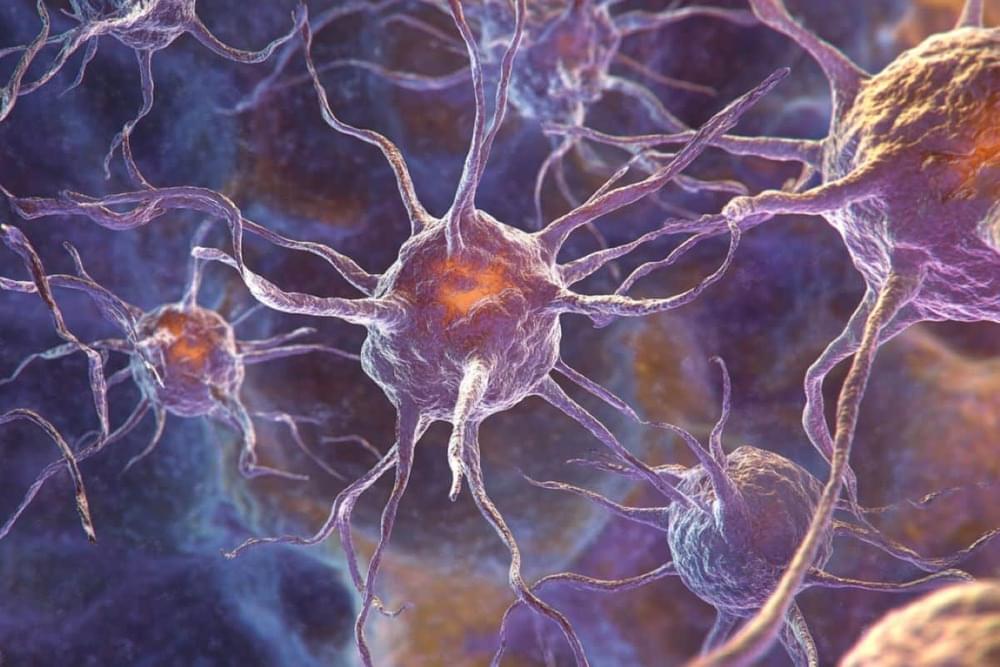

Physicists are obsessed with black holes, but we still don’t know what’s going on inside them. One idea is that black holes do not truly exist and are instead big quantum objects that have been called fuzzballs or frozen stars. This idea has a big problem. Let’s take a look.

This video comes with a quiz, which you can take here: https://quizwithit.com/start_thequiz/.…

Paper: https://journals.aps.org/prd/abstract…

🤓 Check out my new quiz app ➜ http://quizwithit.com/

💌 Support me on Donorbox ➜ https://donorbox.org/swtg.

📝 Transcripts and written news on Substack ➜ https://sciencewtg.substack.com/

👉 Transcript with links to references on Patreon ➜ / sabine.

📩 Free weekly science newsletter ➜ https://sabinehossenfelder.com/newsle…

👂 Audio only podcast ➜ https://open.spotify.com/show/0MkNfXl…

🔗 Join this channel to get access to perks ➜ / @sabinehossenfelder.

🖼️ On Instagram ➜ / sciencewtg.

#science #sciencenews #physics #space