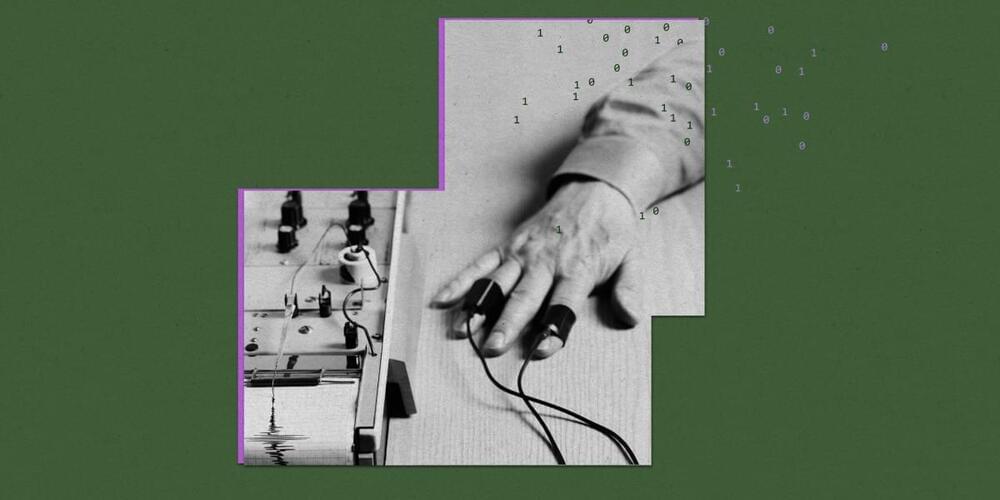

PRESS RELEASE: To perform quantum computations, quantum bits (qubits) must be cooled to temperatures in the millikelvin range (close to -273 degrees Celsius) to slow down atomic motion and minimize noise. However, the electronics used to manage these quantum circuits generate heat, which is difficult to remove at such low temperatures. Most current technologies must therefore separate quantum circuits from their electronic components, causing noise and inefficiencies that hinder the realization of larger quantum systems beyond the lab.

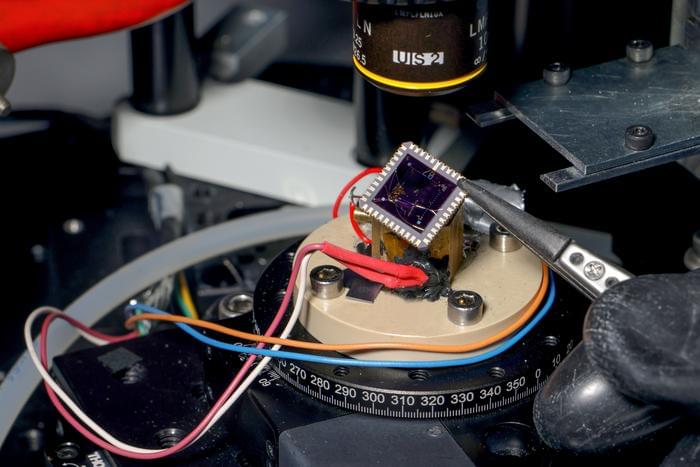

Researchers in EPFL’s Laboratory of Nanoscale Electronics and Structures (LANES) in the School of Engineering, led by Andras Kis, have now fabricated a device that not only operates at extremely low temperatures, but does so with an efficiency comparable to that of current technologies at room temperature.