Chaperone-mediated autophagy (CMA) is the lysosomal degradation of individually selected proteins, independent of vesicle fusion. CMA is a central part of the proteostasis network in vertebrate cells. However, CMA is also a negative regulator of anabolism: it degrades enzymes required for glycolysis, de novo lipogenesis, and translation at the cytoplasmic ribosome. Recently, CMA has gained attention as a possible modulator of rodent aging. Two mechanistic models have been proposed to explain the relationship between CMA and aging in mice. Both models are backed by experimental data, and they are not mutually exclusive. Model 1, the “Longevity Model,” states that lifespan-extending interventions that decrease signaling through the INS/IGF1 signaling axis also increase CMA, which degrades (and thereby reduces the abundance of) several proteins that negatively regulate vertebrate lifespan, such as MYC, NLRP3, ACLY, and ACSS2. Enhanced CMA in early and midlife is therefore hypothesized to slow the aging process. Model 2, the “Aging Model,” states that changes in lysosomal membrane dynamics with age lead to age-related losses of the essential CMA component LAMP2A, which in turn reduce CMA, contribute to age-related proteostasis collapse, and lead to overaccumulation of proteins that contribute to age-related diseases, such as Alzheimer’s disease, Parkinson’s disease, cancer, atherosclerosis, and sterile inflammation. The objective of this review is to comprehensively describe the data supporting both explanatory models, and to discuss the strengths and limitations of each.

Chaperone-mediated autophagy (CMA) is a highly selective form of lysosomal proteolysis, in which proteins bearing consensus motifs are individually selected for lysosomal degradation (Dice, 1990; Cuervo and Dice, 1996; Cuervo et al., 1997). CMA is mechanistically distinct from macroautophagy and microautophagy, which, along with CMA, are present in most mammalian cell types.

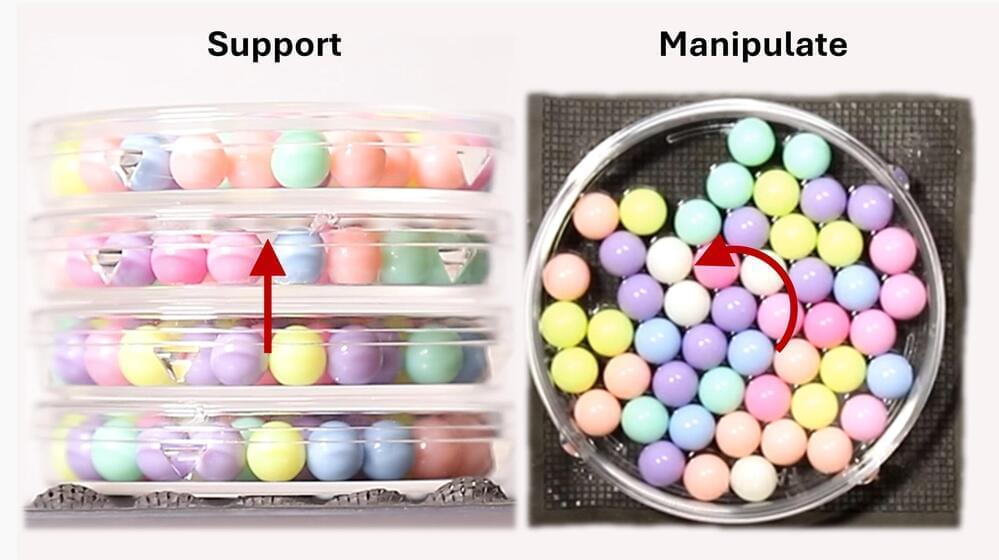

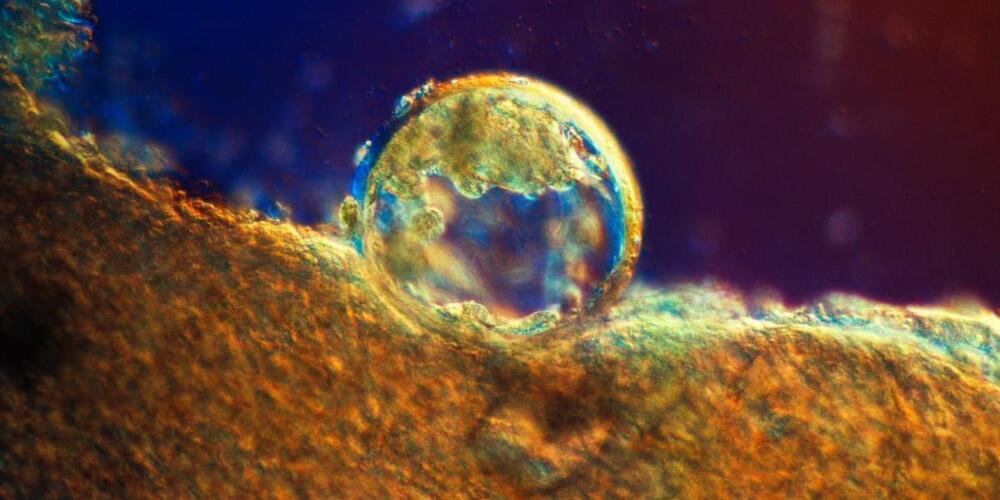

Macroautophagy (Figure 1A) begins when inclusion membranes (phagophores) engulf large swaths of cytoplasm or organelles, and then seal to form double-membrane autophagosomes. Autophagosomes then fuse with lysosomes, delivering their contents for degradation by lysosomal hydrolases (Galluzzi et al., 2017). Macroautophagy was the first branch of autophagy to be discovered, and it is easily recognized in electron micrographs, based on the morphology of phagophores, autophagosomes, and lysosomes (Galluzzi et al., 2017).