Starlab Space, the designated successor to the International Space Station, is coming to the Innovation Park in Dübendorf ZH. The private US company is one of the big players in space travel.

📝 — Kono, et al.

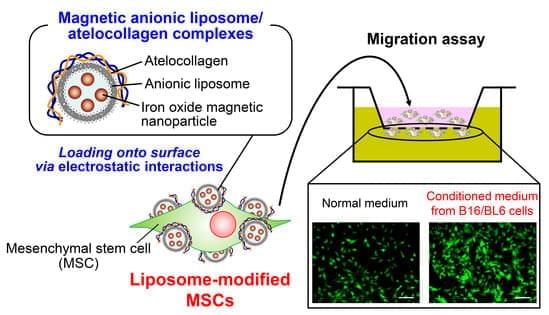

In this paper, the authors attempted to load liposomes on the surface of MSCs by using the magnetic anionic liposome/atelocollagen complexes that they previously developed, and assessed the characteristics of liposome-loaded MSCs as drug carriers.

Full text is available 👇

Mesenchymal stem cells (MSCs) have a tumor-homing capacity; therefore, MSCs are a promising drug delivery carrier for cancer therapy. To maintain the viability and activity of MSCs, anti-cancer drugs are preferably loaded on the surface of MSCs, rather than directly introduced into MSCs. In this study, we attempted to load liposomes on the surface of MSCs by using the magnetic anionic liposome/atelocollagen complexes that we previously developed, and assessed the characteristics of liposome-loaded MSCs as drug carriers. We observed that large-sized magnetic anionic liposome/atelocollagen complexes were abundantly associated with MSCs via electrostatic interactions under a magnetic field, and their cellular internalization was lower than that of the small-sized complexes.

New observations from the James Webb Space Telescope suggest that a new feature in the universe—not a flaw in telescope measurements—may be behind the decade-long mystery of why the universe is expanding faster today than it did in its infancy billions of years ago.

The new data confirms Hubble Space Telescope measurements of distances between nearby stars and galaxies, offering a crucial cross-check to address the mismatch in measurements of the universe’s mysterious expansion. Known as the Hubble tension, the discrepancy remains unexplained even by the best cosmology models.

“The discrepancy between the observed expansion rate of the universe and the predictions of the standard model suggests that our understanding of the universe may be incomplete. With two NASA flagship telescopes now confirming each other’s findings, we must take this [Hubble tension] problem very seriously—it’s a challenge but also an incredible opportunity to learn more about our universe,” said Nobel laureate and lead author Adam Riess, a Bloomberg Distinguished Professor and Thomas J. Barber Professor of Physics and Astronomy at Johns Hopkins University.

The larger challenge for hydrogen is sourcing it from green suppliers. Electrolyzers produce green hydrogen by splitting water into hydrogen and oxygen. For hydrogen to be green, it has to come either from naturally occurring sources, which are rare, or from production powered by renewable energy such as hydro, solar, and onshore or offshore wind. Building an electrolyzer infrastructure would be key to creating hydrogen-powered vehicles for long-distance travel with quick refuelling turnarounds. The trucking industry is likely the best candidate for the use of this fuel and technology.

Making ICE-Powered Vehicles More Efficient.

About 99% of global transportation today runs on internal combustion engines (ICEs), with 95% of the energy coming from liquid fuels made from petroleum. Experts at Yanmar Replacements Parts, a diesel engine aftermarket supplier, state that “while hydrogen-powered and electric vehicles will be on the rise, ICEs will continue to remain the norm and will be for the foreseeable future.” That’s why companies are reluctant to abandon ICEs and are instead trying to make the technology more compatible with lower carbon emissions. By choosing different materials during manufacturing, automotive companies believe that production emissions can be abated by 66%.

Cancer cells have evolved sophisticated strategies to evade the immune system, prolonging their survival and growth. They have also developed survival tactics to resist immune checkpoint inhibition (ICI) treatments. Yet, our understanding of how cancer cells escape the immune response or immune activities perpetuated by anti-cancer immunotherapies remains incomplete. A recent study published in Cancer Discovery has shed light on one such mechanism, revealing how tumors develop ICI resistance and enhancing our understanding of cancer immunotherapy.

The researchers screened 208 metastatic castration-resistant prostate cancer (mCRPC) biopsies searching for genes commonly observed in tumors. Once they identified candidate genes, the investigators compared their expression to that of signatures related to cytotoxic T cells (CTLs), the immune cell subset responsible for identifying and killing cancer cells. The analysis identified an enzyme, ubiquitin-like modifier activating enzyme 1 (UBA1), which the researchers found particularly interesting. Tumors with high expression of UBA1 had low expression of CTL genes.

Further investigation revealed that elevated UBA1 expression predicted which tumors would develop resistance to ICI and which patients would experience the shortest survival outcomes.

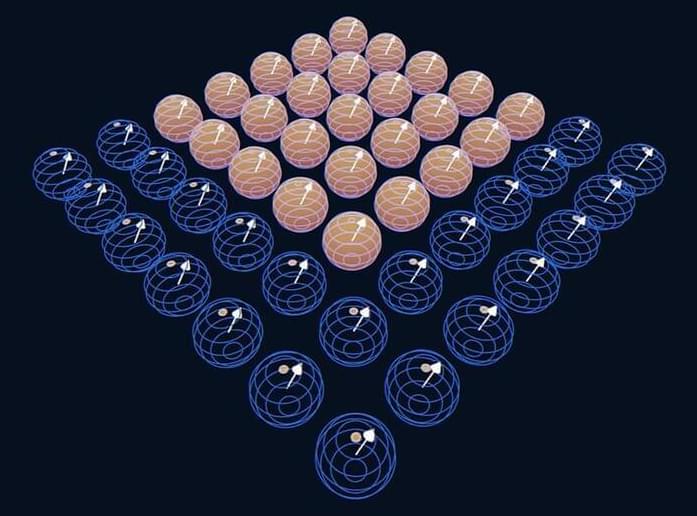

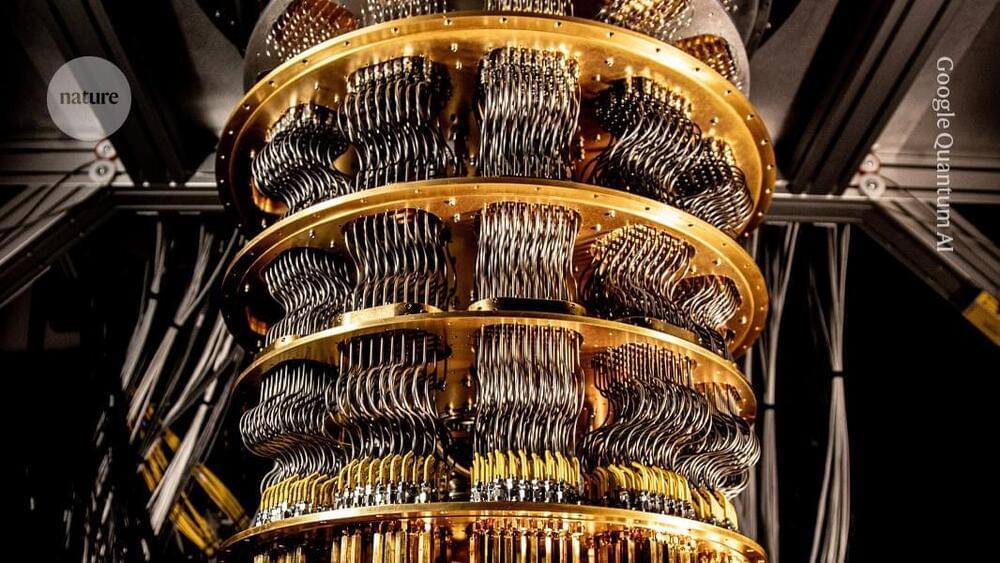

Researchers at Google have built a chip that has enabled them to demonstrate the first ‘below threshold’ quantum calculations — a key milestone in the quest to build quantum computers that are accurate enough to be useful.

The experiment, described on 9 December in Nature, shows that with the right error-correction techniques, quantum computers can perform calculations with increasing accuracy as they are scaled up, with the rate of this improvement exceeding a crucial threshold. Current quantum computers are too small and too error-prone for most commercial or scientific applications.

Avalo, a crop development company based in North Carolina, is using machine learning models to accelerate the creation of new and resilient crop varieties.

The traditional way to select for favorable traits in crops is to identify individual plants that exhibit the trait – such as drought resistance – and use those plants to pollinate others, before planting those seeds in fields to see how they perform. But that process requires growing a plant through its entire life cycle to see the result, which can take many years.

Avalo uses an algorithm to identify the genetic basis of complex traits like drought or pest resistance in hundreds of crop varieties. Plants are cross-pollinated in the conventional way, but the algorithm can predict the performance of a seed without needing to grow it – speeding up the process by as much as 70%, according to Avalo chief technology officer Mariano Alvarez.