For decades, building physiologically relevant human skin models has been a persistent challenge in dermatological research. Conventional approaches, such as rodent models and two-dimensional skin cultures, fail to replicate the complexity and function of human skin, particularly the development of appendages such as hair follicles. These gaps hinder the translation of laboratory findings into effective clinical treatments. The scientific community has long recognized the need for advanced skin models that faithfully reproduce human skin's structure and function.

In a study published on January 16, 2025, in the journal Burns & Trauma (DOI: 10.1093/burnst/tkae070), researchers reported notable progress in skin regeneration. They found that an air-liquid interface (ALI) culture method significantly enhances hair follicle formation in skin organoids derived from human induced pluripotent stem cells (hiPSCs), compared with traditional floating culture. The finding could help advance therapies for skin disorders and the development of next-generation skin regeneration strategies.

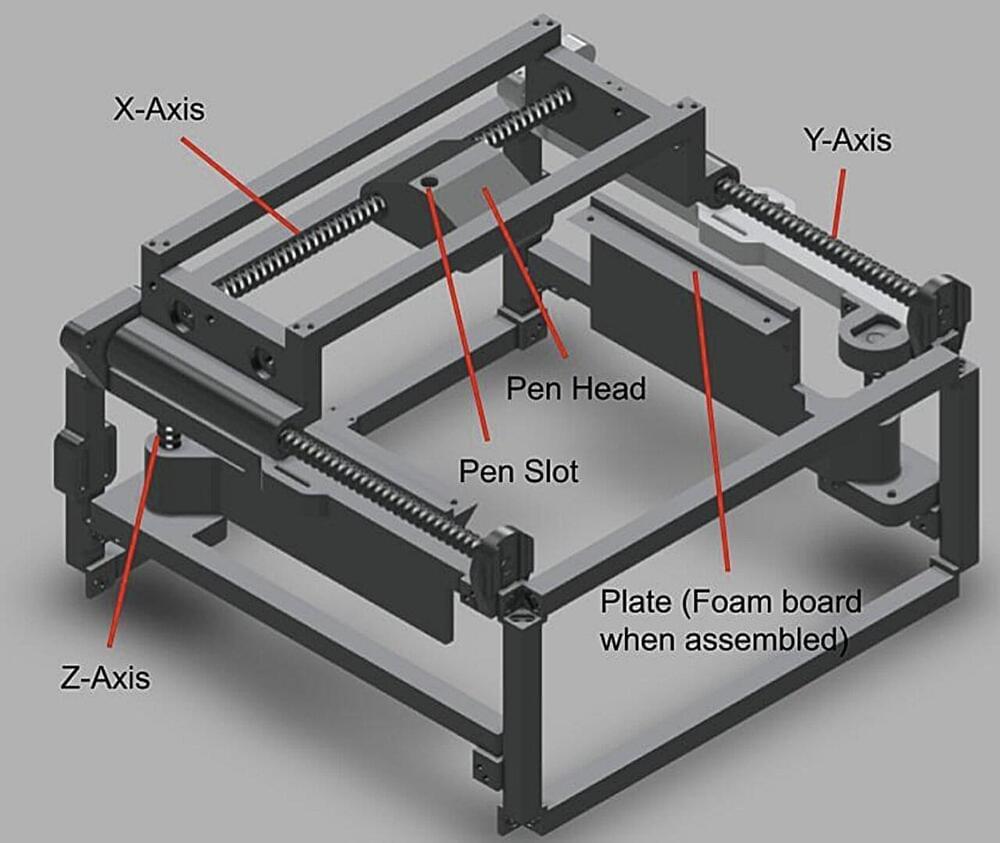

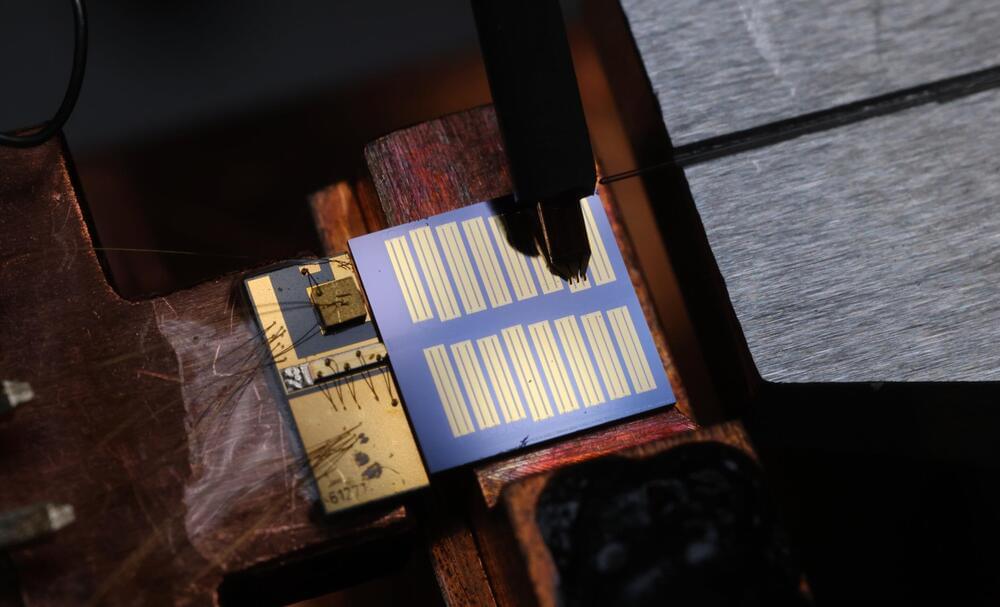

The researchers grew hiPSC-derived skin organoids (SKOs) at an air-liquid interface on transwell membranes and compared the results with those of conventional floating culture. The differences were striking: SKOs cultured at the ALI developed more hair follicles, with greater structural complexity. These follicles were also larger and more mature, and displayed features resembling natural hair shafts, closely mirroring hair follicle development in vivo. ALI-cultured SKOs additionally showed enhanced epidermal stratification and differentiation, indicating a more faithful reproduction of human skin architecture. Together, these findings highlight the promise of ALI culture for skin organoid engineering, providing a more physiologically relevant platform for dermatological research and therapeutic development.