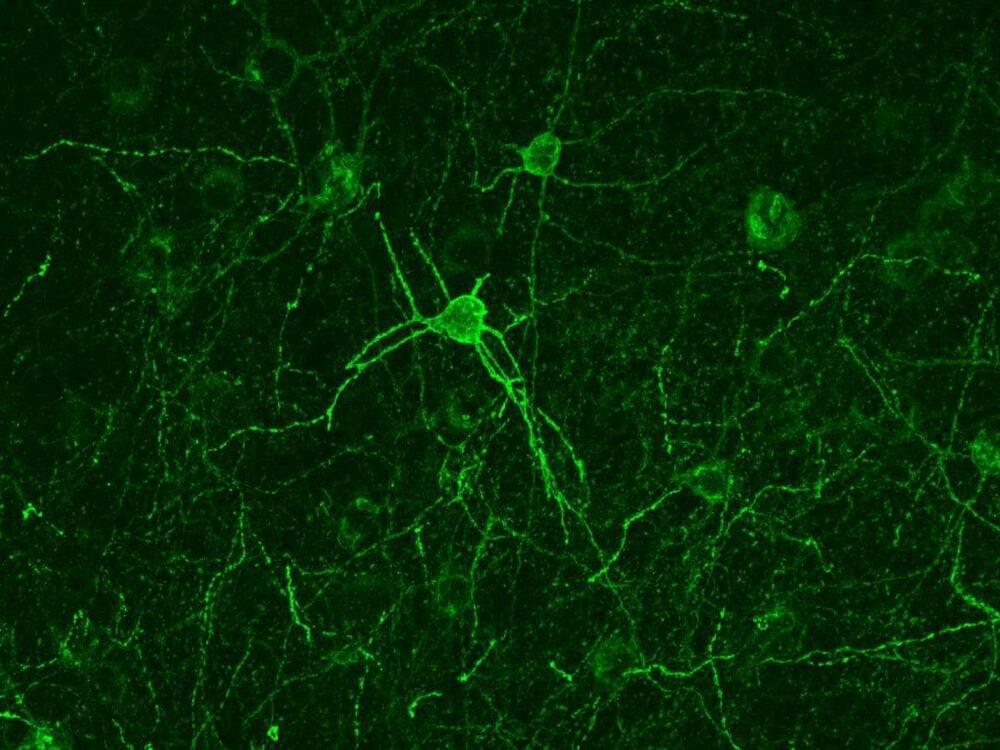

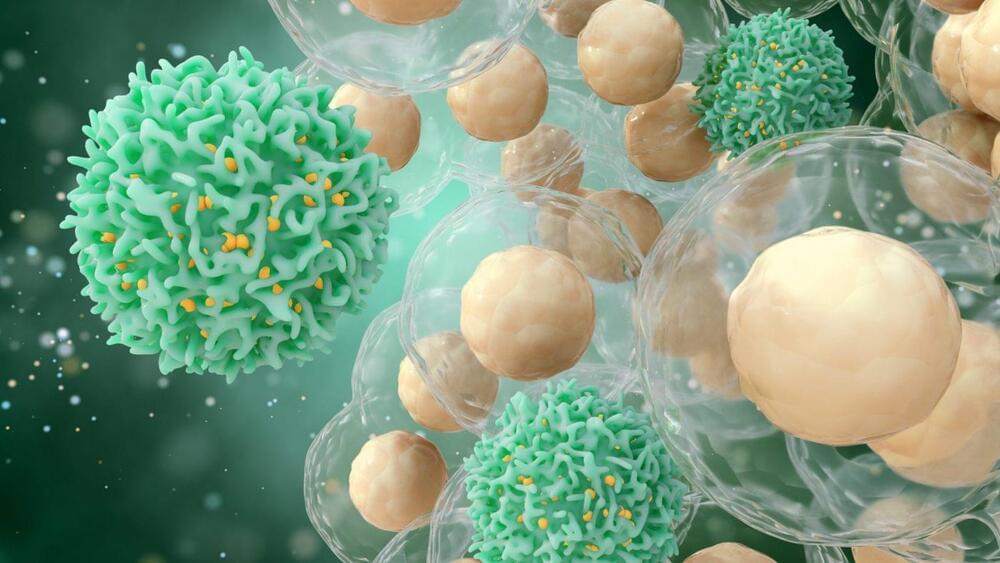

A specific brain mechanism modulates how animals respond empathetically to others’ emotions. This is the latest finding from the Genetics of Cognition research unit, led by Francesco Papaleo, Principal Investigator at the Istituto Italiano di Tecnologia (IIT – Italian Institute of Technology) and affiliated with IRCCS Ospedale Policlinico San Martino in Genova. The study, recently published in Nature Neuroscience, provides new insights into psychiatric conditions in which this socio-cognitive skill is impaired, such as post-traumatic stress disorder (PTSD), autism, and schizophrenia.

Psychological studies have shown that the way humans respond to others’ emotions is strongly influenced by their own past emotional experiences. When we observe another person in an emotional situation similar to one we have lived through, such as a past stressful event, we can react in two different ways. On one hand, it may generate empathy, enhancing the ability to understand others’ problems and increasing sensitivity to others’ altered emotions. On the other hand, it may induce self-distress, resulting in avoidance of others.

The research group at IIT has demonstrated that a similar phenomenon also occurs in animals: recalling a negative experience strongly influences how an individual responds to another who is experiencing that same altered emotional state. More specifically, animals exhibit different reactions only if the negative event they experienced in the past is identical to the one they observe in others. This indicates that animals, too, can recognize a specific emotional state and react accordingly, even without directly seeing the triggering stimuli.