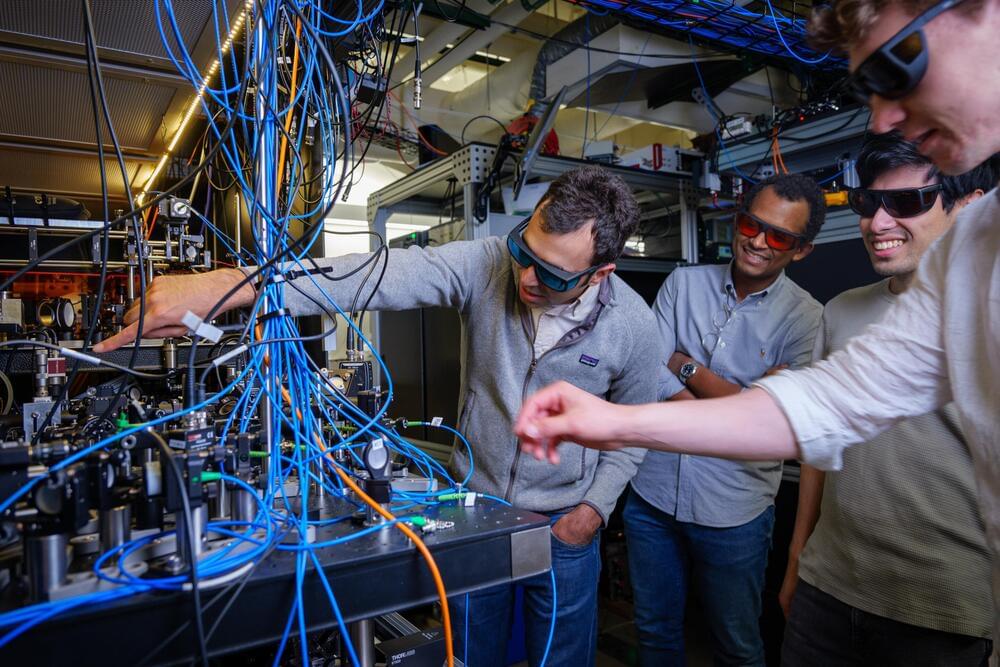

Quantum physicists tap into entanglement to improve the precision of optical atomic clocks

It’s not your ordinary pocket watch: The researchers showed that, at least under a narrow range of conditions, their clock could beat a benchmark for precision called the “standard quantum limit”—what physicist Adam Kaufman refers to as the “Holy Grail” for optical atomic clocks.
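For context, the standard quantum limit can be stated compactly. What follows is the textbook scaling, not a formula taken from the study; the symbols $N$ (number of atoms) and $\delta\phi$ (uncertainty in the measured phase) are introduced here only for illustration.

$$
\delta\phi_{\mathrm{SQL}} \sim \frac{1}{\sqrt{N}}, \qquad \delta\phi_{\mathrm{Heisenberg}} \sim \frac{1}{N}
$$

With uncorrelated atoms, averaging over $N$ of them reduces the uncertainty only as $1/\sqrt{N}$. Entangled atoms can, in principle, do better and approach the $1/N$ scaling.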

“What we’re able to do is divide the same length of time into smaller and smaller units,” said Kaufman, senior author of the new study and a fellow at JILA, a joint research institute between CU Boulder and NIST. “That acceleration could allow us to track time more precisely.”

The team’s advancements could lead to new quantum technologies, including sensors that can measure subtle changes in the environment, such as how Earth’s gravity shifts with elevation.
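One well-known way a clock can act as a sensor of elevation is gravitational redshift: a clock placed higher in Earth's gravitational field ticks slightly faster. The article does not describe this calculation, so treat the following as a rough sketch under the weak-field approximation. The function name `fractional_shift` and the constant names are assumptions made for illustration.

```python
# Fractional frequency shift between two clocks separated in height,
# using the weak-field approximation: delta_f / f ≈ g * h / c**2.
# The names below are illustrative and do not come from the article.

G_SURFACE = 9.80665        # standard gravity, m/s^2
C_LIGHT = 299_792_458.0    # speed of light, m/s (exact by definition)


def fractional_shift(height_m: float) -> float:
    """Return the approximate fractional frequency difference for a
    height separation of height_m meters near Earth's surface."""
    return G_SURFACE * height_m / C_LIGHT**2


if __name__ == "__main__":
    for h in (0.01, 1.0, 100.0):
        print(f"{h:>7.2f} m -> {fractional_shift(h):.3e}")
```

The shift is roughly one part in 10^16 per meter of elevation, which is why resolving small height differences calls for clocks of very high precision.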

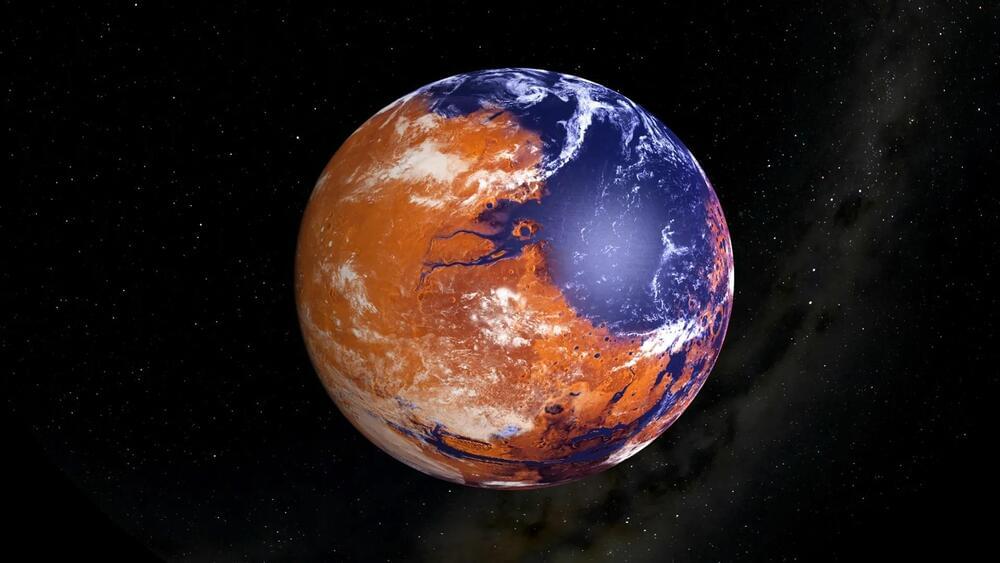

The Habitable Mars? Examining Isotopes in Gale Crater

“The isotope values of these carbonates point toward extreme amounts of evaporation, suggesting that these carbonates likely formed in a climate that could only support transient liquid water,” said Dr. David Burtt.

Was the planet Mars ever habitable and what conditions led to it becoming the uninhabitable world we see today? This is what a recent study published in the Proceedings of the National Academy of Sciences hopes to address as a team of researchers from the United States and Canada investigated how carbonate minerals found within Gale Crater on Mars could help paint a clearer picture of past conditions on the Red Planet and whether it was habitable. This study holds the potential to help scientists better understand the formation and evolution of Mars and whether it once had the necessary conditions to support life as we know it.

Studying carbonate minerals is important because they can tell scientists how a climate formed and evolved over time. The carbonates in this study contain large amounts of carbon and oxygen isotopes, specifically Carbon-13 and Oxygen-18, which the study notes are the highest amounts of these isotopes identified on the Red Planet. Carbon-13 and Oxygen-18 are known as environmental isotopes, which are used to better understand the interactions between a planet’s ocean and atmosphere and how life could exist. While Earth is the only planet known to support life, studying these isotopes on Mars could help scientists better understand whether life could have formed there long ago.

Meet Geoffrey Hinton: Winner of the 2024 Nobel Prize in Physics

University of Toronto professor Geoffrey Hinton has been awarded the Nobel Prize in Physics for his work in AI. Adrian Ghobrial has more.

Scientists develop revolutionary material that could unlock next-level efficiency for existing engines: ‘It opens the door for new possibilities’

Hydrogen fuel, which produces no heat-trapping air pollution at the point of use, could be the future of clean energy. But first, some of the surrounding technology still has to be improved, and researchers at the University of Alberta believe they have made an important step in that direction, AL Circle reported.

The breakthrough out of the University of Alberta is a new alloy material — dubbed AlCrTiVNi5 — that consists of metals such as aluminum and nickel. The alloy has great potential for coating surfaces that have to endure extremely high temperatures, such as gas turbines, power stations, airplane engines, and hydrogen combustion engines.

Hydrogen combustion engines, which are being used to develop cars that run on clean energy, are different from fuel cells, which also run on hydrogen. While fuel cells rely on a chemical process to convert hydrogen into electricity, hydrogen combustion engines burn hydrogen fuel, creating energy via combustion, just like a traditional gas-powered car (but without all the pollution).

‘Severe’ geomagnetic storm could blow power grid with satellite, radio blackouts possible during Milton

“Satellite navigation (GPS) degraded or inoperable for hours,” the SWPC warned. “Radio – HF (high frequency) radio propagation sporadic or blacked out.”

This severe geomagnetic storm is forecast for the same time that Hurricane Milton is expected to strike Florida. While the SWPC did not note whether satellite issues could hinder hurricane monitoring, radio blackouts are expected.

Breaking up big tech: US wants to separate Android, Play, and Chrome from Google

The US Department of Justice (DoJ) has submitted a new “Proposed Remedy Framework” to correct Google’s violation of antitrust laws in the country (h/t Mishaal Rahman). This framework seeks to remedy the harm caused by Google’s search distribution and revenue sharing, generation and display of search results, advertising scale and monetization, and accumulation and use of data.

The most drastic of the proposed solutions includes preventing Google from using its products, such as Chrome, Play, and Android, to advantage Google Search and related products. Other solutions include allowing websites to opt out of being used for training or appearing in Google-owned AI products, such as AI Overviews in Google Search.

Google responded to this by asserting that “DOJ’s radical and sweeping proposals risk hurting consumers, businesses, and developers.” While the company intends to respond in detail to DoJ’s final proposals, it says that the DoJ is “already signaling requests that go far beyond the specific legal issues in this case.”

Radian Single Stage to Orbit Space Plane

Radian Aerospace has $31 million of funding to develop a single-stage-to-orbit spaceplane. The vehicle will use a 3,000-meter-long sled to get up to launch speed. It is intended to deliver 2.27 tons to anywhere on Earth in under one hour, and it can land on a regular runway.

The spaceplane will be about as long as a Boeing 787 and as wide as a 737.

The spaceplane is designed to be fully reusable for up to 100 missions. The company plans a 48-hour turnaround time between flights and a 90-minute on-demand launch capability, which it expects to significantly reduce costs and increase mission flexibility.