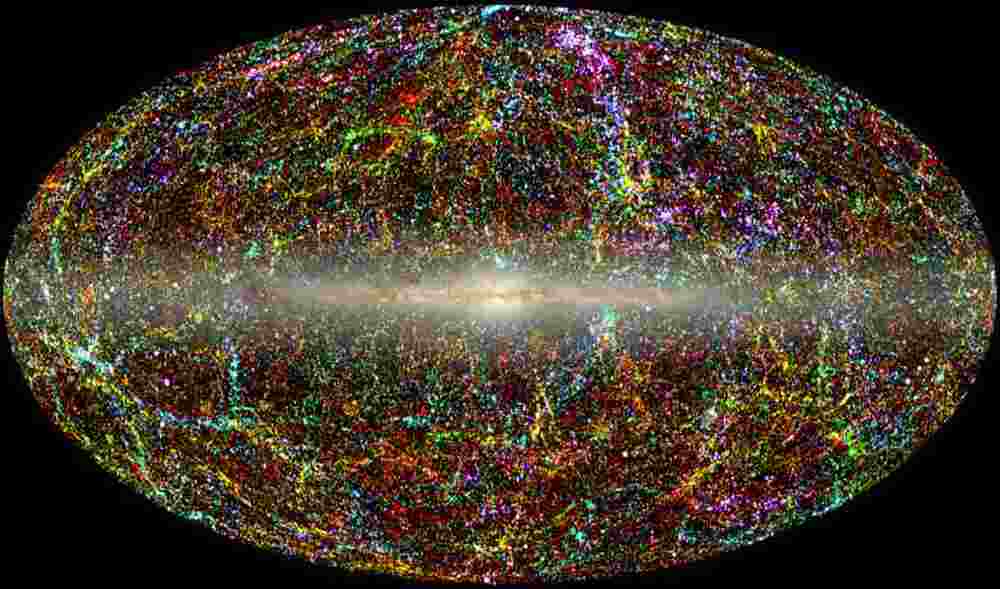

As the universe evolves, scientists expect large cosmic structures to grow at a certain rate: dense regions such as galaxy clusters should grow denser, while the voids of space should grow emptier.

But University of Michigan researchers have discovered that these large structures grow more slowly than Einstein’s theory of general relativity predicts.

They also showed that as dark energy accelerates the universe’s global expansion, the suppression of cosmic structure growth seen in their data is even more prominent than the theory predicts. Their results are published in Physical Review Letters.