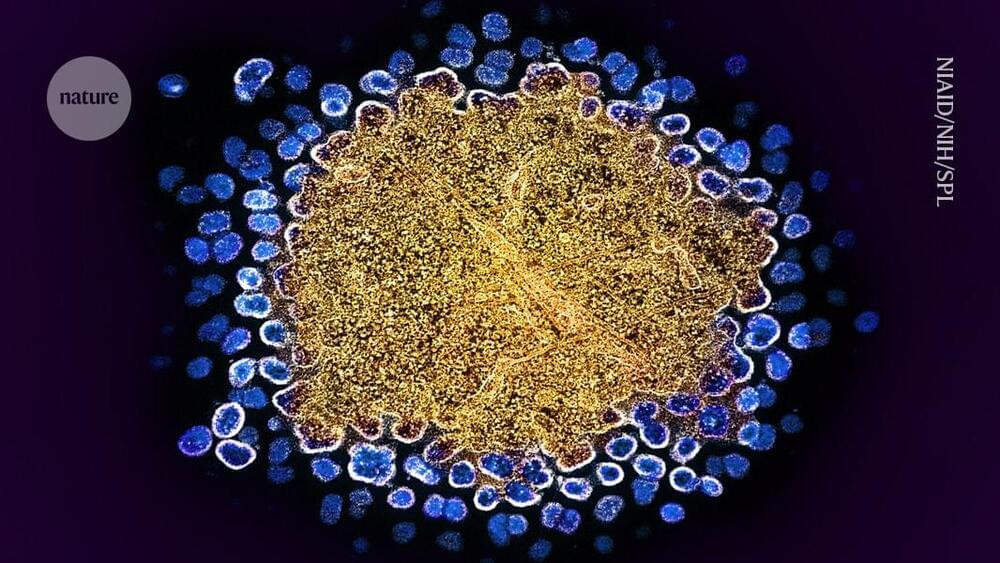

A 60-year-old man in Germany has become at least the seventh person with HIV to be announced free of the virus after receiving a stem-cell transplant<sup>1</sup>. But the man, who has been virus-free for close to six years, is only the second person to receive stem cells that are not resistant to the virus.

“I am quite surprised that it worked,” says Ravindra Gupta, a microbiologist at the University of Cambridge, UK, who led a team that treated one of the other people who are now free of HIV<sup>2,3</sup>. “It’s a big deal.”

The first person found to be HIV-free after a bone-marrow transplant to treat blood cancer<sup>4</sup> was Timothy Ray Brown, who is known as the Berlin patient. Brown and a handful of others received special donor stem cells<sup>2,3</sup>. These carried a mutation in the gene that encodes a receptor called CCR5, which most HIV strains use to enter immune cells. To many scientists, these cases suggested that CCR5 was the best target for an HIV cure.