IBM has just unveiled its boldest quantum computing roadmap yet. At its center is Starling, which IBM describes as the first large-scale, fault-tolerant quantum computer, planned for 2029. IBM says Starling will be capable of running 20,000 times more operations than today’s quantum machines, which could unlock breakthroughs in chemistry, materials science, and optimization.

According to IBM, this is not a pie-in-the-sky roadmap: the company says it has what it needs to make Starling happen.

In this exclusive conversation, I speak with Jerry Chow, IBM Fellow and Director of Quantum Systems, about the engineering breakthroughs that are making this possible, especially a radically more efficient error correction code and new multi-layered qubit architectures.

We cover:

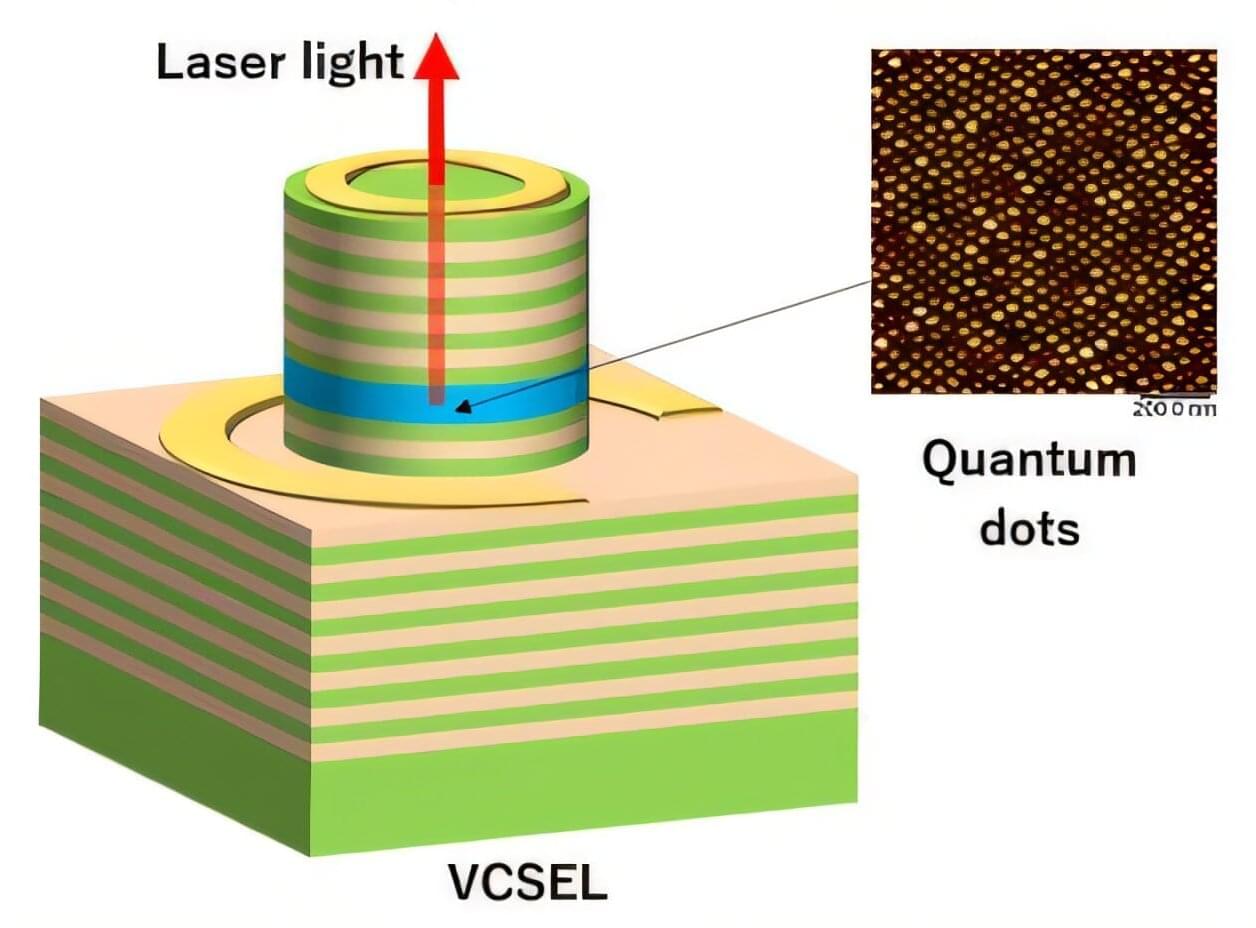

- The shift from millions of physical qubits to manageable logical qubits.

- Why IBM is using quantum low-density parity check (qLDPC) codes.

- How modular quantum systems (like Kookaburra and Cockatoo) will scale the technology.

- Real-world quantum-classical hybrid applications already happening today.

- Why now is the time for developers to start building quantum-native algorithms.

00:00 Introduction to the Future of Computing.

01:04 IBM’s Jerry Chow.

01:49 Quantum Supremacy.

02:47 IBM’s Quantum Roadmap.

04:03 Technological Innovations in Quantum Computing.

05:59 Challenges and Solutions in Quantum Computing.

09:40 Quantum Processor Development.

14:04 Quantum Computing Applications and Future Prospects.

20:41 Personal Journey in Quantum Computing.

24:03 Conclusion and Final Thoughts.