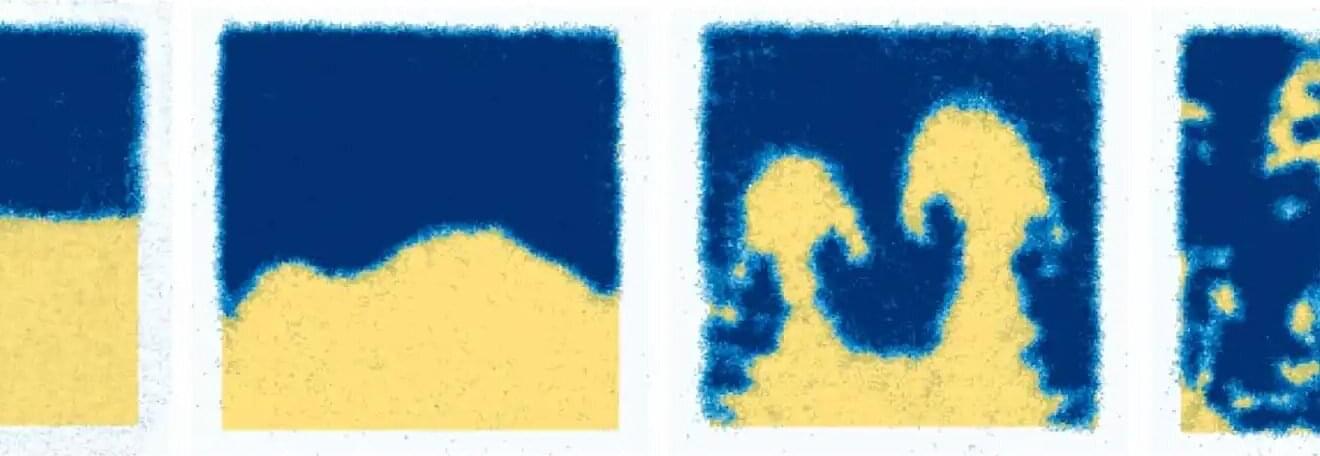

Briefly anesthetizing the retina temporarily returns the visual system's activity to the pattern seen in early development and allows responses to the amblyopic eye to grow stronger, new research shows.

In the common vision disorder amblyopia, impaired vision in one eye during development causes neural connections in the brain’s visual system to shift toward supporting the other eye, leaving the amblyopic eye less capable even after the original impairment is corrected. Current interventions are effective only during infancy and early childhood, while these neural connections are still being formed.

But a new study in mice by neuroscientists in The Picower Institute for Learning and Memory at MIT shows that if the retina of the amblyopic eye is temporarily and reversibly anesthetized for just a couple of days, the brain’s visual response to that eye can be restored, even in adulthood.