In 2014, a team of Googlers (many of whom were former educators) launched Google Classroom as a “mission control” for teachers. With a central place to bring Google’s collaboration tools together, and a constant feedback loop with schools through the Google for Education Pilot Program, Classroom has evolved from a simple assignment distribution tool to a destination for everything a school needs to deliver real learning impact.


Frontiers: Introduction: The integration of ChatGPT, an advanced AI-powered chatbot, into educational settings has caused mixed reactions among educators

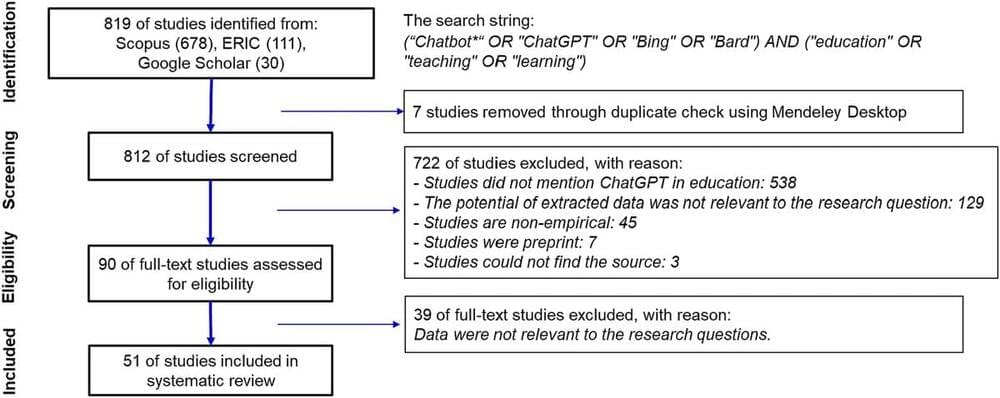

Introduction: The integration of ChatGPT, an advanced AI-powered chatbot, into educational settings has caused mixed reactions among educators. Therefore, we conducted a systematic review to explore the strengths and weaknesses of using ChatGPT and to discuss the opportunities and threats of using it in teaching and learning.

Methods: Following the PRISMA flowchart guidelines, 51 articles were selected from 819 studies collected from the Scopus, ERIC, and Google Scholar databases and published between 2022 and 2023.

Results: The synthesis of data extracted from the 51 included articles revealed 32 topics: 13 strengths, 10 weaknesses, 5 opportunities, and 4 threats of using ChatGPT in teaching and learning. We used Biggs’s Presage-Process-Product (3P) model of teaching and learning to categorize the topics into the model’s three components.

How to Prompt ChatGPT to Teach You Anything

ChatGPT, Gemini, and Apple Intelligence can all be great teaching tools for teaching yourself nearly anything. Even college coursework can be worked through quickly with AI like GPT-4, because it can handle more advanced reasoning than even humans can in any subject. One way to think of it is that GPT-4 is like having a Neuralink without needing a physical device inside the brain. In effect, AI can augment us to become almost godlike, simply by letting us farm out hard mental labor to a computer instead of using our own brains.

I created a prompt chain that enables you to learn any complex concept from ChatGPT.

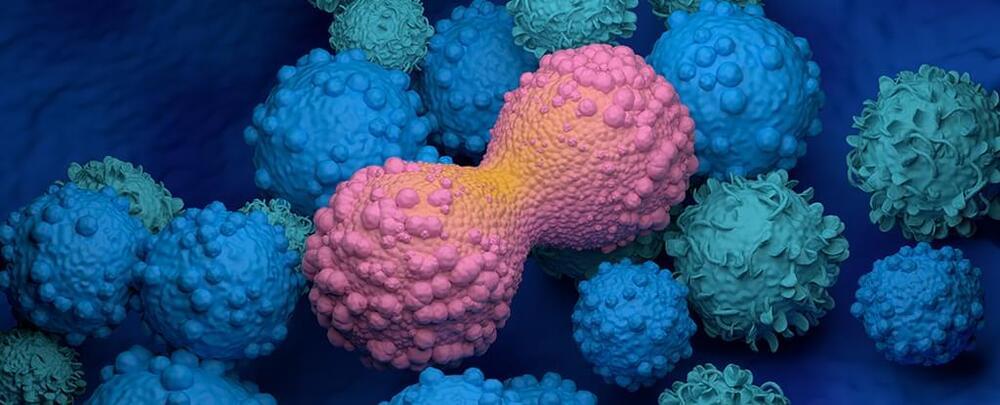

Mysterious Finding Shows Healthy Tissue Can Resemble Invasive Cancer

How we classify cancer and spot it in its earliest stages could need an urgent rethink: researchers have found that even some healthy women carry cells with the key hallmarks of breast cancer.

These cells are known as aneuploid cells, and have an abnormal number of chromosomes. They’re common in invasive breast cancer, and it’s thought the chromosome imbalance enables cancer to spread and evade the body’s immune defenses.

Now it appears aneuploid cells might also be present even when there’s no cancer in sight. The researchers, from the University of Texas and the Baylor College of Medicine in Texas, found them in breast tissue samples from 49 healthy women.

Neural networks unlock potential of high-entropy carbonitrides in extreme environments

The melting point is one of the most important measurements of material properties, which informs potential applications of materials in various fields. Experimental measurement of the melting point is complex and expensive, but computational methods could help achieve an equally accurate result more quickly and easily.

A research group from Skoltech conducted a study to calculate the maximum melting point of a high-entropy carbonitride, a compound of titanium, zirconium, tantalum, hafnium, and niobium with carbon and nitrogen.

The results, published in the journal Scientific Reports, indicate that high-entropy carbonitrides are promising materials for protective coatings on equipment operating under extreme conditions: high temperature, thermal shock, and chemical corrosion.

Engineers achieve quantum teleportation over active internet cables

Engineers at Northwestern University have demonstrated quantum teleportation over a fiber optic cable already carrying Internet traffic. This feat, published in the journal Optica, opens up new possibilities for combining quantum communication with existing Internet infrastructure. It also has major implications for the field of advanced sensing technologies and quantum computing applications.

Quantum teleportation, a process that harnesses the power of quantum entanglement, enables an ultra-fast and secure method of information sharing between distant network users. Unlike traditional communication methods, quantum teleportation does not require the physical transmission of particles. Instead, it relies on entangled particles exchanging information over great distances.

Nobody thought it would be possible to achieve this, according to Professor Prem Kumar, who led the study. “Our work shows a path towards next-generation quantum and classical networks sharing a unified fiber optic infrastructure. Basically, it opens the door to pushing quantum communications to the next level.”

Scientists push boundaries with high-tech device that turns heat source into readily available energy — here’s how it works

The International Renewable Energy Agency says breakthroughs like this, along with others such as solar panels that work at night or China’s flywheel energy storage project, are key to cutting back on dirty energy use and creating stronger and more reliable power systems.

“Further international cooperation is vital to deliver fit-for-purpose grids, sufficient energy storage and faster electrification, which are integral to move clean energy transitions quickly and securely,” Executive Director of the International Energy Agency Fatih Birol said in an IEA report.

This new way of storing energy could deliver cleaner, more affordable energy to cities, businesses, and homes. Researchers at Rice University believe it could be widely available in five to 10 years, making renewable energy more practical and accessible.