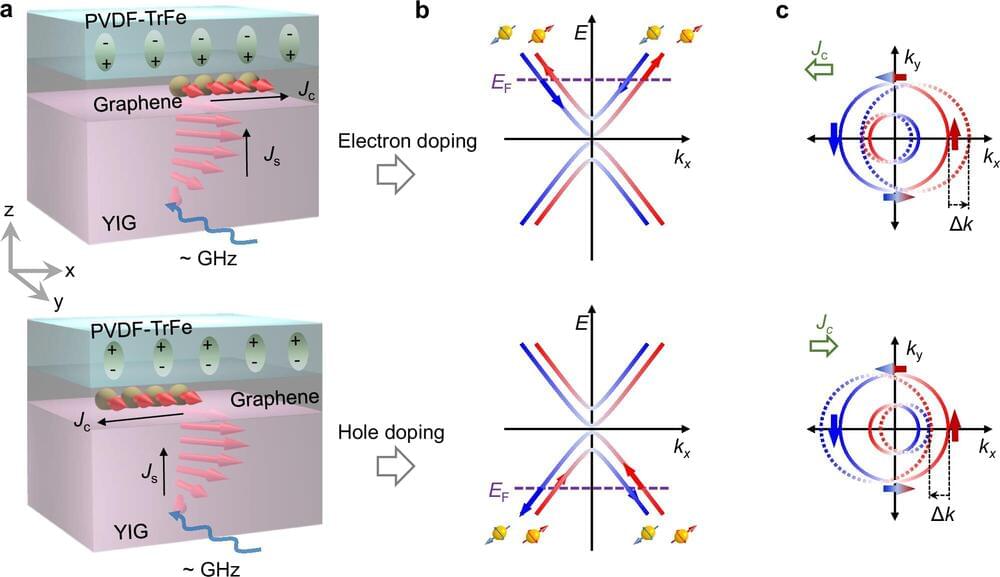

A research team led by Professor Jung-Woo Yoo of the Department of Materials Science and Engineering at UNIST has unveiled a new type of magnetic memory device designed to reduce power consumption and heat generation in MRAM semiconductors. The work was published in Nature Communications on October 10, 2024.

Magnetic random access memory (MRAM) is regarded as a next-generation memory technology that combines the strengths of NAND flash and DRAM. It is non-volatile, meaning data is preserved even when the device is powered off, yet it achieves speeds comparable to DRAM. MRAM has already been commercialized in sectors that require fast and reliable data access.

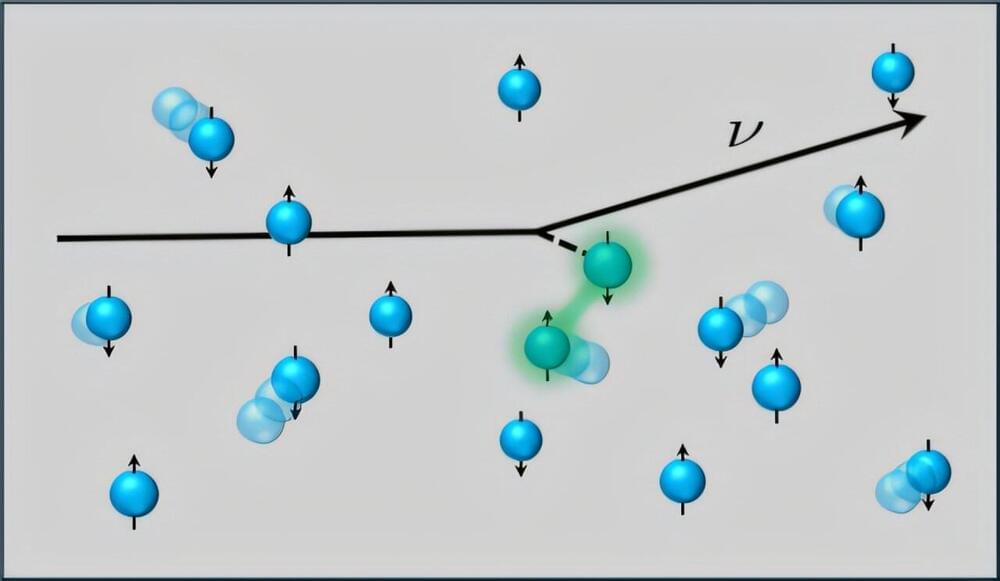

Traditional MRAM devices rely on electric current to write and erase data. In these devices, when the magnetization directions of the two magnetic layers are aligned (parallel), the resistance is low; when they are opposite (antiparallel), the resistance is high. These two resistance states encode the binary values 0 and 1. However, changing the magnetization direction requires a current above a critical threshold, which leads to significant power consumption and heat generation.
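To make the read/write mechanism concrete, here is a minimal toy model of a single current-switched MRAM bit. It is a sketch under stated assumptions, not the UNIST team's device or any real chip: the resistance values, the critical current `I_CRITICAL`, the names `MTJCell`, `tmr_ratio` and `joule_power`, and the convention that low resistance reads as 0 are all hypothetical. The model also ignores which current direction selects which state and simply switches whenever the pulse exceeds the threshold.

```python
from dataclasses import dataclass

# Hypothetical parameters for illustration only; not values from the UNIST study.
R_PARALLEL = 1_000.0      # ohms, low-resistance (parallel) state
R_ANTIPARALLEL = 2_000.0  # ohms, high-resistance (antiparallel) state
I_CRITICAL = 50e-6        # amperes, assumed critical switching current


@dataclass
class MTJCell:
    """Toy model of one MRAM bit: a free magnetic layer over a fixed reference layer."""
    parallel: bool = True  # True: magnetizations aligned (low resistance)

    def resistance(self) -> float:
        return R_PARALLEL if self.parallel else R_ANTIPARALLEL

    def read(self) -> int:
        # Assumed convention: low resistance -> 0, high resistance -> 1.
        return 0 if self.parallel else 1

    def write(self, bit: int, current: float) -> bool:
        """Try to store `bit`; the layer only switches if |current| exceeds I_CRITICAL.

        Simplification: current direction is abstracted away, so any
        above-threshold pulse sets the requested state.
        """
        if abs(current) <= I_CRITICAL:
            return False  # below threshold: magnetization stays put
        self.parallel = (bit == 0)
        return True


def tmr_ratio(r_p: float, r_ap: float) -> float:
    """Tunnel magnetoresistance ratio, (R_AP - R_P) / R_P."""
    return (r_ap - r_p) / r_p


def joule_power(current: float, resistance: float) -> float:
    """Power dissipated as heat in a resistor, P = I^2 * R."""
    return current ** 2 * resistance
```

With these assumed numbers, a 20 µA pulse leaves a fresh cell reading 0, while a 60 µA pulse flips it to 1. Because dissipated power grows with the square of the current, the critical threshold sets a floor on the heat generated per write for a given pulse duration, which is the cost the paragraph above describes.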