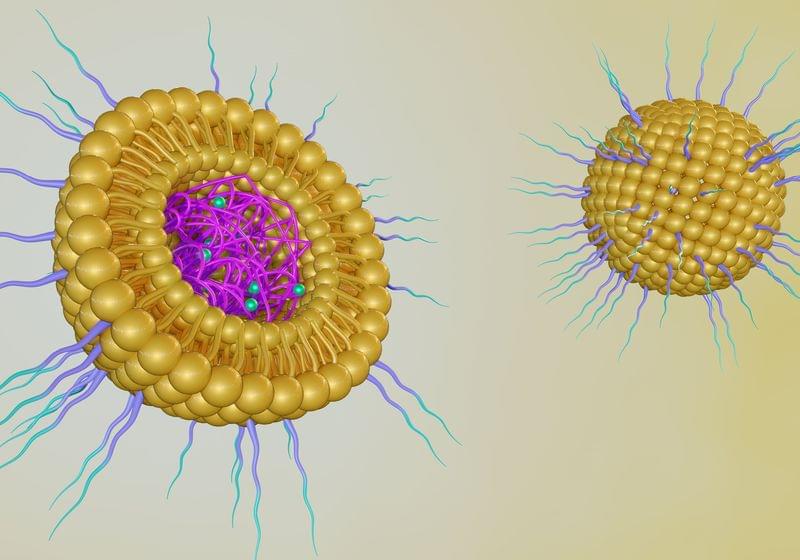

A reimagined lipid vehicle for nucleic acids could overcome the limitations of current vectors.

Researchers develop targeted polymersomes to enhance methotrexate delivery, offering a promising new approach for treating aggressive choriocarcinoma.

Study: ENT-1-Targeted Polymersomes to Enhance the Efficacy of Methotrexate in Choriocarcinoma Treatment. Image Credit: Shutterstock AI Generator / Shutterstock.com.

In a recent study published in Small Science, researchers develop targeted polymersomes loaded with methotrexate for the treatment of gestational choriocarcinoma, a rare and aggressive malignancy originating from the placenta.

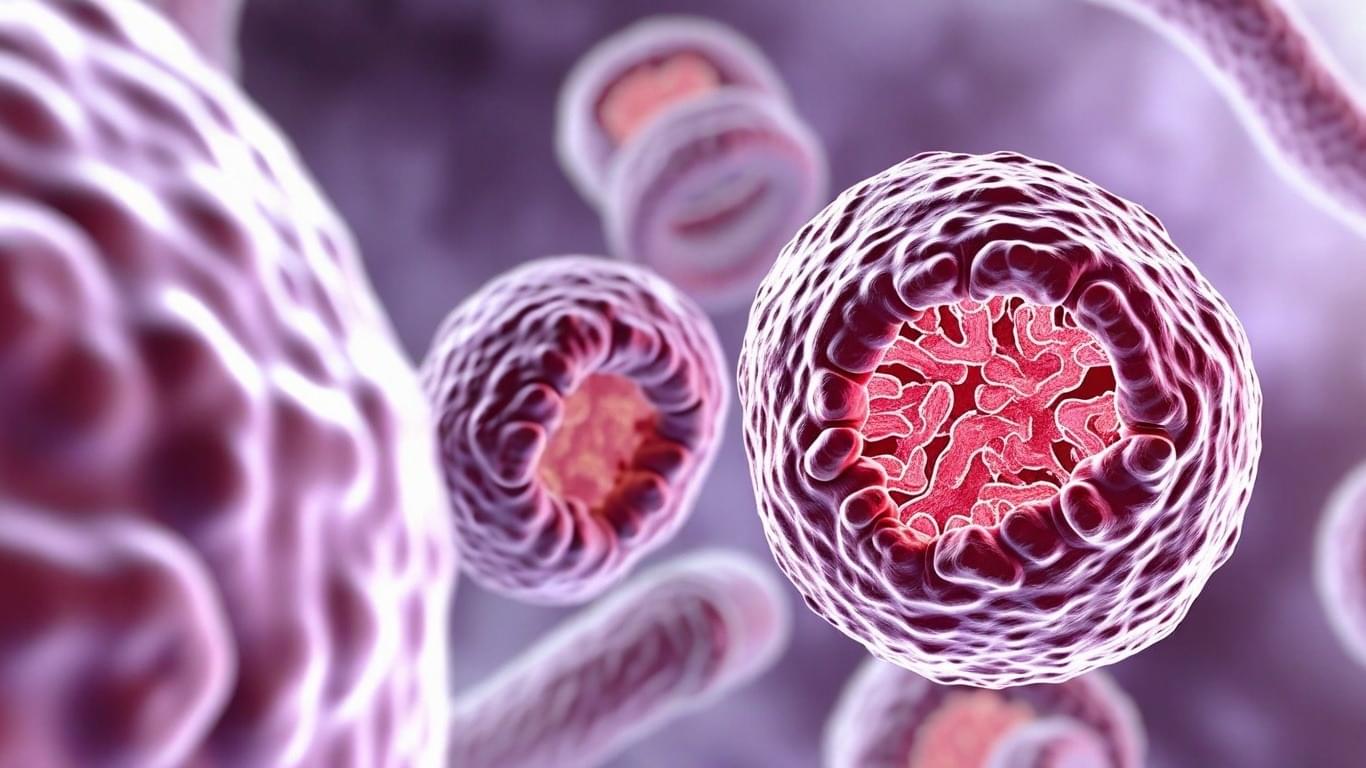

Imagine a world where your thoughts aren’t confined to the boundaries of your skull, where your consciousness is intimately connected to the universe around you, and where the neurons in your brain communicate instantly across vast distances.

This isn’t science fiction — it’s the intriguing possibility suggested by applying the principle of quantum entanglement to the realm of consciousness.

Quantum entanglement, often described as the “spooky action at a distance,” is a phenomenon that baffled even Einstein. In essence, it describes a scenario where two particles become so deeply linked that they share the same fate, regardless of the distance separating them. Measuring the state of one instantly reveals the state of its partner, even if they are light-years apart.

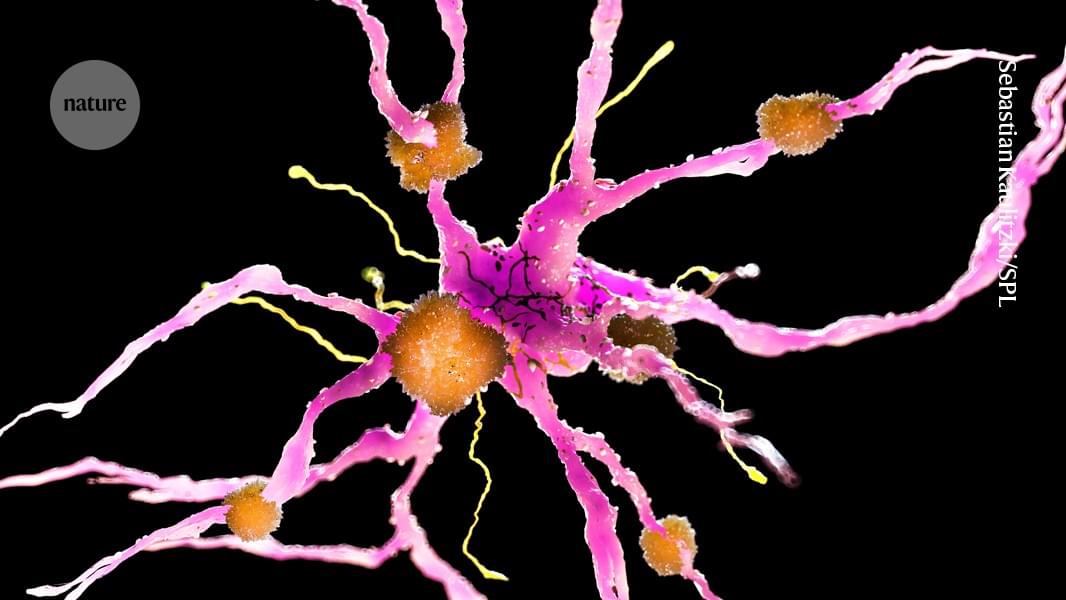

Qubits—the fundamental units of quantum information—drive entire tech sectors. Among them, superconducting qubits could be instrumental in building a large-scale quantum computer, but they rely on electrical signals and are difficult to scale.

In a breakthrough, a team of physicists at the Institute of Science and Technology Austria (ISTA) has achieved a fully optical readout of superconducting qubits, pushing the technology beyond its current limitations. Their findings are published in Nature Physics.

Following a year-long rally, quantum computing stocks were brought to a standstill barely a few days into the International Year of Quantum Science and Technology. The reason for this sudden setback was Nvidia CEO Jensen Huang’s keynote at the CES 2025 tech trade show, where he predicted that “very useful quantum computers” were still two decades down the road.

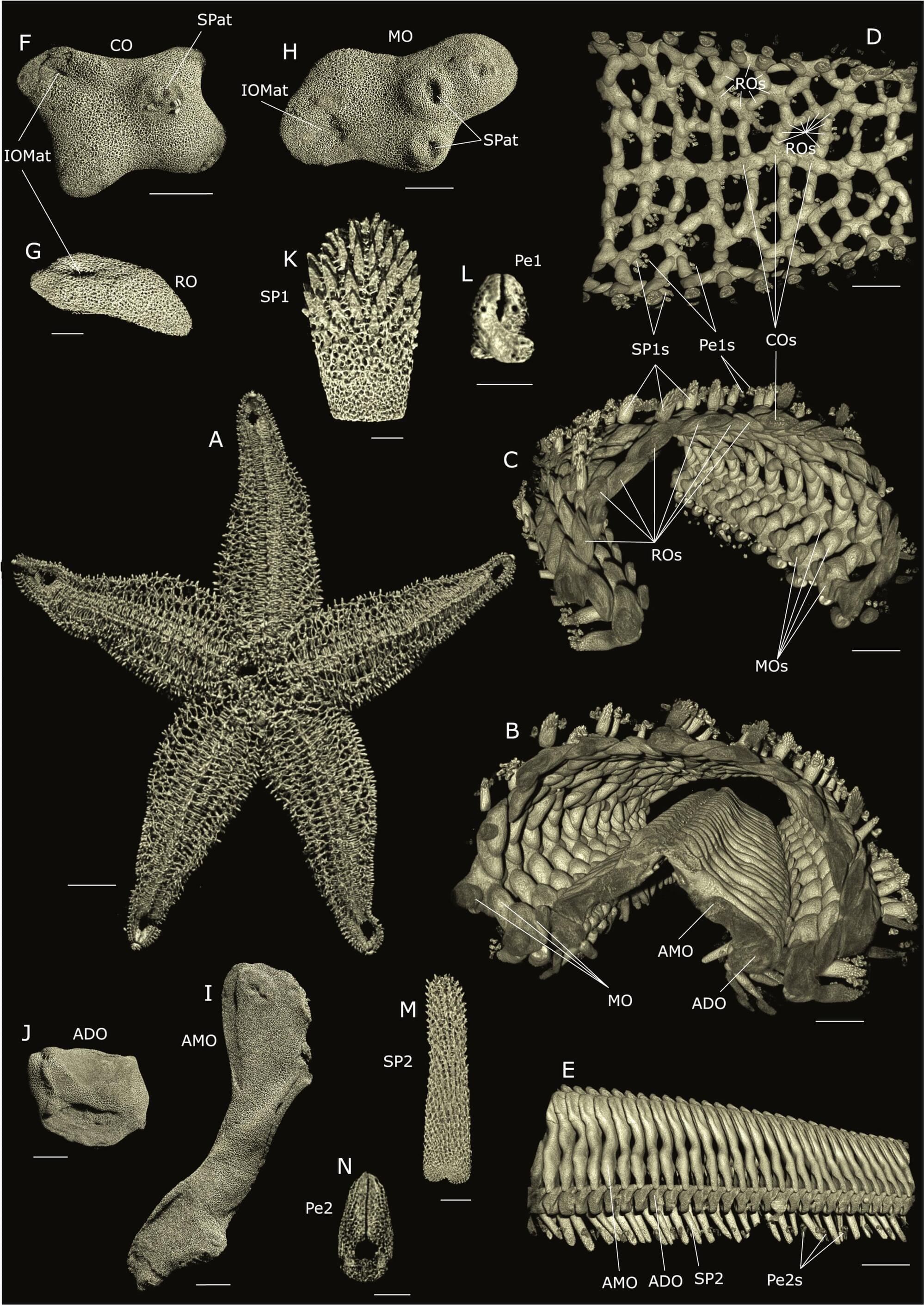

Researchers at the Biomimetics-Innovation-Center, Hochschule Bremen—City University of Applied Sciences, have made pioneering discoveries about how mechanical stress shapes the ultrastructure of starfish skeletons. Published in Acta Biomaterialia, their study delivers the first in-depth analysis of how starfish skeletons respond to varying stress conditions, revealing new insights into the evolutionary mechanisms that drive skeletal adaptation.

While starfish are widely recognized—especially thanks to pop-culture icons like Patrick Star in SpongeBob SquarePants—their remarkable internal structure often goes unnoticed. Sharing an evolutionary lineage with vertebrates, starfish serve as powerful models for studying the development of endoskeletons.

Their skeletons consist of thousands of small, bone-like elements called ossicles, which feature a complex, porous structure strikingly similar to human and other vertebrate bones. According to lead author Raman and colleagues, these ossicles exhibit microstructural adaptations that mirror the mechanical loads they experience, demonstrating a universal principle of stress adaptation.

In today’s AI news, a consortium of investors led by Elon Musk is offering $97.4 billion to buy the nonprofit that controls OpenAI. The unsolicited offer complicates CEO Sam Altman’s carefully laid plans for OpenAI’s future, including converting it to a for-profit company and spending up to $500 billion on AI infrastructure through a joint venture called Stargate.

In other advancements, all eyes were on French President Emmanuel Macron Sunday at the end of the first day of the AI Action Summit in Paris after he announced a €109 billion investment package. “For me, this summit is not just the announcement of a lot of investment in France. It’s a wake-up call for a European strategy,” he said.

And Current AI, a “public interest” initiative focused on fostering and steering the development of artificial intelligence in societally beneficial directions, was announced at the AI Action Summit in Paris on Monday. It’s kicking off with an initial $400 million in pledges from backers and a plan to pull in $2.5 billion more over the next five years.

Then, ZDNET contributor Jack Wallen reports that his local AI of choice is the open-source Ollama. He recently wrote a piece on how to make using this local LLM easier with the help of a browser extension, which he uses on Linux. But on macOS devices, Jack turns to an easy-to-use, free app called Msty.

In videos, at the AI Action Summit in Paris, Yann LeCun used his presentation, “The Next AI Revolution,” to underscore a fundamental shift in artificial intelligence, one that moves beyond the brute-force approach of large language models. The future of AI hinges on *world models*: structured, adaptive representations that can infer, reason, and plan.

And DeepSeek is not a threat to OpenAI, says Sridhar Ramaswamy, CEO of Snowflake, the $60B public company with $3.5B in revenue growing 30% per year. Sridhar joined Snowflake after it acquired his company, Neeva, for $150M. Mr. Ramaswamy spent 15 years growing Google’s AdWords from $1.5B to over $100B.

Then, former Google CEO Eric Schmidt and Alliant founder Craig Mundie talk with David Rubenstein about the promise, and potential peril, of a new frontier in artificial intelligence, and about their collaboration with the late Henry Kissinger on his final book, Genesis. Recorded January 26, 2025, at The 92nd Street Y in New York City, New York.