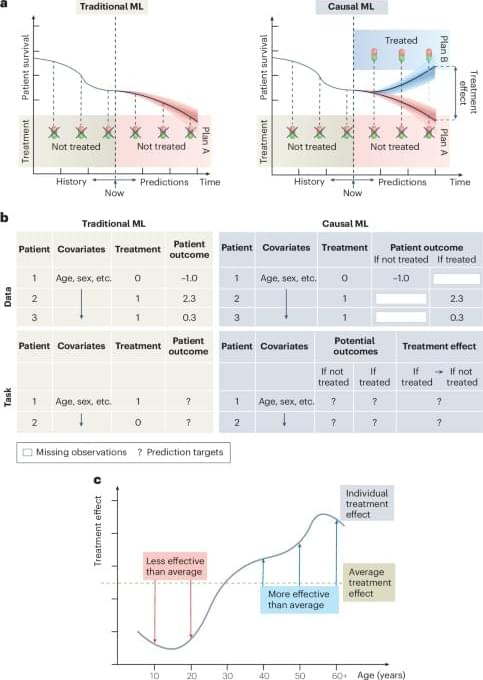

Causal machine learning methods could be used to predict treatment outcomes for subgroups and even individual patients; this Perspective outlines the potential benefits and limitations of the approach, offering practical guidance for appropriate clinical use.

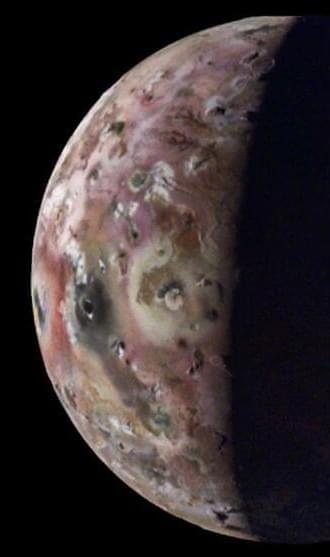

Here’s what the recent measurements suggest — and why it’s too soon to update models of the Universe’s distant future.

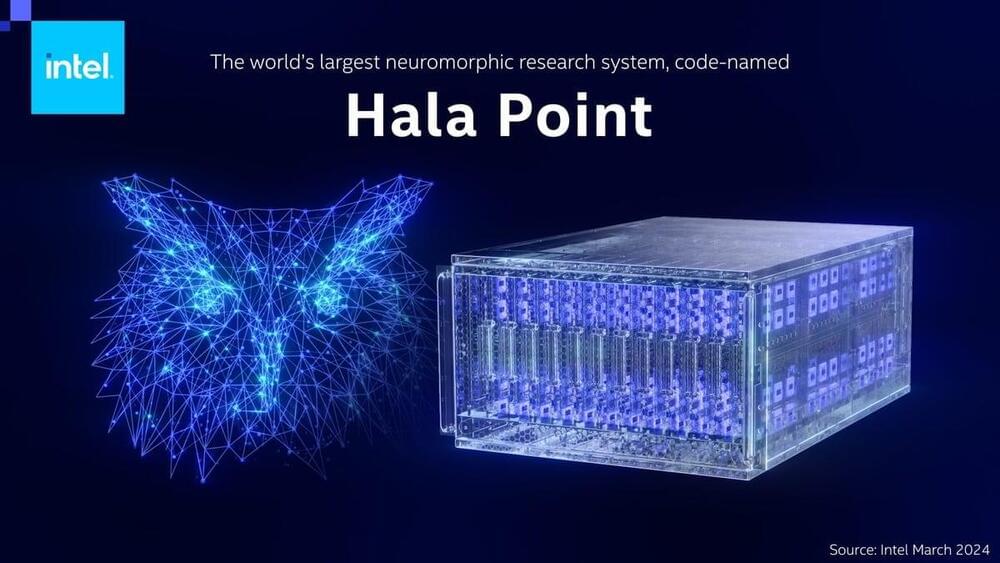

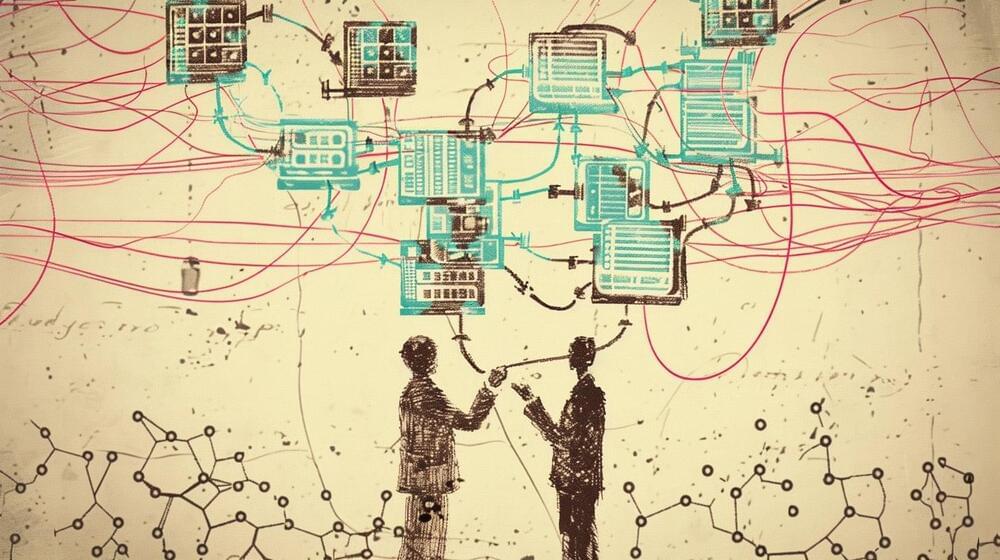

Three years after introducing its second-generation “neuromorphic” computer chip, Intel on Wednesday announced the company has assembled 1,152 of the parts into a single, parallel-processing system called Hala Point, in partnership with the US Department of Energy’s Sandia National Laboratories.

The Hala Point system’s 1,152 Loihi 2 chips provide a total of 1.15 billion artificial neurons, Intel said, “and 128 billion synapses distributed over 140,544 neuromorphic processing cores.” That is a step up from Intel’s previous multi-chip Loihi system, Pohoiki Springs, which debuted in 2020 and used just 768 Loihi 1 chips.
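To put those totals in perspective, the short Python sketch below breaks them down per chip. It uses only the figures quoted above; the neuron and synapse counts are rounded in the source, so the derived values are approximate. The variable and function names are illustrative only.

```python
# Figures quoted by Intel for Hala Point (taken from the text above).
CHIPS = 1_152
CORES = 140_544
NEURONS = 1_150_000_000       # "1.15 billion" (rounded in the source)
SYNAPSES = 128_000_000_000    # "128 billion" (rounded in the source)
POHOIKI_SPRINGS_CHIPS = 768   # previous system, built from Loihi 1 chips


def per_chip(total: int, chips: int = CHIPS) -> float:
    """Average share of a system-wide total that falls on one chip."""
    return total / chips


cores_per_chip = per_chip(CORES)          # exact: the core count divides evenly
neurons_per_chip = per_chip(NEURONS)      # approximate, roughly one million
synapses_per_neuron = SYNAPSES / NEURONS  # approximate average fan-out
chip_ratio = CHIPS / POHOIKI_SPRINGS_CHIPS

print(f"cores per chip:      {cores_per_chip:.0f}")
print(f"neurons per chip:    {neurons_per_chip:,.0f}")
print(f"synapses per neuron: {synapses_per_neuron:.0f}")
print(f"chip count ratio:    {chip_ratio:.1f}x")
```

The chip-count ratio compares only the number of parts. Because the two systems use different chip generations, it says nothing on its own about their relative capacity.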

Sandia Labs intends to use the system for what it calls “brain-scale computing research,” to solve problems in areas of device physics, computer architecture, computer science, and informatics.

CWI senior researcher Sander Bohté began working on neuromorphic computing in 1998 as a PhD student, when the subject was barely on the map. In recent years, Bohté and his CWI colleagues have achieved a number of algorithmic breakthroughs in spiking neural networks (SNNs) that finally make neuromorphic computing practical: in theory, many AI applications can become a hundred to a thousand times more energy-efficient. This means it will be possible to put much more AI into chips, allowing applications to run on a smartwatch or a smartphone. Examples include speech recognition, gesture recognition and the classification of electrocardiograms (ECGs).

“I am really grateful that CWI, and former group leader Han La Poutré in particular, gave me the opportunity to follow my interest, even though at the end of the 1990s neural networks and neuromorphic computing were quite unpopular,” says Bohté. “It was high-risk work for the long haul that is now bearing fruit.”

Spiking neural networks (SNNs) resemble the biology of the brain more closely than classical neural networks do: they process pulses (spikes) instead of continuous signals. Unfortunately, that also makes them much harder to handle mathematically. For many years, SNNs were therefore very limited in the number of neurons they could contain. But thanks to clever algorithmic solutions, Bohté and his colleagues have managed to scale up the number of trainable spiking neurons, first to thousands in 2021 and then to tens of millions in 2023.
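To make “processing pulses” concrete, here is a minimal Python sketch of a single leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is an illustration only and not necessarily the neuron model or the training method used by Bohté’s group; the function name and the `threshold`, `leak` and `reset` parameters are assumptions chosen for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 values, one per step, where 1 marks a spike.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # The membrane potential decays ("leaks") and integrates new input.
        potential = leak * potential + current
        if potential >= threshold:
            # Crossing the threshold emits a spike and resets the potential.
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


spike_train = simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0, 1.2])
print(spike_train)  # [0, 0, 1, 0, 0, 1]
```

The output is all-or-nothing: the neuron either fires or stays silent at each step. That hard threshold has no useful gradient, which is one commonly cited reason why spiking networks are harder to train than networks with continuous activations.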

The telescope’s studies could help end a long-standing disagreement over the rate of cosmic expansion. But scientists say more measurements are needed.

A veteran NASA scientist says his company has tested a propellantless propulsion drive technology that produced one Earth gravity of thrust.

Bostrom’s Deep Utopia


Robin Hanson comments on Nick Bostrom’s new tome … has a great cover with a number of interesting questions and a subtitle that hints that it might address the meaning of life in a future where AI and robots can do everything. But alas, after much build up and anticipation, he leaves that question unanswered, with an abrupt oops, out of time on page 427. … He tries to address meaty topics like, what keeps life interesting? What is our purpose and meaning when the struggle is gone? Can fulfillment get full? But in each case, the pedagogy is more of a survey of all possible answers versus the much more difficult task of making specific predictions.

Researchers introduce a new method called “Selective Language Modeling” that trains language models more efficiently by focusing on the most relevant tokens.

The method leads to significant performance improvements in mathematical tasks, according to a new paper from researchers at Microsoft, Xiamen University, and Tsinghua University. Instead of treating all tokens in a text corpus equally during training, as has been usual, Selective Language Modeling (SLM) focuses specifically on the most relevant tokens.

The researchers first analyzed the training dynamics at the token level. They found that the loss for different token types develops very differently during training. Some tokens are learned quickly, others hardly at all.
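The core idea of training on only a subset of tokens can be sketched in a few lines of Python. This is a simplified illustration, not the paper’s implementation: the `selective_loss` function, the `keep_ratio` parameter and the per-token relevance `scores` are hypothetical, and how the researchers actually score tokens is not described in this excerpt.

```python
import math


def selective_loss(token_losses, scores, keep_ratio=0.5):
    """Average the loss over only the highest-scoring tokens.

    token_losses: per-token training losses for one sequence.
    scores: hypothetical per-token relevance scores (higher = more relevant).
    keep_ratio: fraction of tokens that contribute to the loss.
    Returns (loss, kept_indices).
    """
    if len(token_losses) != len(scores):
        raise ValueError("token_losses and scores must have the same length")
    if not token_losses:
        raise ValueError("at least one token is required")

    # Keep at least one token, rounding the kept count up.
    k = max(1, math.ceil(keep_ratio * len(token_losses)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:k])

    # Tokens outside `kept` are excluded from the average entirely.
    loss = sum(token_losses[i] for i in kept) / k
    return loss, kept


losses = [2.0, 0.1, 3.0, 0.2]
scores = [0.9, 0.0, 1.5, 0.1]  # made-up scores, for illustration only

loss, kept = selective_loss(losses, scores, keep_ratio=0.5)
uniform_loss = sum(losses) / len(losses)  # conventional loss over all tokens
print(kept, loss, uniform_loss)
```

In a real training loop the same effect is typically achieved by masking the per-token loss before averaging, so that only the selected tokens produce gradients.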