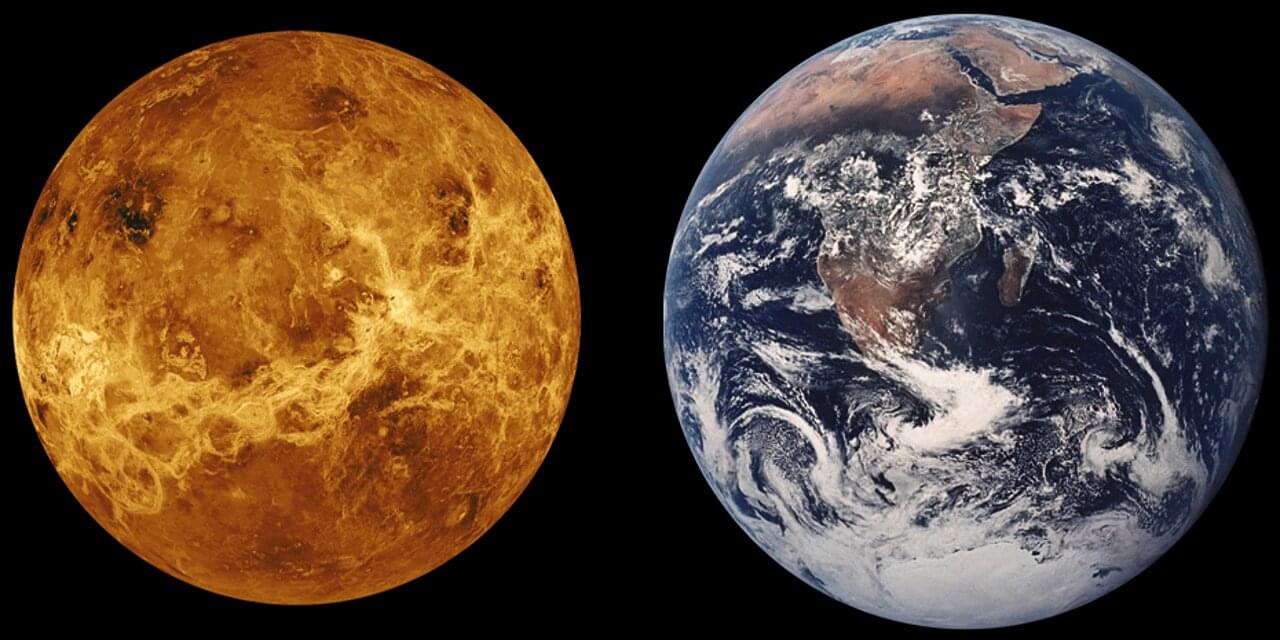

A new study led by UNLV scientists sheds light on how planets, including Earth, formed in our galaxy—and why the life and death of nearby stars are an important piece of the puzzle.

In a paper published in the Astrophysical Journal Letters, researchers at UNLV, in collaboration with scientists from the Open University of Israel, modeled for the first time how the timing of planet formation in the history of the galaxy affects planetary composition and density. The paper is titled “Effect of Galactic Chemical Evolution on Exoplanet Properties.”

“Materials that go into making planets are formed inside of stars that have different lifetimes,” says Jason Steffen, associate professor with the UNLV Department of Physics and Astronomy and the paper’s lead author.

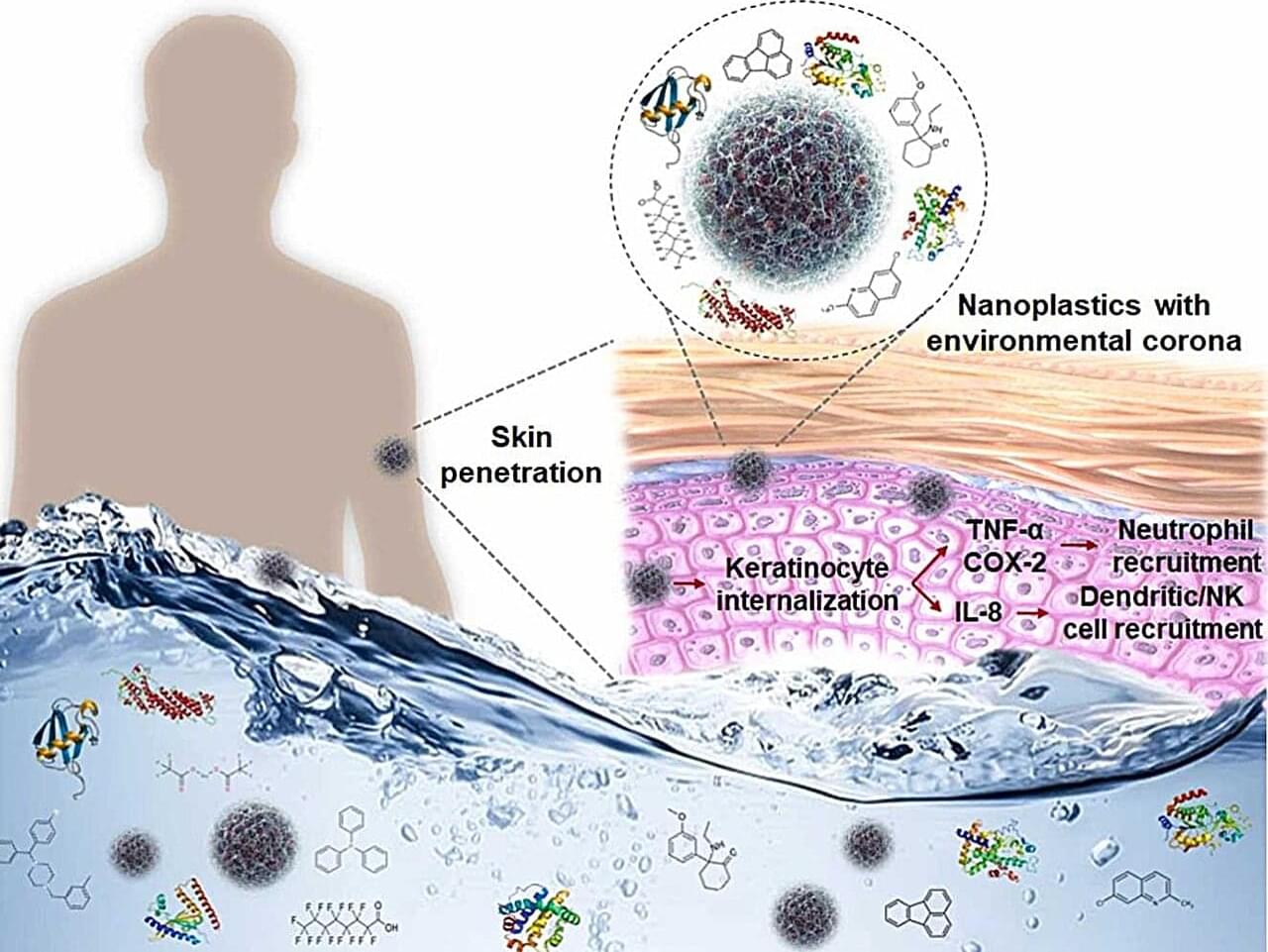

Plastic is ubiquitous in the modern world, and it’s notorious for taking a long time to completely break down in the environment—if it ever does.

But even without breaking down completely, plastic can shed tiny particles, called nanoplastics because of their extremely small size, that scientists are just now starting to consider in long-term health studies.

One of those scientists is Dr. Wei Xu, an associate professor in the Texas A&M College of Veterinary Medicine and Biomedical Sciences’ Department of Veterinary Physiology & Pharmacology. Xu’s current work is focused on what happens when nanoplastics interact with seawater, where they can pick up some curious hitchhikers in the form of chemicals and organic components.

IonQ and D-Wave, two publicly traded U.S. quantum computing companies, are joining as founding members of Q-Alliance, a new initiative in Lombardy described by organizers as the foundation of “the world’s most powerful quantum hub.”

The alliance, formalized in Como with a memorandum of understanding, is designed to accelerate quantum research and industrial applications as part of Italy’s broader digital transformation agenda, according to a news release. It is backed by the Italian government’s Interministerial Committee for Digital Transition and supported by Undersecretary of State Senator Alessio Butti.

Q-Alliance will serve as an open platform connecting universities, research institutions, and private industry. The program aims to train young researchers through scholarships and internships, promote collaboration across scientific disciplines, and position Italy as a European center for quantum development.

She said that the new framework represents a “landmark step,” and that the measure could allow conservationists to consider new ways to address the risks of climate change or test new methods of suppressing disease.

The IUCN — a large group of conservation organizations, governments and Indigenous groups with more than 1,400 members from about 160 countries — meets once every four years. It is the world’s largest network of environmental groups and the authority behind the red list, which tracks threatened species and global biodiversity.

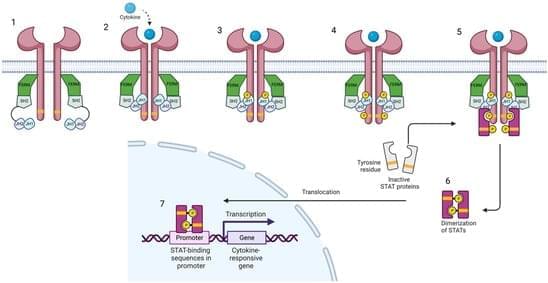

The Janus kinase (Jak)/signal transducer and activator of transcription (STAT) pathways mediate the intracellular signaling of cytokines in a wide spectrum of cellular processes. They participate in physiologic and inflammatory cascades and have become a major focus of research, yielding novel therapies for immune-mediated inflammatory diseases (IMID). Genetic linkage has related dysfunction of tyrosine kinase 2 (Tyk2), the first member of the Jak family to be described, to protection from psoriasis. Furthermore, Tyk2 dysfunction has been related to IMID prevention without an increased risk of serious infections; thus, Tyk2 inhibition has been established as a promising therapeutic target, with multiple Tyk2 inhibitors under development.

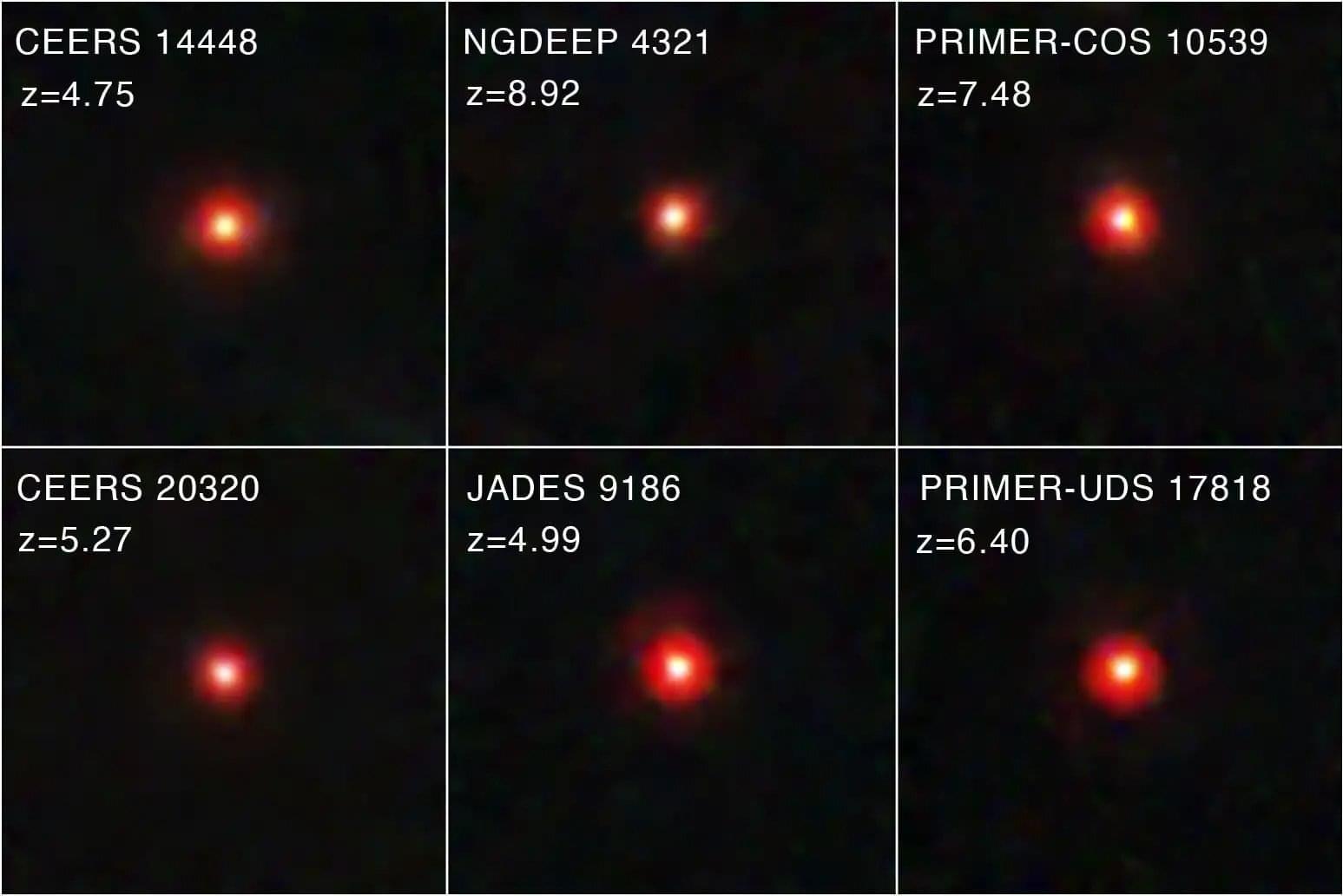

When the James Webb Space Telescope (JWST) began operations, one of its earliest surveys was of galaxies that existed during the very early universe. In December 2022, these observations revealed multiple objects that appeared as “little red dots” (LRDs), fueling speculation as to what they might be. While the current consensus is that these objects are compact, early galaxies, there is still debate over their composition and what makes them so red. On the one hand, there is the “stellar-only” hypothesis, which states that LRDs are red because they are packed with stars and dust.

This means that they could be similar to “dusty galaxies” that are observed in the universe today. On the other hand, there is the “MBH and galaxy” theory, which posits that LRDs are early examples of active galactic nuclei (AGNs) that exist throughout the universe in modern times. Each model has significant implications for how these galaxies subsequently evolved to become the types of galaxies observed more recently.

In a recent paper posted to the arXiv preprint server, an international team of astronomers considered the different scenarios. They concluded that LRDs began as “stellar-only” galaxies that eventually formed the seeds of the supermassive black holes (SMBHs) at the center of galaxies today.

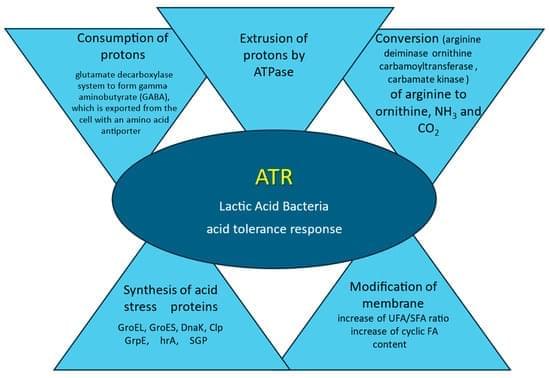

Lactic acid bacteria (LAB) have been used in food manufacturing for centuries because of their many advantageous features. Spontaneous fermentation, in which LAB play a fundamental role, is one of the oldest methods of food preservation. LAB survival and viability in various food products are of great importance. During technological processes, external physicochemical stressors often appear in combination. To ensure the survival of LAB, the physicochemical conditions should be adjusted toward the optimum. LAB strains should be carefully selected for particular food matrices and the technological processes involved. The robustness of LAB to environmental stressors rests on several defense mechanisms, including adaptation and cross-protection.