Researchers in China have stabilized a 100 MW microgrid that integrates a small modular reactor (SMR) with solar power.

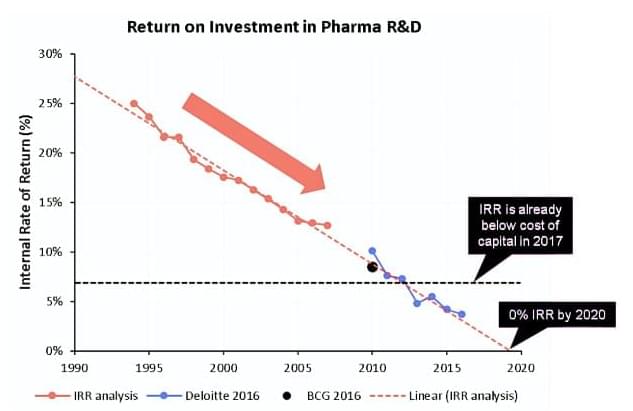

Of the many trends people chase in biotech, the only one that has proved reliable and consistent is declining returns. Even after adjusting for inflation, the number of new drugs approved per $1 billion of R&D spending has halved approximately every nine years since 1950. Deloitte’s forecast internal rate of return (IRR) on R&D for the top 20 pharmaceutical companies fell below the industry’s cost of capital (~7–8%) between 2019 and 2022. In other words, while the industry remained profitable overall, the marginal economics of R&D investment were destroying value rather than creating it. So while other industries have reason to treat the current market downturn as transient, the business of developing medicine faces a more fundamental problem: it is, in effect, shrinking out of existence.
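To make the halving rate above concrete, here is a back-of-the-envelope calculation that assumes a perfectly constant nine-year halving time. It uses only the rate quoted in the text, not Deloitte's figures or any other dataset. Here $N(t)$ is a symbol introduced for illustration: the number of new drugs approved per inflation-adjusted $1 billion of R&D spending in year $t$.

$$
\frac{N(t)}{N(1950)} = 2^{-(t-1950)/9},
\qquad
\frac{N(2010)}{N(1950)} = 2^{-60/9} \approx 2^{-6.67} \approx \frac{1}{100}
$$

Under this simple model, six decades of halving every nine years add up to roughly a hundredfold drop in drug approvals per R&D dollar.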

A large-scale laboratory screening of human-made chemicals has identified 168 chemicals that are toxic to bacteria found in the healthy human gut. These chemicals stifle the growth of gut bacteria thought to be vital for health. The research, which also introduces a new machine learning model, is published in the journal Nature Microbiology.

Most of these chemicals, which are likely to enter our bodies through food, water, and environmental exposure, were not previously thought to have any effect on bacteria.

As the bacteria alter their function to try to resist the chemical pollutants, some also become resistant to antibiotics such as ciprofloxacin. If this happens in the human gut, it could make infections harder to treat.

Every day, our brains transform quick impressions, flashes of inspiration, and painful moments into enduring memories that underpin our sense of self and inform how we navigate the world. But how does the brain decide which bits of information are worth keeping, and for how long?

Now, new research demonstrates that long-term memory is formed by a cascade of molecular “timers” unfolding across brain regions. Using a virtual-reality-based behavioral model in mice, the scientists discovered that long-term memory is orchestrated by key regulators that either promote memories into progressively more lasting forms or demote them until they are forgotten.

The findings, published in Nature, highlight the roles of multiple brain regions in gradually reorganizing memories into more enduring forms, with gates along the way to assess salience and promote durability.

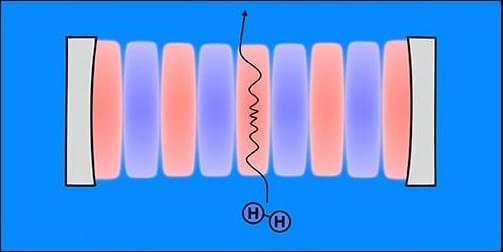

In spaces smaller than a wavelength of light, electric currents jump from point to point and magnetic fields corkscrew through atomic lattices in ways that defy intuition. Scientists have long hoped to observe these phenomena directly.

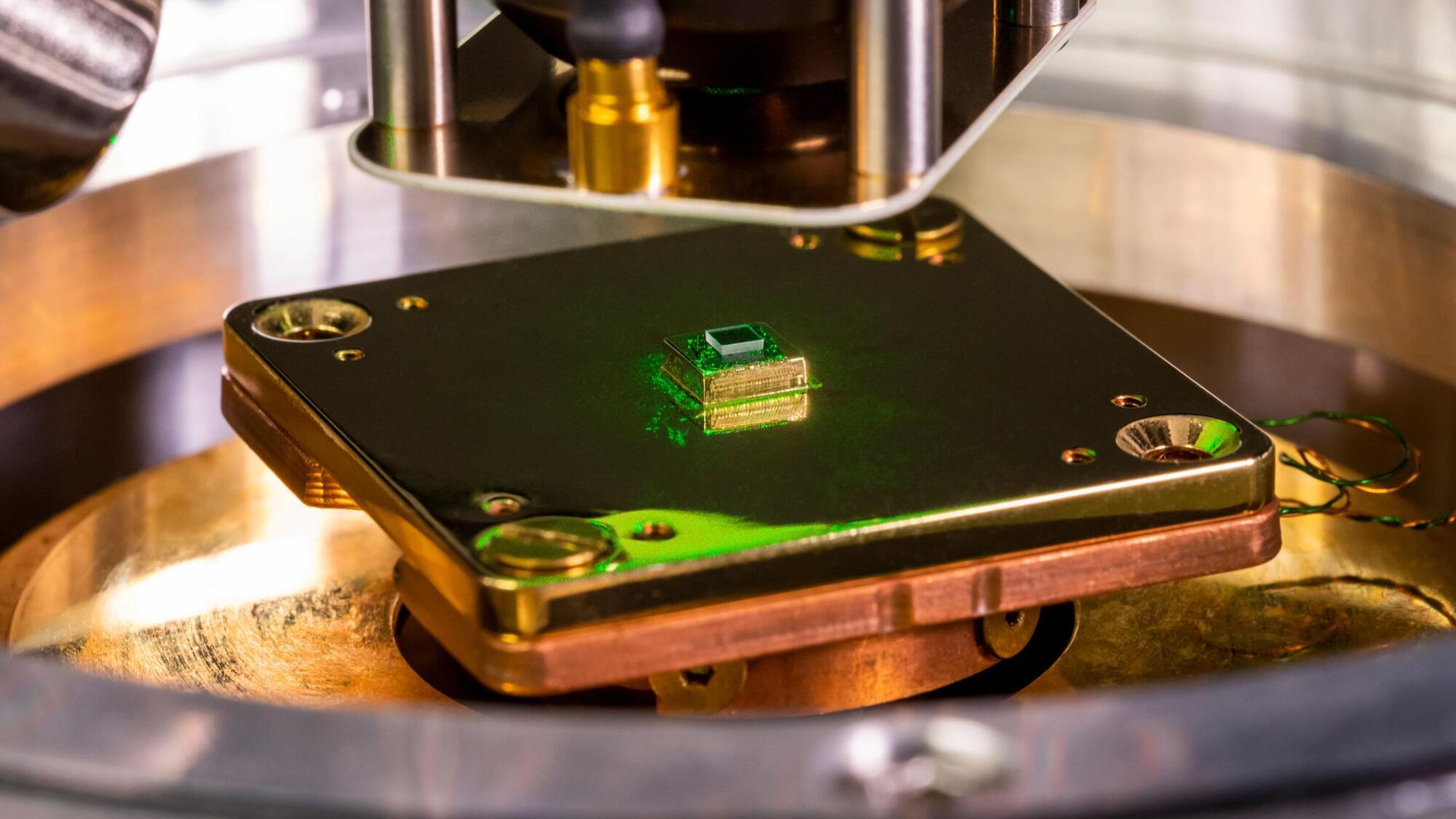

Now Princeton researchers have developed a diamond-based quantum sensor that reveals rich new information about magnetic phenomena at this minute scale. The technique uncovers fluctuations that are beyond the reach of existing instruments and provides key insight into materials such as graphene and superconductors. Superconductors have enabled today’s most advanced medical imaging tools and form the basis of hoped-for technologies like lossless powerlines and levitating trains.

The underlying diamond-based sensing methods have been under development for half a decade. But in a Nov. 27 paper in Nature, the team reported roughly 40 times greater sensitivity than previous techniques.

For decades, physicists have dreamed of a quantum internet: a planetary web of ultrasecure communications and super-powered computation built not from electrical signals, but from the ghostly connections between particles of light.

Now, scientists in Edinburgh say they’ve taken a major step toward turning that vision into something real.

Researchers at Heriot-Watt University have unveiled a prototype quantum network that links two smaller networks into one reconfigurable, eight-user system capable of routing and even teleporting entanglement on demand.

A telescope in Chile has captured a stunning new picture of a grand and graceful cosmic butterfly.

The National Science Foundation’s NOIRLab released the picture Wednesday.

Snapped last month by the Gemini South telescope, the aptly named Butterfly Nebula is 2,500 to 3,800 light-years away in the constellation Scorpius. A single light-year is about 6 trillion miles.