The future of space-based UV/optical/IR astronomy requires ever larger telescopes. The highest-priority astrophysics targets, including Earth-like exoplanets, first-generation stars, and early galaxies, are all extremely faint. This faintness is an ongoing challenge for current missions and defines the opportunity space for next-generation telescopes, because larger apertures are the primary way to address it.

Because mission costs depend strongly on aperture diameter, scaling current space telescope technologies to apertures beyond 10 m does not appear economically viable. Without a breakthrough in scalable technologies for large telescopes, future advances in astrophysics may slow or stall entirely. Cost-effective approaches for scaling space telescopes to larger sizes are therefore needed.

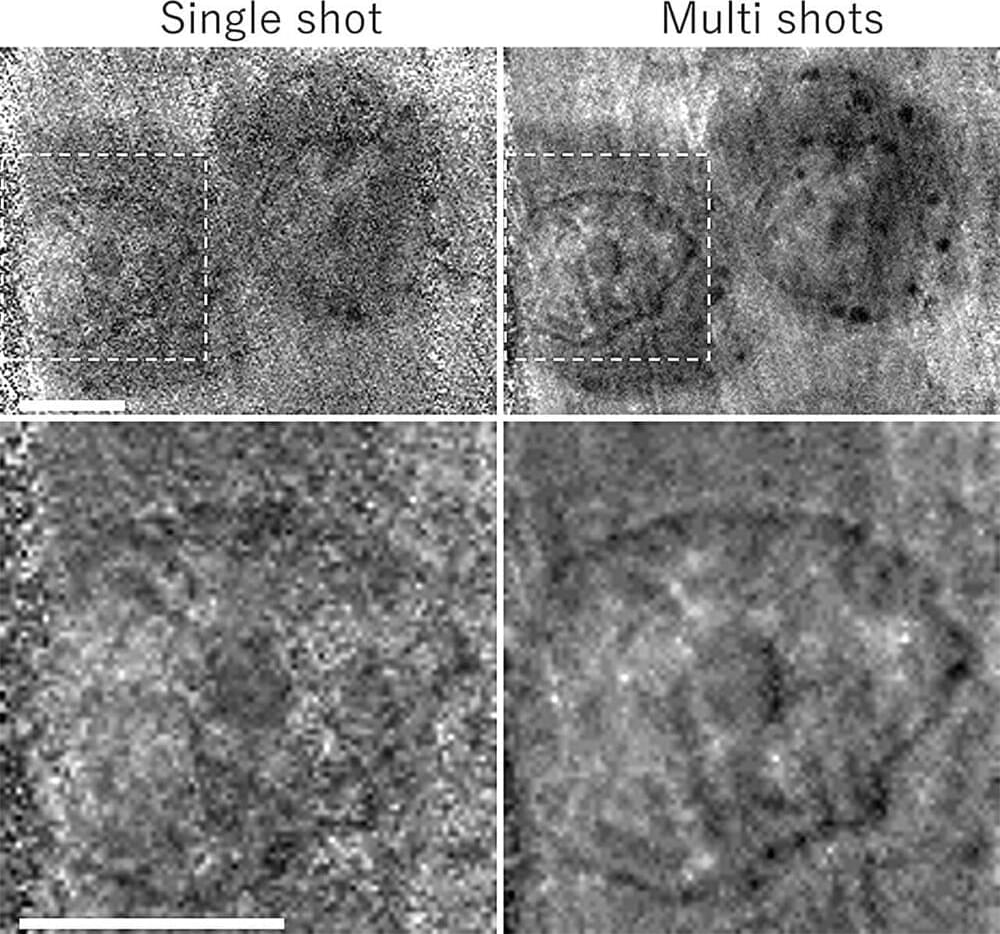

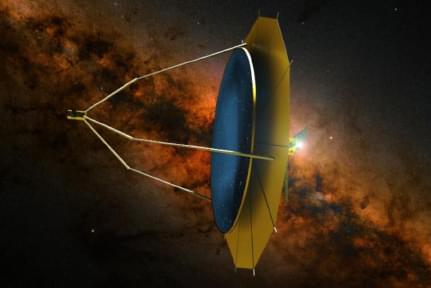

The FLUTE project aims to overcome the limitations of current approaches by paving the way towards space observatories with large-aperture, unsegmented liquid primary mirrors suitable for a variety of astronomical applications. Such mirrors would be created in space using a novel approach based on fluidic shaping in microgravity. This approach has already been demonstrated in a laboratory neutral buoyancy environment, in parabolic microgravity flights, and aboard the International Space Station (ISS).