Still, AGI could be a big problem, because it could have an IQ of a million while ours tops out at around 140.

AI researcher Geoffrey Hinton, widely regarded as the “Godfather of AI,” is worried that AI might take over the world one day.

In the tumultuous week of November 21, OpenAI went through a series of uncontrolled events, each significant in its own right. Few would have predicted where they led: the reinstatement of Sam Altman as CEO of OpenAI, with a new board in tow, all within five days.

The official grounds for the board's charge that Sam Altman lacked transparency, and for the distrust that ultimately led to his ousting, remain unclear. What was apparent was Microsoft's complete backing of Altman and the ensuing lack of support for the original board and its decision. This leaves everyone to ask why a board that had control of the company was unable to effectively oust an executive, given its members' legitimate safety concerns. And why was a structure put in place to mitigate the risk of unilateral control over artificial general intelligence usurped by an investor, the very entity the structure was designed to guard against?

Sky News Australia investigates the dangers artificial intelligence poses to humans and the impending battle humanity faces.

Has OpenAI invented an AI technology with the potential to “threaten humanity”? From some of the recent headlines, you might be inclined to think so.

Reuters and The Information first reported last week that several OpenAI staff members had sent a letter to the AI startup's board of directors. The letter reportedly flagged the "prowess" and "potential danger" of an internal research project known as "Q*." According to the reporting, the project could solve certain math problems, albeit only at grade-school level. In the researchers' opinion, however, it had a chance of building toward an elusive technical breakthrough.

There's now debate as to whether OpenAI's board ever received such a letter; The Verge cites a source suggesting that it didn't. But framing aside, Q* might not be as monumental, or as threatening, as it sounds. It might not even be new.

At the most recent H+ Academy discussion and debate on "anti-transhumanist" writings, several transhumanists suggested that we reclaim "transhumanism" from the obfuscations and misrepresentations of its original meaning as a philosophy and worldview.

How did this happen? Over the years, there have been many "opinions" about what transhumanism is or isn't. Many of these are personal interpretations of the philosophy, and not all of them are accurate. Diversity is welcome and new ideas are highly valued, but the philosophy of transhumanism does have principles that form the core of its meaning. With so much leeway for opinion, interpretations can drift in directions that run counter to transhumanism. Some of the more extreme opinions have provoked a flood of anti-transhumanist bias and even hate. This is not what we want.

What can we do? We need to make it very clear what transhumanism is and is not. And we need to state this publicly and make it available to everyone.

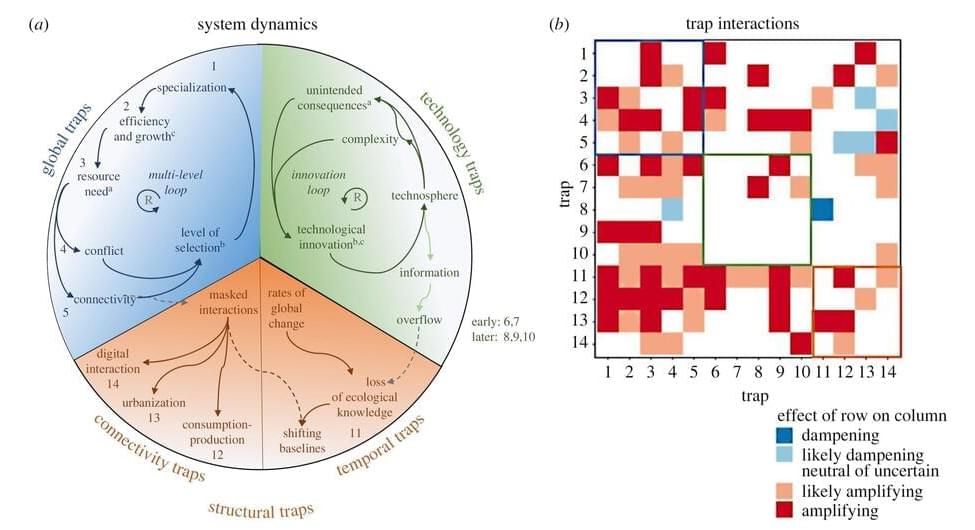

Humankind on the verge of evolutionary traps, a new study

For the first time, scientists have used the concept of evolutionary traps on human societies at large. They find that humankind risks getting stuck in 14 evolutionary dead ends, ranging from global climate tipping points to misaligned artificial intelligence, chemical pollution, and accelerating infectious diseases.

The evolution of humankind has been an extraordinary success story. But the Anthropocene, the proposed geological epoch shaped by us humans, is showing more and more cracks. Multiple global crises have started to occur simultaneously, including the COVID-19 pandemic, climate change, food insecurity, financial crises, and conflicts. Scientists call this a polycrisis.

"Humans are incredibly creative as a species. We are able to innovate and adapt to many circumstances and can cooperate on surprisingly large scales. But these capabilities turn out to have unintentional consequences. Simply speaking, you could say that the human species has been too successful and, in some ways, too smart for its own future good," says Peter Søgaard Jørgensen. He is a researcher at the Stockholm Resilience Centre at Stockholm University and at the Royal Swedish Academy of Sciences' Global Economic Dynamics and the Biosphere program and Anthropocene laboratory.

Misaligned AI is not the trap you should worry about most (yet).


The Anthropocene era: success and challenges.

Musk cofounded OpenAI, the company behind the viral chatbot ChatGPT, in 2015 alongside Altman and others. Later, Musk proposed that he take control of the startup so it could catch up with tech giants like Google, but Altman and the other cofounders rejected the proposal. Musk walked away in February 2018 and backed out of a "massive planned donation."

Now Musk's new company, xAI, is on a mission to create an AGI, or artificial general intelligence, that can "understand the universe." The billionaire said this in a nearly two-hour-long Twitter Spaces talk on Friday. An AGI is a theoretical type of AI with human-like cognitive abilities, and it is widely expected to take at least another decade to develop.

Musk's new company debuted only days after OpenAI announced, in a July 5 blog post, that it was forming a team to work out how to steer and control superintelligent AI. Musk said xAI is "definitely in competition" with OpenAI.