A one-way trip to Mars with conventional chemical rockets could take up to nine months. That is a long time for humans to spend in a spaceship, exposed to radiation and other hazards. That’s one reason NASA, other space agencies, universities, and private industry are pursuing different rocket technologies. Here’s a look at them.


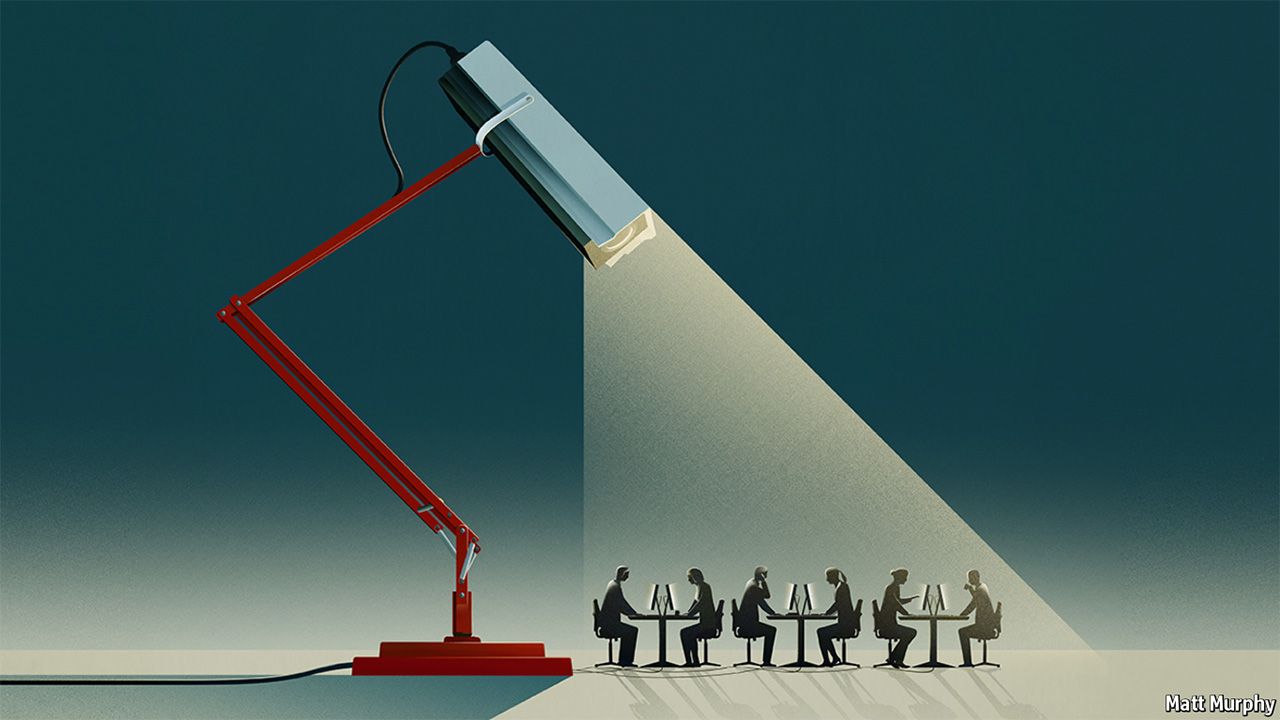

The workplace of the future

The march of AI into the workplace calls for trade-offs between privacy and performance. A fairer, more productive workforce is a prize worth having, but not if it shackles and dehumanises employees. Striking a balance will require thought, a willingness for both employers and employees to adapt, and a strong dose of humanity.

ARTIFICIAL intelligence (AI) is barging its way into business. As our special report this week explains, firms of all types are harnessing AI to forecast demand, hire workers and deal with customers. In 2017 companies spent around $22bn on AI-related mergers and acquisitions, about 26 times more than in 2015. The McKinsey Global Institute, a think-tank within a consultancy, reckons that just applying AI to marketing, sales and supply chains could create economic value, including profits and efficiencies, of $2.7trn over the next 20 years. Google’s boss has gone so far as to declare that AI will do more for humanity than fire or electricity.

Such grandiose forecasts kindle anxiety as well as hope. Many fret that AI could destroy jobs faster than it creates them. Barriers to entry from owning and generating data could lead to a handful of dominant firms in every industry.


Tiangong-1: How to follow the space lab’s decaying orbit and reentry

With the space station likely to fall on April Fools’ Day, it’s important to know whom to follow for reliable information.

Earth Likely Had Water Before Moon-Forming Smashup

New research suggests that the moon-forming collision between Earth and another body, early in the solar system’s history, thoroughly mixed the two objects, and that water was already present on Earth before the smash-up.

Asteroids to serve as refuelling stations for space exploration

Many asteroids are rich in minerals, metals and water, making them potential life support systems for humans venturing deep into the solar system.

“Asteroids contain all the materials necessary to enable human activity,” says Peter Stibrany, chief business developer and strategist of California-based Deep Space Industries (DSI). “Just those near Earth could sustain more than 10bn people.”

Moreover, their relatively small mass means their gravitational field is weak, so in this respect, at least, they are much easier than larger bodies such as the moon to land on and leave, he argues.
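To put rough numbers on the point about weak gravity, here is a minimal sketch comparing escape velocities using the standard formula v = sqrt(2GM/R). The moon's mass and radius are well-known values. The asteroid is entirely hypothetical: a 500-metre-wide sphere with an assumed density of 2,000 kg per cubic metre, chosen for illustration and not taken from DSI or the article.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2


def escape_velocity(mass_kg, radius_m):
    """Speed needed to leave the surface of a spherical body, in m/s."""
    return math.sqrt(2 * G * mass_kg / radius_m)


def sphere_mass(radius_m, density_kg_m3):
    """Mass of a uniform sphere, in kg."""
    return 4 / 3 * math.pi * radius_m**3 * density_kg_m3


# The moon: standard reference values.
moon_v = escape_velocity(7.342e22, 1.7374e6)

# Hypothetical asteroid: radius and density are assumptions for illustration.
asteroid_radius_m = 250.0
asteroid_density = 2000.0
asteroid_v = escape_velocity(
    sphere_mass(asteroid_radius_m, asteroid_density), asteroid_radius_m
)

print(f"Moon: {moon_v:.0f} m/s, asteroid: {asteroid_v:.2f} m/s")
```

Under these assumptions the moon comes out at roughly 2.4 km per second, while the small asteroid needs well under 1 metre per second, which is the sense in which such bodies are easier to land on and leave.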

Hormone Replacement: Can It Treat Aging Skin?

Aging skin is a major concern for menopausal women, whose hormone levels are changing, because it affects self-confidence, psychological wellbeing and intimate relationships. It is also the one life challenge on which women do not hold back on spending. The anti-aging industry, which is worth $50 billion a year, knows this very well!

When women notice more wrinkles, increased sagging, dullness and dryness, and feel less attractive, they usually opt for fillers, Botox, threads, chemical peels, etc. However, the question remains: how long can any of these sustain rapidly aging skin? Scientific evidence suggests that women lose 30% of their collagen content in the first five menopausal years. Recently, it has been proposed that using bioidentical HRT during this critical period can substantially slow menopausal skin aging.

Furthermore, in the European anti-aging market, “skin HRT” is already being used to prevent rapid changes in menopausal skin by targeting oestrogen receptors. “Skin HRT” is used as an anti-aging cosmeceutical, and because it is not absorbed systemically, it can also be used safely in women for whom HRT is contraindicated.

Rare galaxy found without any dark matter

Millions of light-years from Earth, there’s a galaxy that is completely devoid of dark matter — the mysterious, unseen material that is thought to permeate the Universe. Instead, the galaxy seems to be made up of just regular ol’ matter, the kind that comprises stars, planets, and dust. That makes this galaxy a rare find, and its discovery opens up new possibilities for how dark matter is distributed throughout the cosmos.

No one knows what dark matter is. True to its name, the material doesn’t emit light, so we’ve never detected it directly. All scientists know is that it’s out there based on their observations of how galaxies and stars move. Some unseen substance is affecting these deep-space objects, filling up the space between stars and clusters of galaxies. And there seems to be a lot of it. Dark matter is thought to make up 27 percent of all the mass and energy of the Universe. The matter we can see — the atoms that make up you and me — accounts for just 5 percent.

This Supposed Dark Matter Evidence Won’t Seem to Go Away

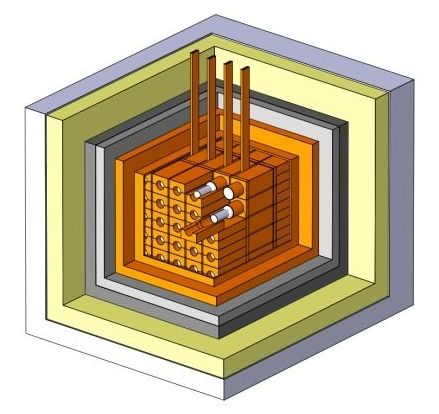

This past June, 500 pounds of a specially fabricated crystal buried in an Italian mountain seemed to glow just a little brighter. It wasn’t the first time, nor the last—every year, the signal seems to increase and decrease like clockwork as the Earth orbits the Sun.

Some people think the crystal has spotted a signature of elusive dark matter particles.

Scientists from an Italian experiment called DAMA/LIBRA announced at the XLIX meeting of the Gran Sasso Scientific Committee that after another six years of observation, the annual modulation of their crystal’s signal is still present. This experiment was specially built to detect dark matter, and indeed, DAMA/LIBRA’s scientists are convinced they’ve spotted the elusive dark matter particle. Others are more skeptical.
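A signal that rises and falls once a year can be pictured as a constant baseline plus a small cosine term. The sketch below is only an illustration of that shape, not DAMA/LIBRA's analysis: the function name, the baseline, the amplitude and the early-June peak day are all assumed values chosen to match the article's description of a signal that brightens in June.

```python
import math


def modulated_rate(day_of_year, baseline=1.0, amplitude=0.01,
                   peak_day=152.5, period_days=365.25):
    """Toy annual modulation: baseline plus a cosine peaking on peak_day.

    All default values are illustrative assumptions, not measured numbers.
    """
    phase = 2 * math.pi * (day_of_year - peak_day) / period_days
    return baseline + amplitude * math.cos(phase)


# Sample the toy signal at the assumed peak and half a year later.
at_peak = modulated_rate(152.5)
at_trough = modulated_rate(152.5 + 365.25 / 2)
print(f"peak: {at_peak:.3f}, trough: {at_trough:.3f}")
```

In this toy model the rate is highest on the assumed peak day and lowest six months later, repeating every year, which is the "like clockwork" pattern described above.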

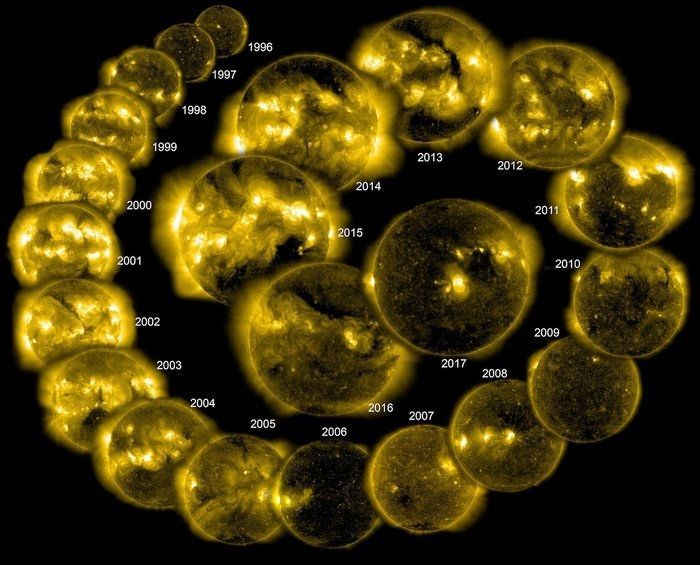

New image shows how the Sun changes over a 22-year cycle

Out of billions of stars in the Milky Way galaxy, there’s one in particular, orbiting 25,000 light-years from the galactic core, that affects Earth day by day, moment by moment. That star, of course, is the sun. While the sun’s activity cycle has been tracked for about two and a half centuries, the use of space-based telescopes offers a new and unique perspective of our nearest star.

The Solar and Heliospheric Observatory (SOHO), a collaboration between NASA and the European Space Agency (ESA), has been in space for more than 22 years, the average length of one complete solar magnetic cycle, according to an image caption from ESA. In the new image, SOHO researchers pulled together 22 images of the sun, taken each spring over the course of a full solar cycle. When the sun is at its most active, strong magnetic fields show up as bright spots in the sun’s outer atmosphere, called the corona. During active periods, dark sunspots also appear where concentrated magnetic fields reduce the sun’s surface temperature.

Throughout the sun’s magnetic cycles, the polarity of the sun’s magnetic field gradually flips. This initial phase takes 11 years, and after another 11 years, the magnetic field’s orientation returns to where it began. Monitoring the entire 22-year cycle provided significant data regarding the interaction between the sun’s activity and Earth, improved space-weather forecasting capabilities and more, ESA officials said in the caption. SOHO has revealed much about the sun itself, capturing “sunquakes,” discovering waves traveling through the corona and collecting details about the charged particles it propels into space, called the solar wind.