To me, it’s all common sense. If you step back and look at the technology landscape as a whole, AI included, you start to see the barriers that spotlight just how much hype surrounds AI.

Take hacking, for example. If we truly had AI as advanced as it has been promoted, wouldn’t it make sense for researchers to go after the $120 billion money pit that is cybersecurity? Solving it would earn them billions to reinvest in their emerging AI tech. It would also protect their AI investment from consumer pushback, since consumers are unlikely to trust AI they believe can be hacked. So, I usually tread lightly around overhyped technologies.

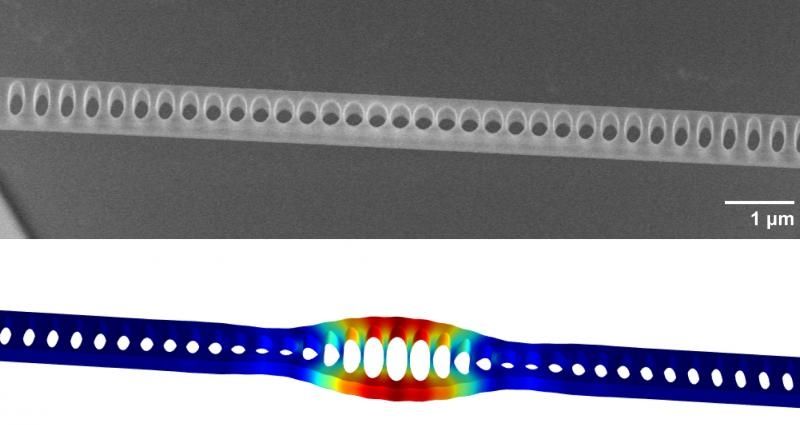

I do see great possibilities in quantum computing, and I have seen some amazing things and real promise from it. However, we will not realize its full impact and potential for another 7 years. Even so, I will admit I see more promise in it than in the current AI landscape, which is built on traditional digital technology that hackers have already proven to be broken.

Do you “believe” in AI?