Deals get remote islanders online, but fears linger over stranded investment, data security.

SHAUN TURTON and RURIKA IMAHASHI.

Scientists have tapped into the Summit supercomputer to study an elaborate molecular pathway called nucleotide excision repair (NER). The research reveals how damaged strands of DNA are repaired through this pathway.

NER’s protein components can change shape to perform different repair functions on broken strands of DNA.

A team of scientists from Georgia State University built a computer model of a critical NER component called the pre-incision complex (PInC) that plays a key role in regulating DNA repair processes in the latter stages of the NER pathway.

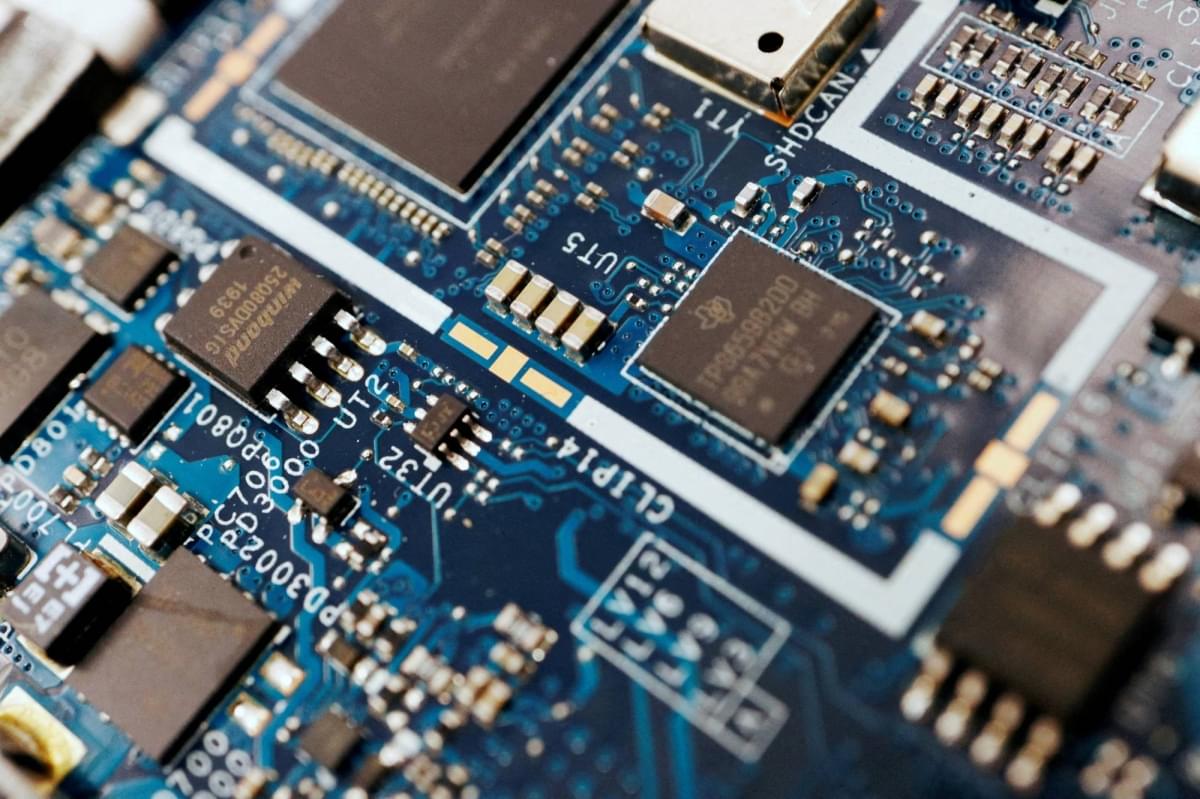

The battle for artificial intelligence supremacy hinges on microchips. But the semiconductor sector that produces them has a dirty secret: It’s a major source of chemicals linked to cancer and other health problems.

Global chip sales surged more than 19% to roughly $628 billion last year, according to the Semiconductor Industry Association, which forecasts double-digit growth again in 2025. That’s adding urgency to reducing the impacts of so-called “forever chemicals” (which are also used to make firefighting foam, nonstick pans, raincoats and other everyday items), as are regulators in the U.S. and Europe, who are beginning to enforce pollution limits for municipal water supplies. In response to this growing demand, a wave of startups is offering potential solutions that won’t cut the chemicals out of the supply chain but can destroy them.

Per- and polyfluoroalkyl substances, or PFAS, have been detected in every corner of the planet, from rainwater in the Himalayas to whales off the Faroe Islands, and in the blood of almost every human tested. PFAS are known as forever chemicals because the properties that make them so useful also make them persistent in the environment, and scientists have increasingly linked them to health issues including obesity, infertility and cancer.

One belief underlying the power-hungry approach to machine learning advanced by OpenAI and Mistral AI is that an artificial intelligence model must review its entire dataset before spitting out new insights.

Sepp Hochreiter, an early pioneer of the technology who runs an AI lab at Johannes Kepler University in Linz, Austria, has a different view, one that requires far less cash and computing power. He’s interested in teaching AI models how to efficiently forget.

Hochreiter holds a special place in the world of artificial intelligence, having scaled the technology’s highest peaks long before most computer scientists. As a university student in Munich during the 1990s, he came up with the conceptual framework that underpinned the first generation of nimble AI models used by Alphabet, Apple and Amazon.

The European Commission is raising $20 billion to construct four “AI gigafactories” as part of Europe’s strategy to catch up with the U.S. and China on artificial intelligence, but some industry experts question whether it makes sense to build them.

The plan for the large public access data centers, unveiled by European Commission President Ursula von der Leyen last month, will face challenges ranging from obtaining chips to finding suitable sites and electricity.

“Even if we would build such a big computing factory in Europe, and even if we would train a model on that infrastructure, once it’s ready, what do we do with it?” said Bertin Martens, of economic think tank Bruegel. It’s a chicken-and-egg problem. The hope is that new local firms such as France’s Nvidia-backed Mistral startup will grow and use them to create AI models that operate in line with EU AI safety and data protection rules, which are stricter than those in the U.S. or China.

Japan is home to a wide variety of train stations, from tiny countryside sheds to sprawling urban complexes, stations with their own wineries and ones with giant ancient relics whose eyes glow. It’s gotten to the point where it’s really hard to be “the first” anything when it comes to train stations, but JR West has managed it with the first-ever 3D-printed station building.

This new structure is scheduled to replace the current one at Hatsushima Station on the JR Kisei Main Line in Arida City, Wakayama Prefecture. As at many relatively rural stations in Japan, the existing wooden structure is aging and in need of replacement.

The new building will be roughly the same size, covering 10 square meters (108 square feet) and made from a more durable reinforced concrete. The foundation and exterior of the building will be printed off-site by Osaka-based 3D-printer housing company Serendix.

Since ChatGPT burst onto the scene in late 2022, generative artificial intelligence (GenAI) models have been vying for the lead, with the U.S. and China as hotbeds for the technology.

GenAI tools are able to create images, videos, or written works as well as answer questions or tend to online tasks based on simple prompts.

These AI assistants stand out for their popularity and sophistication.