Could there be billions of Earth-like planets in our galaxy? A groundbreaking study by researchers from the University of British Columbia (UBC) estimates that the Milky Way might host as many as 6 billion planets similar to Earth. This calculation is based on data collected during NASA’s Kepler mission, which observed over 200,000 stars from 2009 to 2018.

A doctoral candidate’s skill, patience and dedication resulted in a groundbreaking spider silk discovery.

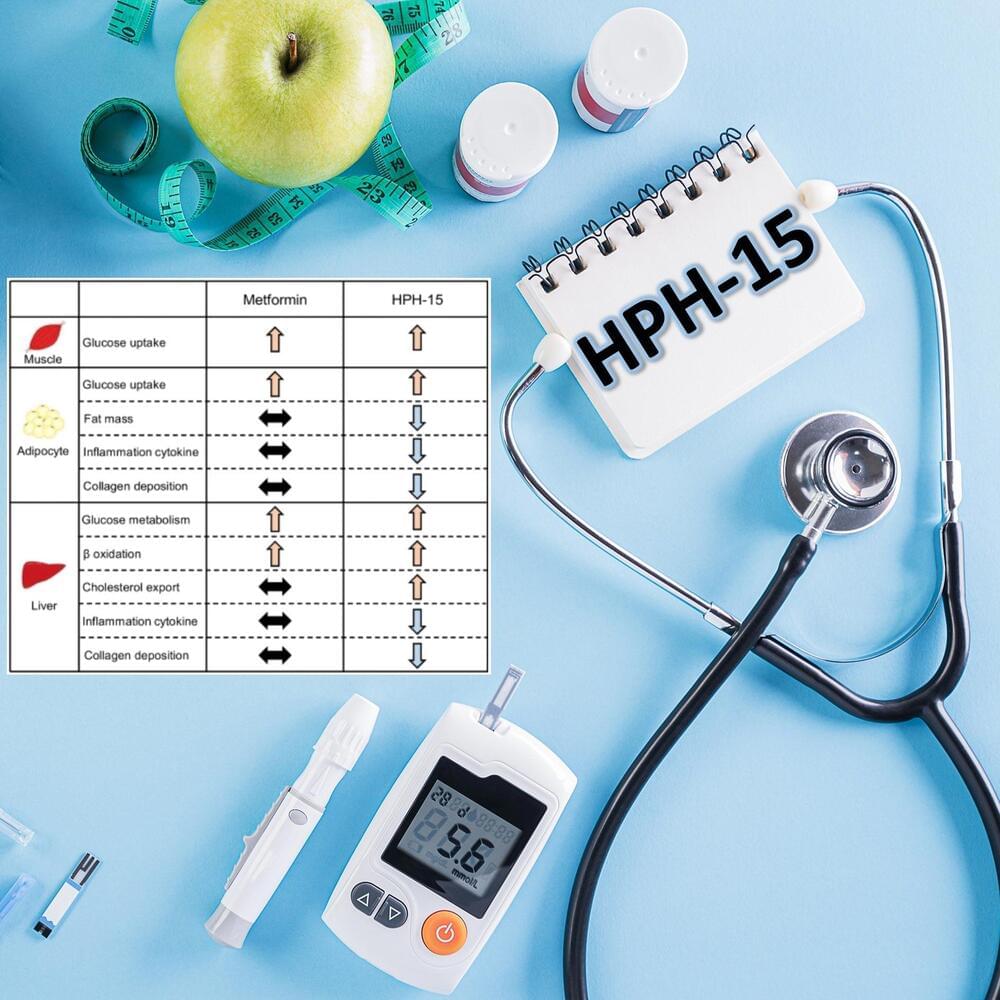

HPH-15, a compound developed by Kumamoto University, reduces blood glucose and fat accumulation more effectively than metformin, with added benefits like antifibrotic properties and a safer profile. This innovation may revolutionize diabetes treatment.

Scientists at Kumamoto University have unveiled a novel compound, HPH-15, which has dual effects: reducing blood glucose levels and combating fat accumulation. This breakthrough represents a significant advance in diabetes treatment.

Type 2 diabetes, a condition affecting millions worldwide, is often accompanied by complications such as fatty liver and insulin resistance, posing challenges for current treatment methods. The research team, led by Visiting Associate Professor Hiroshi Tateishi and Professor Eiichi Araki, has identified HPH-15 as a promising alternative to existing medications like metformin.

In the future we can envision FASQ* machines, Fault-Tolerant Application-Scale Quantum computers that can run a wide variety of useful applications, but that is still a rather distant goal. What term captures the path along the road from NISQ to FASQ? Various terms retaining the ISQ format of NISQ have been proposed [here, here, here], but I would prefer to leave ISQ behind as we move forward, so I’ll speak instead of a megaquop or gigaquop machine and so on, meaning one capable of executing a million or a billion quantum operations, but with the understanding that mega means not precisely a million but somewhere in the vicinity of a million.

Naively, a megaquop machine would have an error rate per logical gate of order $10^{-6}$, which we don’t expect to achieve anytime soon without using error correction and fault-tolerant operation. Or maybe the logical error rate could be somewhat larger, as we expect to be able to boost the simulable circuit volume using various error mitigation techniques in the megaquop era just as we do in the NISQ era. Importantly, the megaquop machine would be capable of achieving some tasks beyond the reach of classical, NISQ, or analog quantum devices, for example by executing circuits with of order 100 logical qubits and circuit depth of order 10,000.
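A back-of-the-envelope check of the numbers in this excerpt, sketched in Python. It rests on two simplifying assumptions that are ours, not Preskill’s: circuit volume is taken as logical qubits times depth, and logical errors are treated as independent, so a circuit of $N$ operations at error rate $p$ runs without error with probability $(1 - p)^N \approx e^{-Np}$. The function names are hypothetical.

```python
import math

def circuit_volume(logical_qubits: int, depth: int) -> int:
    """Logical operations in a circuit, simplified as qubits x depth
    (assumes every qubit is acted on in every layer)."""
    return logical_qubits * depth

def success_probability(num_ops: int, error_per_op: float) -> float:
    """Probability that no logical error occurs, assuming each operation
    fails independently with the same probability."""
    return (1.0 - error_per_op) ** num_ops

p = 1e-6  # logical error rate per gate, "of order 10^-6"
for label, ops in [("megaquop", circuit_volume(100, 10_000)),
                   ("gigaquop", 10**9)]:
    expected_errors = ops * p  # mean number of logical errors per run
    print(f"{label}: {ops:,} ops, expected errors = {expected_errors:g}, "
          f"P(no error) = {success_probability(ops, p):.3g}")

# Rule of thumb: for small p, (1 - p)**N is close to exp(-N * p).
print(f"exp(-1) = {math.exp(-1):.3g}")
```

With $p$ fixed at $10^{-6}$, the 100-qubit, depth-10,000 circuit runs error-free roughly 37% of the time, while a billion-operation circuit essentially never does, which illustrates why each step up in scale calls for a correspondingly lower logical error rate.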

- John Preskill.

Excerpt from the transcript of his talk at the Q2B Conference.

High overhead lies a layer of the atmosphere called the mesosphere, roughly 31 to 55 miles (about 50 to 90 kilometers) above the ground.

The mesosphere might seem pretty far removed from everyday concerns. Still, it can be disturbed by severe weather far below.

On the day Hurricane Helene hit, NASA’s instruments captured signs of atmospheric gravity waves. These are unrelated to the gravitational waves in space-time that Einstein predicted; they are ripples in the air set off by disturbances such as hurricanes.

Trees and other kinds of vegetation have proven to be remarkably resilient to the intense radiation around the nuclear disaster zone.

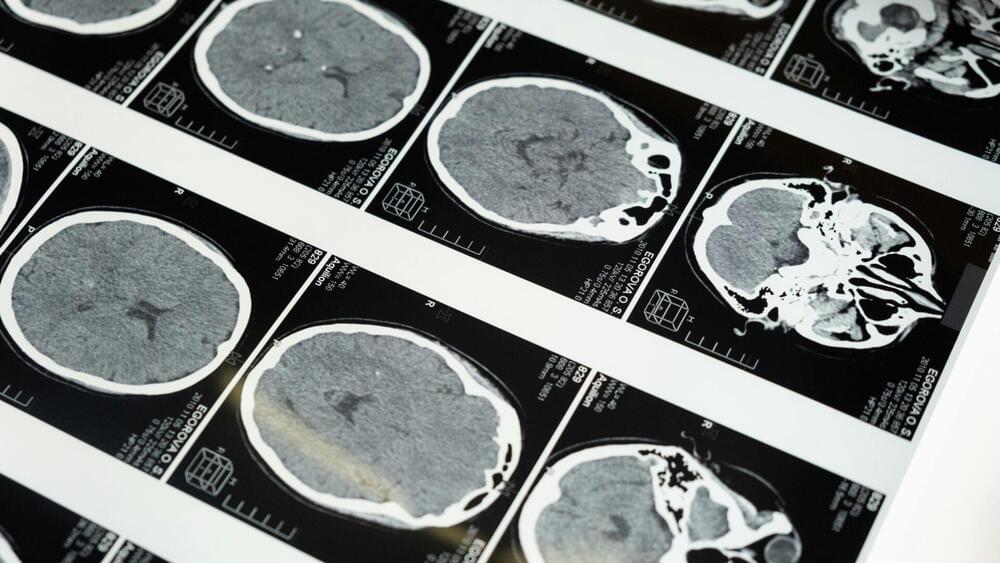

Researchers at UCLA have developed a new AI model that can expertly analyze 3D medical images of diseases in a fraction of the time it would otherwise take a human clinical specialist.

The deep-learning framework, named SLIViT (SLice Integration by Vision Transformer), analyzes images from different imaging modalities, including retinal scans, ultrasound videos, CTs, MRIs, and others, identifying potential disease-risk biomarkers.
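To make the idea behind the name concrete, here is a toy Python sketch of slice integration in general: a 3D volume is split into 2D slices, each slice is turned into a feature vector, and scaled dot-product self-attention lets the slices exchange information before they are pooled into a single volume-level vector. Everything here is a hypothetical simplification: the "feature extractor" is just the mean and standard deviation of pixel values, the attention uses untrained identity projections, and the function names are illustrative. This is not SLIViT’s actual architecture, which relies on learned, pre-trained and fine-tuned networks.

```python
import math
from typing import List

Matrix = List[List[float]]

def slice_features(slice_2d: Matrix) -> List[float]:
    """Toy stand-in for a 2D feature extractor: mean and std of pixels."""
    pixels = [v for row in slice_2d for v in row]
    mean = sum(pixels) / len(pixels)
    var = sum((v - mean) ** 2 for v in pixels) / len(pixels)
    return [mean, math.sqrt(var)]

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens: List[List[float]]) -> List[List[float]]:
    """Single-head scaled dot-product self-attention, with identity
    query/key/value projections (no learned weights)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in tokens]
        weights = softmax(scores)
        # Each output is a weighted average of all slice tokens.
        out.append([sum(w * v[j] for w, v in zip(weights, tokens))
                    for j in range(d)])
    return out

def integrate_volume(volume: List[Matrix]) -> List[float]:
    """Embed each slice, let slices attend to one another, then mean-pool."""
    tokens = [slice_features(s) for s in volume]
    mixed = self_attention(tokens)
    n = len(mixed)
    return [sum(t[j] for t in mixed) / n for j in range(len(mixed[0]))]

# A tiny made-up 3-slice "volume" of 2x2 images.
volume = [
    [[0.0, 0.1], [0.2, 0.1]],
    [[0.5, 0.6], [0.4, 0.5]],
    [[0.9, 0.8], [1.0, 0.9]],
]
print(integrate_volume(volume))
```

In a real model, the pooled vector would feed a trained prediction head; here it only shows how per-slice information can be combined into one summary of the whole volume.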

Dr. Eran Halperin, a computational medicine expert and professor at UCLA who led the study, said the model is highly accurate across a wide variety of diseases, outperforming many existing, disease-specific foundation models. It uses a novel pre-training and fine-tuning method that relies on large, accessible public data sets. As a result, Halperin believes that the model can be deployed at relatively low cost to identify different disease biomarkers, democratizing expert-level medical imaging analysis.

Summary: SUMO proteins play a key role in activating dormant neural stem cells, vital for brain repair and regeneration. This finding, centered on a process called SUMOylation, reveals how neural stem cells can be “woken up” to aid in brain recovery, offering potential treatments for neurodegenerative diseases.

SUMO proteins regulate neural stem cell reactivation by modifying the Hippo pathway, a signaling pathway crucial for cell growth and repair. The study’s insights lay the groundwork for developing regenerative therapies to combat conditions like Alzheimer’s and Parkinson’s disease.

3,000-year-old clay tablets show that some associations between emotion and parts of the body have remained the same for millennia.