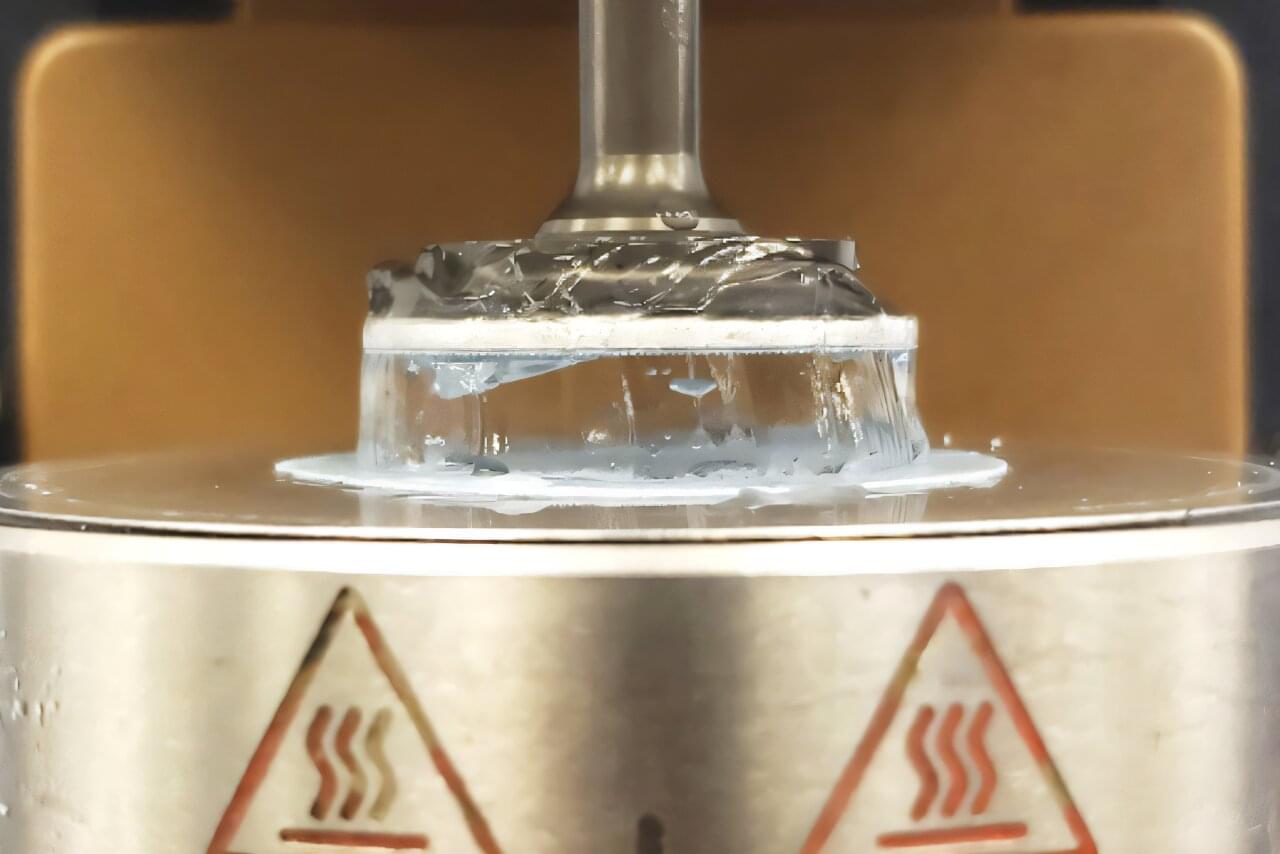

A research team from Aarhus University, Denmark, has measured and explained the exceptionally low thermal conductivity of the crystalline material AgGaGe3Se8. Despite its ordered structure, the material conducts heat like a glass, which makes it one of the least heat-conductive crystalline solids known to date.

At room temperature, AgGaGe3Se8 has a thermal conductivity of just 0.2 watts per meter-kelvin (W/(m·K)). That is roughly one third the value for water and one fifth the value for typical silica glass. The material, composed of silver (Ag), gallium (Ga), germanium (Ge), and selenium (Se), has previously been studied for its optical properties.

Now, for the first time, researchers from iMAT (the Aarhus University Center for Integrated Materials Research) have measured its thermal transport properties and identified the structural origin of its unusually low thermal conductivity.