Yoga and meditation aren’t just good for your mind and body; they’re good for your DNA, too!


Carbon nanotubes found in children’s lungs for the first time

By Sam Wong

Carbon nanotubes have turned up in the lungs of children living in Paris – the first time they have been detected in humans.

Incredibly strong, light and conductive, nanotubes have shown great potential in areas such as computing, clothing and healthcare technology. Nevertheless, there has been some concern over their use after mouse studies showed that injected nanotubes can cause immune reactions similar to those produced by asbestos.

DARPA’s New Project Is Investing Millions in Brain-Machine Interface Tech

When Elon Musk and DARPA both hop aboard the cyborg hype train, you know brain-machine interfaces (BMIs) are about to achieve the impossible.

BMIs, already the stuff of science fiction, facilitate crosstalk between biological wetware and external computers, turning human users into literal cyborgs. Yet mind-controlled robotic arms, microelectrode “nerve patches” and “memory Band-Aids” are still purely experimental medical treatments for people with nervous system impairments.

With the Next-Generation Nonsurgical Neurotechnology (N3) program, DARPA is looking to expand BMIs to the military. This month, the project tapped six academic teams to engineer radically different BMIs that hook machines up to the brains of able-bodied soldiers. The goal is to link brain and machine without any surgery at all, while keeping other biological interventions to a minimum.

Chinese scientists make breakthrough in injectable cartilage

(ECNS) — The Chinese team that constructed the world’s first ear in a lab and grafted it onto a patient last year has made new progress by developing injectable cartilage that can be used in human tissue repair and plastic surgery.

The regeneration technique involves taking a small piece of cartilage tissue from behind a patient’s ear, culturing seed cells from it in the lab and expanding them until there are enough cells to fill a biodegradable mould made by 3D printing.

Professor Cao Yilin, director of the National Tissue Engineering Research Center, said the technique marks a breakthrough over previous technology because the cultured cells can be injected into parts of a patient’s body, such as the nose and chin, where they continue to develop into normal tissue. This makes it a minimally invasive treatment that resembles natural growth.

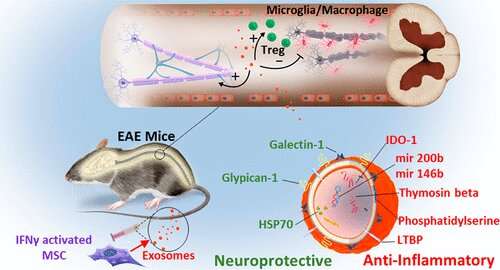

Supercharging adoptive T cell therapy to overcome solid tumor–induced immunosuppression

The development of new cancer immunotherapies, including checkpoint blockade and chimeric antigen receptor (CAR) T cell therapy, has revolutionized cancer treatment. CAR T cells have shown tremendous success in certain B cell malignancies, resulting in U.S. Food and Drug Administration (FDA) approval of this approach for certain types of leukemia and lymphoma. However, responses against solid cancers have been less successful to date. Approaches that modulate the immunosuppressive tumor microenvironment, including targeting checkpoint pathways, modulating metabolic pathways and generating cytokine-producing T cells, have considerably enhanced adoptive T cell immunotherapy, first in preclinical models and now in patients.

Intuition Is The Highest Form Of Intelligence, According To Psychologist

According to Gerd Gigerenzer, who works at the Max Planck Institute for Human Development, true intuitiveness is having the necessary instinct to understand what knowledge we need to focus on and what we can afford to forget.

In his book ‘Gut Feelings: The Intelligence of the Unconscious’, Gigerenzer uses himself as an example to show how intuition and rationality can go hand in hand. While immersed in his research, he often gets a hunch, which he usually pursues because he simply knows it will lead him to the answer. He also double-checks, using scientific formulae to work out the reasons behind his hunches. When it comes to personal matters, however, he goes solely by what his intuition tells him.