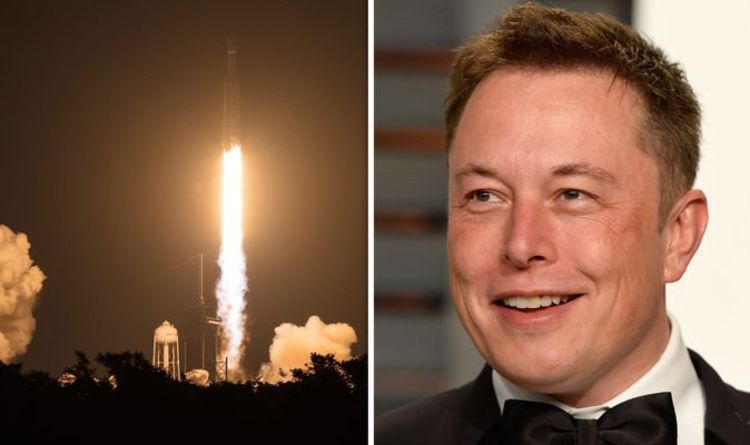

Elon Musk is a lot of things to a lot of people, but there’s something very interesting about him that sets him apart from most others: If he thinks something is worth improving, there’s a better-than-coin-toss chance he’s going to make a go of it.

Now, Musk is a fantastically creative guy and all, but I’m not here to shower him with accolades (today, anyhow). I’m setting the stage to discuss the next so-called improbable thing he might take on in the near future.