
First Images of the James Webb Space Telescope (Official NASA Broadcast)

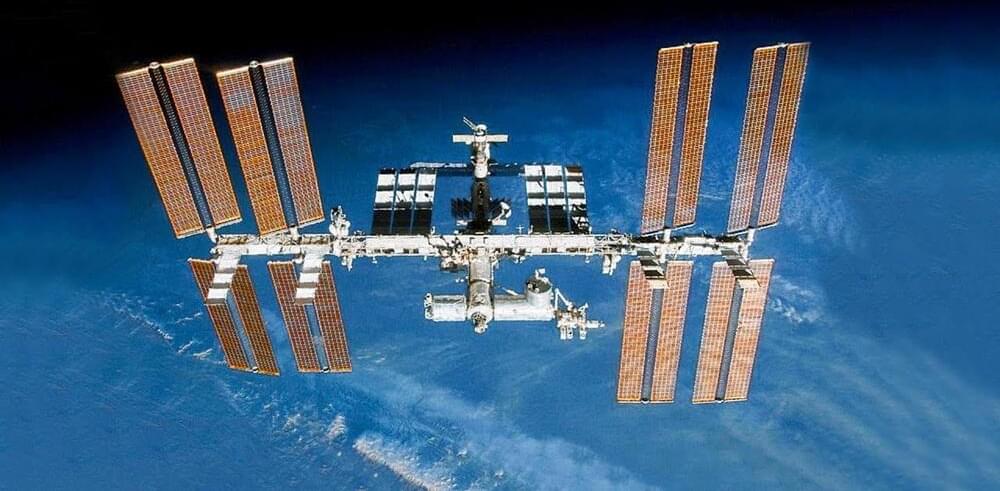

It’s time to #UnfoldTheUniverse. Watch as the mission team reveals the long-awaited first images from the James Webb Space Telescope. Webb, an international collaboration led by NASA with our partners the European Space Agency and the Canadian Space Agency, is the biggest telescope ever launched into space. It will unlock mysteries in our solar system, look beyond to distant worlds around other stars, and probe the mysterious structures and origins of our universe and our place in it.

All about Webb: https://webb.nasa.gov

Tetraneutron: An Exotic State of Matter Discovered

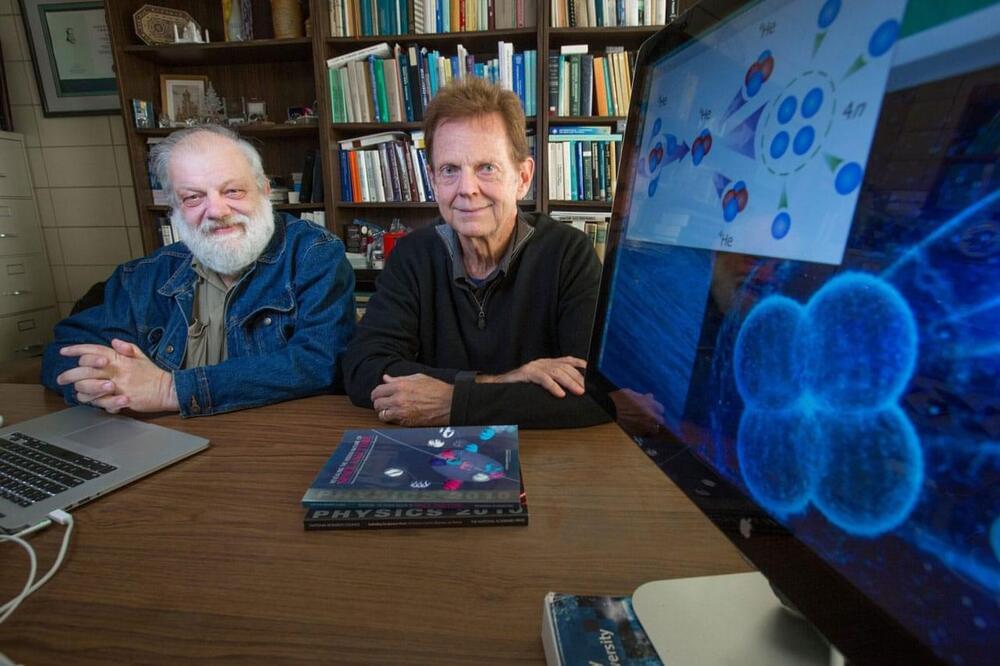

A long-standing question in nuclear physics is whether chargeless nuclear systems can exist. Neutron stars are the only known near-pure neutron systems, in which gravity squeezes neutrons together to very high densities. The experimental search for isolated multi-neutron systems has focused in particular on the four-neutron system known as the tetraneutron. That search had produced only a few hints of its existence, leaving the tetraneutron an elusive nuclear system for six decades.

A recently announced experimental discovery of a tetraneutron by an international group led by scientists from Germany's Technical University of Darmstadt opens the door to new research and could lead to a better understanding of how the universe is put together. This new and exotic state of matter could also have properties that are useful in existing or emerging technologies.

Theoretical physicist James Vary first announced a prediction of the tetraneutron during a presentation in the summer of 2014, followed by a research paper in the fall of 2016. He has been waiting for nuclear physics experiments to confirm it ever since.

Amazon's Alexa Will Soon Be Able to Use a Dead Person's Voice

Amazon introduced the voice technology at Amazon re:MARS 2022, its annual AI event centered on machine learning, automation, robotics, and space. Alexa AI head scientist Rohit Prasad described the upcoming feature as a way to remember friends and family members who have passed away.

“While AI can’t eliminate the pain of loss, it can definitely make their memories last,” Prasad said.

Prasad demonstrated the feature with a video of a child asking Alexa whether his grandmother could finish reading him a story. The smart speaker agreed in its regular Alexa voice; then the grandmother's voice took over as the child flipped through his own copy of The Wizard of Oz. Although viewers had no way of knowing what the woman's real voice sounded like, her synthesized voice did sound quite natural, with the cadence of a typical bedtime-story reader.

A New Breakthrough in Biology Allows Scientists to Grow Food Without Sunlight

The researchers optimized their electrolyzer to produce the highest levels of acetate yet achieved in an electrolyzer. They also found that crop plants, including cowpea, tomato, rice, green pea, and tobacco, all have the potential to be grown in the dark using the carbon from acetate. Acetate might even improve crop yields, though more research is required.

The researchers believe that by reducing the reliance on direct sunlight, artificial photosynthesis could provide an important alternative for food growth in the coming years, as the world adapts to the worst effects of climate change — including droughts, floods, and reduced land availability. “Using artificial photosynthesis approaches to produce food could be a paradigm shift for how we feed people. By increasing the efficiency of food production, less land is needed, lessening the impact agriculture has on the environment. And for agriculture in non-traditional environments, like outer space, the increased energy efficiency could help feed more crew members with less inputs,” Jinkerson explained.

5 Planets Take Center Stage as They Align in the Night Sky

A rare five-planet alignment will peak on June 24, offering a spectacular view of Mercury, Venus, Mars, Jupiter, and Saturn as they line up in planetary order.

The alignment began in early June and has grown brighter and easier to see as the month has progressed, according to Diana Hannikainen, observing editor of Sky & Telescope.

A waning crescent moon will join the lineup between Venus and Mars on Friday, adding another celestial object to the display. The moon will stand in for Earth's relative position in the alignment, marking where our planet falls in the planetary order.