This is an excerpt from the conclusion section of “…NASA’s Managerial and Leadership Methodology, Now Unveiled!..!” by Mr. Andres Agostini, which discusses some management theories and practices. To read the entire piece, click the link at the end of this illustrated article and presentation:

Beyond being aware, adaptable and resilient before the driving forces reshaping the present and the future, I concurrently practice some extra management suggestions:

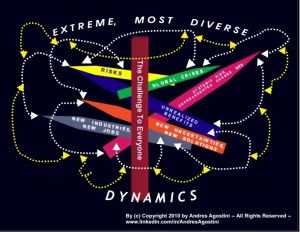

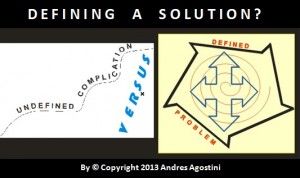

1. Given the vast amount of insidious risks, futures, challenges, principles, processes, contents, practices, tools, techniques, benefits and opportunities, there needs to be a full-bodied practical and applicable methodology (methodologies are utilized and implemented to solve complex problems and to facilitate the decision-making and anticipatory process).

The manager must always address issues with a Panoramic View and must also exercise the envisioning of both the Whole and the Granularity of Details, along with the embedded (corresponding) interrelationships and dynamics (that is, [i] interrelationships and dynamics of the subtle, [ii] interrelationships and dynamics of the overt and [iii] interrelationships and dynamics of the covert).

Both dynamic complexity and detail complexity, along with fuzzy logic, must be pervasively considered, as well.

To this end, it is wisely argued, “…You can’t understand the knot without understanding the strands, but in the future, the strands need not remain tied up in the same way as they are today…”

For instance, disparate skills, talents, dexterities and expertise will never suffice. A cohesive and congruent, yet proven, methodology (see the one above) must be optimally implemented.

Similarly, a Chinese proverb advises, “…Don’t look at the waves but the currents underneath…”

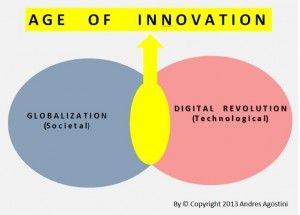

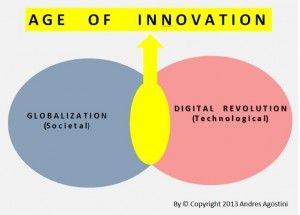

2. One must always be futurewise and technologically fluent. Don’t fight these extreme forces, just use them! One must use counter-intuitiveness (geometrically non-linearly so), insight, hindsight, foresight and far-sight in every day of the present and future (all of this in the most staggeringly exponential mode). To shed some light, I will share two quotes.

The Panchatantra (a body of Eastern philosophical knowledge) establishes, “…Knowledge is the true organ of sight, not the eyes…” And Antonio Machado argues, “…An eye is not an eye because you see it; an eye is an eye because it sees you…”

Managers always need a clear, knowledgeable vision. Did you already connect the dots stemming from the Panchatantra and Machado? Did you already integrate those dots into your big-picture vista?

As a side note, British Prime Minister W. E. Gladstone considered, “…You cannot fight against the future…”

3. In all the Manager does, he / she must observe and apply, at all times, a sine qua non maxim, “…everything is related to everything else…”

4. Always manage as if it were a “project.” Use, at all times, the “…Project Management…” approach.

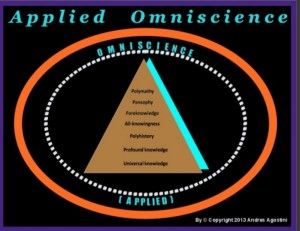

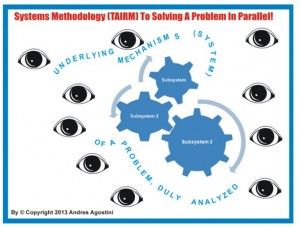

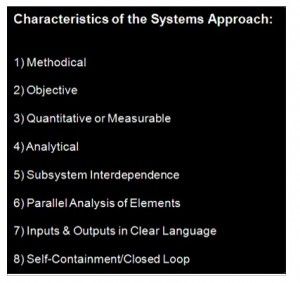

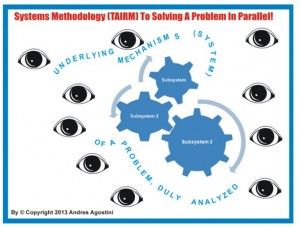

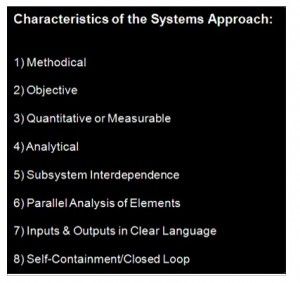

5. Always use the systems methodology with the applied omniscience perspective.

In this case, David, I mean to assert: The term “Science” equates to about 90% “…Exact Sciences…” and about 10% “…Social Sciences…” All science must be instituted with the engineering view.

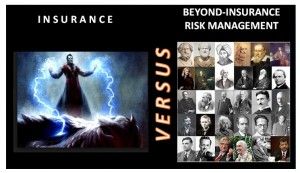

6. Always institute beyond-insurance risk management as you boldly integrate it with your futuring skill / expertise.

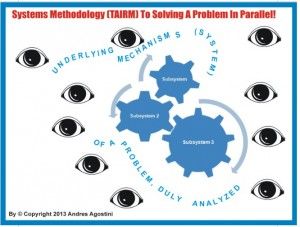

7. In my firmest opinion, the following must be complied with this way (verbatim): corporate strategic planning and execution (performing) are a function of a grander application of beyond-insurance risk management. It will never work well the other way around. Transformative and Integrative Risk Management (TAIRM) is the optimal mode to do advanced strategic planning and execution (performing).

TAIRM is not only focused on terminating, mitigating and modulating risks (expenses of treasure and losses of life), but also concentrated on bringing under control fiscally-sound, sustainable organizations and initiatives.

TAIRM underpins sensible business prosperity and sustainable growth and progress.

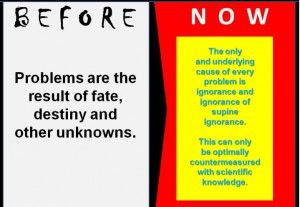

8. I also believe that we must pragmatically apply the scientific method in all we manage to the best of our capacities.

If we are “…MANAGERS…” in a Knowledge Economy and Knowledge Era (not a knowledge-driven eon because of superficial and hollow caprices of the follies and simpletons), we must therefore engage in extensive and intensive learning and un-learning for Life if we want to succeed and be sustainable.

As a consequence, Dr. Noel M. Tichy, PhD. argues, “…Today, intellectual assets trump physical assets in nearly every industry…”

Consequently, Alvin Toffler indicates, “…In the world of the future, THE NEW ILLITERATE WILL BE THE PERSON WHO HAS NOT LEARNED TO LEARN…”

We don’t need to be scientists to learn some basic principles of advanced science.

Accordingly, Dr. Carl Sagan, PhD. expressed, “…We live in a society exquisitely dependent on science and technology, in which hardly anyone knows about science and technology…” And Edward Teller stated, “…The science of today is the technology of tomorrow …”

Also crucial is this quotation by Winston Churchill, “…If we are to bring the broad masses of the people in every land to the table of abundance, IT CAN ONLY BE BY THE TIRELESS IMPROVEMENT OF ALL OF OUR MEANS OF TECHNICAL PRODUCTION…”

I am not a scientist but I tirelessly support responsible scientists and science. I like scientific and technological knowledge and methodologies a great deal.

Chiefly, I am a self-made college autodidact engaged in extreme practical and theoretical world-class learning for Life.

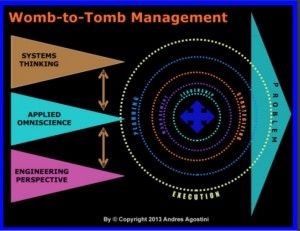

9. In any management undertaking, and given the universal volatility and rampant and uninterrupted rate of change, one must think and operate in a fluid womb-to-tomb mode.

The manager must think and operate holistically (both systematically and systemically) at all times.

The manager must also be: i) Multidimensional, ii) Interdisciplinary, iii) Multifaceted, iv) Cross-functional, and v) Multitasking.

That is, the manager must now be an expert state-of-the-art generalist and erudite. ERGO, THIS IS THE NEWEST SPECIALIST AND SPECIALIZATION.

Managers must never manage elements, components or subsystems separately or disparately (that is, they mustn’t ever manage in series).

Managers must always manage the entire system at the same time (that is, managing in parallel or simultaneously the totality of the whole at once).

10. In any profession, beginning with management, one must always and cleverly upgrade his / her learning and education until the last breath.

An African proverb argues, “…Tomorrow belongs to the people who prepare for it…” And Winston Churchill established, “…The empires of the future are the empires of the mind…” And an ancient Chinese Proverb: “…It is not our feet that move us along — it is our minds…”

And Malcolm X observed, “…The future belongs to those who prepare for it today…” And Leonard I. Sweet considered, “…The future is not something we enter. The future is something we create…”

And finally, James Thomson argued, “…Great trials seem to be a necessary preparation for great duties …”

Consequently, Dr. Gary Hamel, PhD. indicates, “…What distinguishes our age from every other is not the world-flattening impact of communications, not the economic ascendance of China and India, not the degradation of our climate, and not the resurgence of ancient religious animosities. RATHER, IT IS A FRANTICALLY ACCELERATING PACE OF CHANGE…”

Please see the full presentation at http://goo.gl/8fdwUP