Pedal to the floor.

As the dust settles on OpenAI’s latest drama, a letter from several staff researchers has surfaced, citing concerns about an AI superintelligence model under development that could pose a threat to humanity, according to people familiar with the matter. The previously undisclosed letter is reported to have been a key factor behind Sam Altman’s firing from the company.

The model, known internally as Project Q*, could represent a major breakthrough in the company’s pursuit of artificial general intelligence (AGI): highly autonomous AI systems capable of cumulative learning and of outperforming humans at most tasks. And you were worried about ChatGPT taking all our jobs?

With Sam Altman now firmly back at the company and a new OpenAI board in place, here is what we know about Project Q*, along with the broader implications of AGI.

This video explores the future of the world from 2030 to 10,000 A.D. and beyond. Watch this next video about the Technological Singularity: https://youtu.be/yHEnKwSUzAE

🎁 5 Free ChatGPT Prompts To Become a Superhuman: https://bit.ly/3Oka9FM

🤖 AI for Business Leaders (Udacity Program): https://bit.ly/3Qjxkmu

☕ My Patreon: https://www.patreon.com/futurebusinesstech

➡️ Official Discord Server: https://discord.gg/R8cYEWpCzK

0:00 2030

12:40 2050

39:11 2060

49:57 2070

01:04:58 2080

01:16:39 2090

01:28:38 2100

01:49:03 2200

02:05:48 2300

02:20:31 3000

02:28:18 10,000 A.D.

02:35:29 1 Million Years

02:43:16 1 Billion Years

SOURCES:

• https://www.futuretimeline.net

• The Singularity Is Near: When Humans Transcend Biology (Ray Kurzweil): https://amzn.to/3ftOhXI

• The Future of Humanity (Michio Kaku): https://amzn.to/3Gz8ffA

• AI 2041: 10 Visions of Our Future (Kai-Fu Lee & Chen Qiufan): https://amzn.to/3bxWat6

• Tim Ferriss Podcast [Chris Dixon and Naval Ravikant — The Wonders of Web3, How to Pick the Right Hill to Climb, Finding the Right Amount of Crypto Regulation, Friends with Benefits, and the Untapped Potential of NFTs (542)]: https://tim.blog/2021/10/28/chris-dixon-naval-ravikant/

• https://2050.earth/

• https://research.aimultiple.com/artificial-general-intellige…ty-timing/

• https://mars.nasa.gov/mars2020/spacecraft/rover/communications/

• https://www.forbes.com/sites/tomtaulli/2020/08/14/quantum-co…3acd9f3b4c

• https://cointelegraph.com/news/tales-from-2050-a-look-into-a-world-built-on-nfts

• https://medium.com/theblockchainu/a-day-in-life-of-a-cryptoc…a07649f14d

• https://botland.store/blog/story-of-the-internet-from-web-1&…b-4-0/

• https://www.analyticsinsight.net/light-based-computer-chips-…h-photons/

• https://www.wired.com/story/chip-ai-works-using-light-not-electrons/

• https://www.science.org/content/article/light-based-memory-c…store-data

💡 Future Business Tech explores the future of technology and the world.

Examples of topics I cover include:

• Artificial Intelligence & Robotics.

• Virtual and Augmented Reality.

• Brain-Computer Interfaces.

• Transhumanism.

• Genetic Engineering.

SUBSCRIBE: https://bit.ly/3geLDGO

On Tuesday, Stability AI released Stable Video Diffusion, and the first implementation that individual users can run on their own hardware is already available.

The makers of the Stable Diffusion tool “ComfyUI” have added support for Stability AI’s Stable Video Diffusion models in a new update. ComfyUI is a graphical user interface for Stable Diffusion that uses a graph/node interface, allowing users to build complex workflows. It is an alternative to other interfaces such as AUTOMATIC1111.

According to the developers, the update can generate 25-frame videos at 1,024 × 576 resolution on the seven-year-old Nvidia GTX 1080 with 8 GB of VRAM. AMD users can also run the generative video model in ComfyUI on a Radeon RX 6800 XT using ROCm on Linux. Generating a video takes about three minutes.

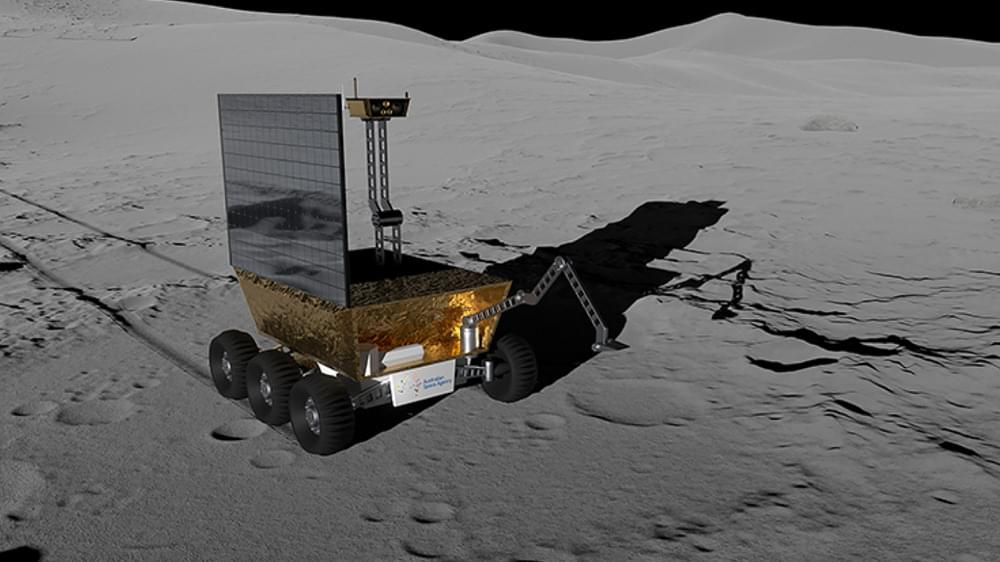

The Australian Space Agency has revealed its shortlist of names for the country’s first lunar rover — and you can help choose the winner by casting your vote.

Developed in partnership with NASA, the agency’s Australian-made, semi-autonomous rover could launch to the moon on a future Artemis mission as early as 2026. The rover will be able to pick up lunar rocks and dust and bring the samples back to a NASA-operated moon lander.

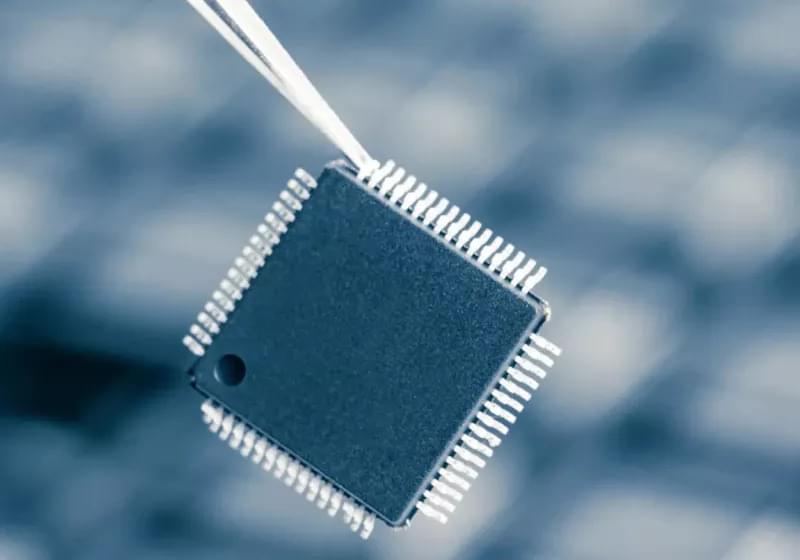

Why it matters: While AI algorithms are seemingly everywhere, the most popular platforms require powerful server GPUs to deliver their generative services to customers. Arm is introducing a new dedicated chip design that is set to bring AI acceleration to even the most affordable IoT devices starting next year.

The Arm Cortex-M52 is the smallest and most cost-efficient processor designed for AI acceleration, according to the company. Arm says this latest design from the UK-based fabless firm will deliver “enhanced” AI capabilities to Internet of Things (IoT) devices without the need for a separate computing unit.

Paul Williamson, Arm’s SVP and general manager of its IoT business, emphasized the need to bring machine-learning-optimized processing to “even the smallest and lowest-power” endpoint devices to fully realize the potential of AI in IoT. Despite AI’s ubiquity, Williamson noted, harnessing the “intelligence” in the vast amounts of data flowing through digital devices requires IoT appliances that are smarter and more capable.

Vernor Vinge is one of the foremost thinkers about the future of artificial intelligence and the potential for a technological singularity to occur in the coming decades. He’s a science fiction writer who’s had a profound impact on a wide range of authors, including William Gibson, Charles Stross, Neal Stephenson and Dan Simmons.

Many of Vinge’s works are brilliant. Among them are some of my all-time favorites in the SF genre. And he’s been recognized with numerous awards, including seven Hugo nominations and five wins, despite writing only eight novels and 24 short stories and novellas over a span of five decades.

In this video, I discuss his early works from the 1960s to the 1980s. His later works from the 1980s onward are the subject of my next video.

0:42 What is a technological singularity?

4:51 A.I. in science fiction history.

5:38 Should we be afraid?

6:59 Who is Vernor Vinge?

8:50 Short stories.

11:26 Tatja Grimm’s World (1969, 1987)

13:47 The Witling (1976)

17:40 True Names (1981)

#booktube #booktubesff #sciencefiction #scifi #singularity #artificialintelligence #sf

Exclusive: OpenAI researchers warned board of AI breakthrough ahead of CEO ouster, sources say: https://www.reuters.com/technology/sam-altmans-ouster-openai…11-22/

OpenAI Made an AI Breakthrough Before Altman Firing, Stoking Excitement and Concern: https://www.theinformation.com/articles/openai-made-an-ai-br…nd-concern

The Bitter Lesson: http://www.incompleteideas.net/IncIdeas/BitterLesson.html

Get on my daily AI newsletter 🔥 https://natural20.beehiiv.com/subscribe

[News, Research and Tutorials on AI] See more at: https://natural20.com/

My AI Playlist: https://www.youtube.com/playlist?list=PLb1th0f6y4XROkUAwkYhcHb7OY9yoGGZH