Software developed by Brown researchers can translate expressive and complex plain-worded instructions into behaviors a robot can carry out, all without needing thousands of hours of training data.

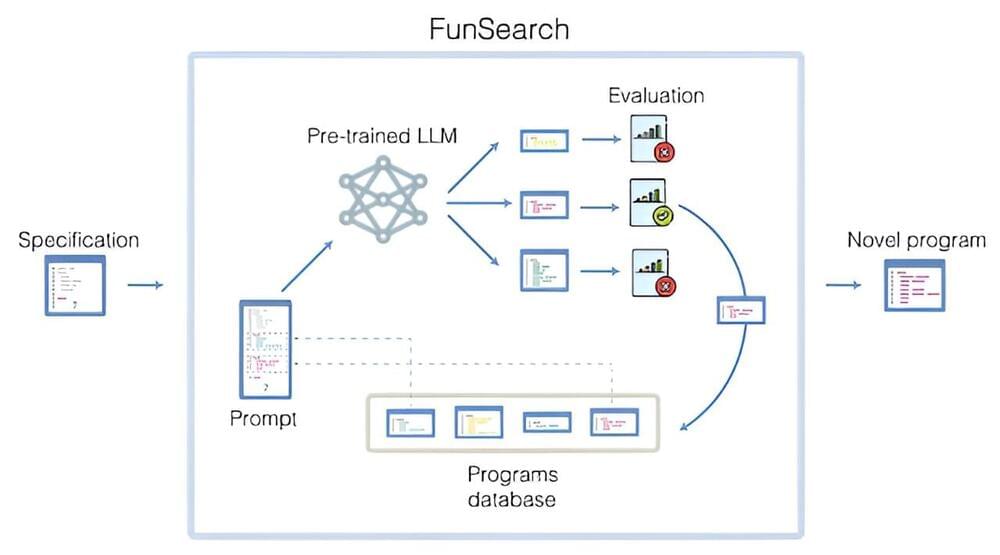

A team of computer scientists at Google’s DeepMind project in the U.K., working with a colleague from the University of Wisconsin-Madison and another from Université de Lyon, has developed a computer program that combines a pretrained large language model (LLM) with an automated “evaluator” to produce solutions to problems in the form of computer code.

In their paper published in the journal Nature, the group describes their ideas, how they were implemented and the types of output produced by the new system.

Researchers throughout the scientific community have taken note of what people are doing with LLMs such as ChatGPT, and it has occurred to many of them that LLMs might help speed up the process of scientific discovery. But they have also noted that for that to happen, a method is required to prevent confabulations: answers that seem reasonable but are wrong. The output needs to be verifiable. To address this problem, the team working in the U.K. used what they call an automated evaluator to assess the answers given by an LLM.
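The general idea of pairing a generator with an automated check can be illustrated with a minimal Python sketch. It is not the team's implementation: the `propose` function is a hypothetical stand-in for an LLM that simply replays a fixed list of candidate programs, and the toy task (summing the integers from 1 to n), the `CANDIDATES` list, the `CASES` list and the `evaluate` and `search` helpers are all assumptions made for illustration.

```python
from typing import Dict, Iterator, List, Optional, Tuple

# Hypothetical stand-in for LLM output: candidate programs as source text.
# Several look reasonable but are wrong, mimicking confabulated answers.
CANDIDATES: List[str] = [
    "def solve(n):\n    return n * n\n",             # plausible but wrong
    "def solve(n):\n    return n * (n + 1)\n",       # plausible but wrong
    "def solve(n):\n    return n * (n + 1) // 2\n",  # correct closed form
    "def solve(n):\n    return sum(range(n))\n",     # off by one
]

# Assumed toy task: solve(n) should return 1 + 2 + ... + n.
CASES: List[Tuple[int, int]] = [(1, 1), (2, 3), (3, 6), (10, 55)]


def propose() -> Iterator[str]:
    """Yield candidate programs (a real system would query an LLM here)."""
    yield from CANDIDATES


def evaluate(source: str) -> float:
    """Run a candidate and return the fraction of known cases it passes."""
    namespace: Dict[str, object] = {}
    try:
        # Illustration only: untrusted code should run in a sandbox.
        exec(source, namespace)
        solve = namespace["solve"]
        passed = sum(1 for x, want in CASES if solve(x) == want)
    except Exception:
        return 0.0  # a program that crashes earns no credit
    return passed / len(CASES)


def search() -> Tuple[Optional[str], float]:
    """Keep the highest-scoring candidate seen so far."""
    best_source: Optional[str] = None
    best_score = 0.0
    for source in propose():
        score = evaluate(source)
        if score > best_score:
            best_source, best_score = source, score
    return best_source, best_score


best_source, best_score = search()
print(best_score)
print(best_source)
```

Because every candidate is executed against known cases, an answer that merely looks right cannot outscore one that is right, which is the property the evaluator is meant to provide.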

Barry’s prowess is evident in tests, where it achieved a maximum payload-to-weight ratio of 2 on flat terrain.

Aiming to solve the challenge of legged robots still being “weak, slow, inefficient, or fragile to take over tasks that involve heavy payloads,” a team of researchers from the Robotic Systems Lab at ETH Zurich has developed a promising proposition.

Meet Barry, a dynamically balancing quadruped robot optimized for high payload capabilities and efficiency, which promises to help humans tackle challenging manual work scenarios. The quadruped’s new leg design ensures that it can “handle unmodeled payloads up to 198 pounds (90 kilograms) while operating at high efficiency,” according to a study by the team.

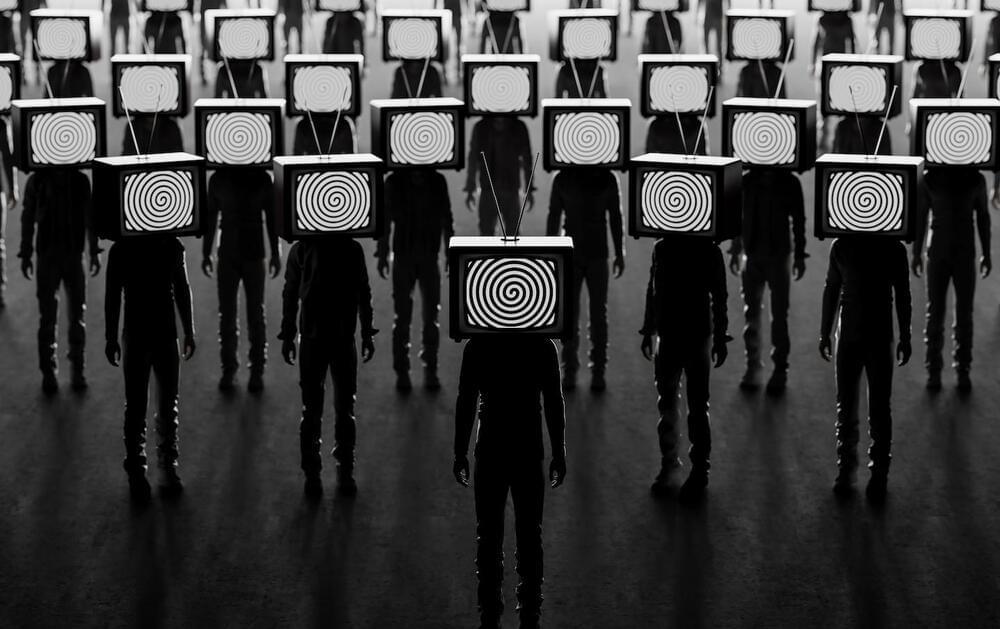

AI is making it easy for anyone to create propaganda outlets, producing content that can be hard to differentiate from real news.

Artificial intelligence is automating the creation of fake news, spurring an explosion of web content mimicking factual articles that instead disseminate false information about elections, wars and natural disasters.

This past week, Axel Springer, the German media conglomerate that owns Politico and Business Insider, signed a “multiyear licensing deal” with OpenAI worth tens of millions of euros.

The big deal OpenAI just inked with Axel Springer offers a glimpse of how AI and the media might eventually intersect.

ICYMI: DeepSouth uses a neuromorphic computing system that mimics biological processes, using hardware to efficiently emulate large networks of spiking neurons at 228 trillion synaptic operations per second, rivalling the estimated rate of operations in the human brain.

Australian researchers are putting together a supercomputer designed to emulate the world’s most efficient learning machine – a neuromorphic monster capable of the same estimated 228 trillion synaptic operations per second that human brains handle.

As the age of AI dawns upon us, it’s clear that this wild technological leap is one of the most significant in the planet’s history, and will very soon be deeply embedded in every part of our lives. But it all relies on absolutely gargantuan amounts of computing power. Indeed, on current trends, the AI servers NVIDIA sells alone will likely be consuming more energy annually than many small countries. In a world desperately trying to decarbonize, that kind of energy load is a massive drag.

But as often happens, nature has already solved this problem. Our own necktop computers are still the state of the art, capable of learning super quickly from small amounts of messy, noisy data, or processing the equivalent of a billion billion mathematical operations every second – while consuming a paltry 20 watts of energy.
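To put the brain figures above on a single scale, the short Python sketch below converts them into operations per joule. Both inputs are the article's own estimates; the `ops_per_joule` helper and the constant names are introduced here purely for illustration.

```python
# Estimates quoted in the text, not measurements.
BRAIN_OPS_PER_SECOND = 1e18  # "a billion billion" operations every second
BRAIN_POWER_WATTS = 20.0     # "a paltry 20 watts"


def ops_per_joule(ops_per_second: float, watts: float) -> float:
    """One watt is one joule per second, so (ops/s) / (J/s) gives ops/J."""
    return ops_per_second / watts


brain_efficiency = ops_per_joule(BRAIN_OPS_PER_SECOND, BRAIN_POWER_WATTS)
print(f"{brain_efficiency:.1e} operations per joule")
```

On those figures, the brain performs roughly 5 × 10^16 operations for every joule it consumes.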

Tesla’s potential market size for its humanoid robot, Optimus, presents a massive opportunity worth trillions of dollars, far surpassing the impact of their electric vehicles.

Questions to inspire discussion.

What is the potential market size for Tesla’s humanoid robot, Optimus?

—The potential market size for Optimus presents a massive opportunity worth trillions of dollars, far surpassing the impact of Tesla’s electric vehicles.

If you think Tesla’s next-generation bot, TeslaBot, looks good for no reason, then think again! Dr. Scott Walter and I go into the reasons that the way the new Optimus robot looks matters so much to the way it functions, and it’s pretty cool! Plus, Scott rants about the fraudulent copyright claims Univision laid on all TeslaBot videos!

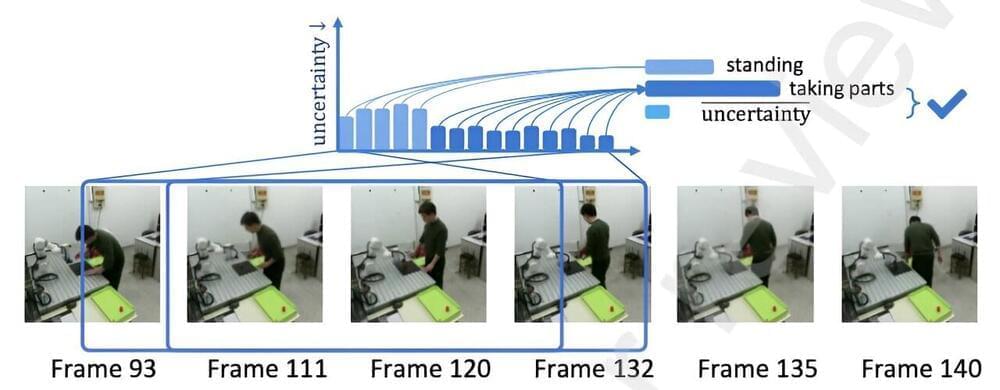

The digital twin system developed by Zhang, Ji, and their colleagues creates a virtual replica of a scene in which a human and robot agent are collaborating.

Robotics systems have already been introduced in numerous real-world settings, including some industrial and manufacturing facilities. In these facilities, robots can assist human assembly line and warehouse workers, assembling some parts of products with high precision and then handing them to human agents tasked with performing additional actions.

In recent years, roboticists and computer scientists have been trying to develop increasingly advanced systems that could enhance these interactions between robots and humans in industrial settings. Some proposed solutions rely on so-called ‘digital twin’ systems, virtual models designed to accurately reproduce a physical object, such as specific products or components that are being manufactured.

Researchers at Nanjing University of Aeronautics and Astronautics in China recently introduced a new digital twin system that could improve the collaboration between human and robotic agents in manufacturing settings. This system, introduced in a paper published in Robotics and Computer-Integrated Manufacturing, can create a virtual map of real-world environments to plan and execute suitable robot behaviors as they cooperate with humans on a given task.