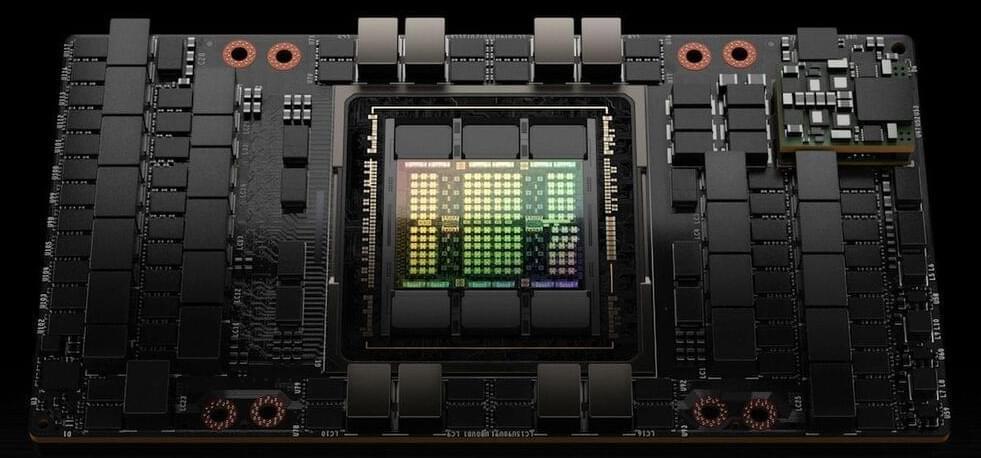

The AI gold rush has brought many market opportunities to the space tech sector, said Zainab Qasim, an investor at Seraphim.

“AI’s impact on existing tech used in space will no doubt become more prevalent over the coming years allowing faster research and development execution and smarter insights for end customers,” she said.

AI has a “heavy hand” in the development of future climate and space technologies, said Jeff Crusey, a partner at early-stage fund 7percent Ventures, adding that it has “dramatically improved the efficiency of models, improving logistics, fuel savings, and ultimately the environment.”