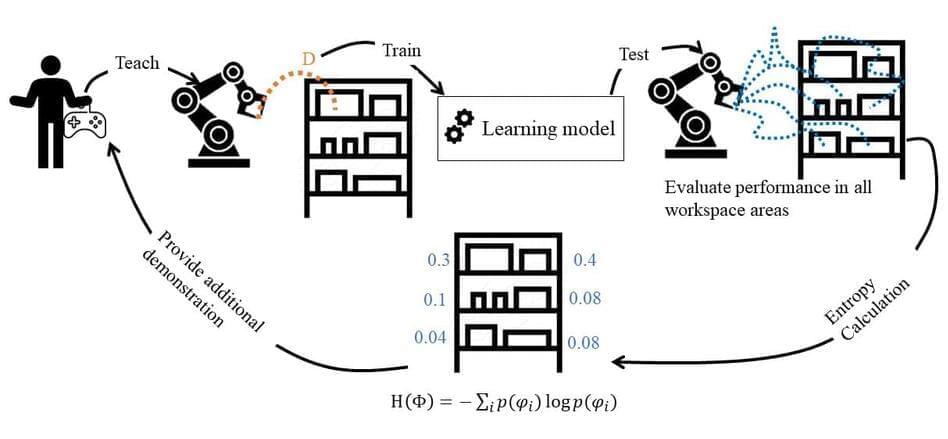

To assist humans effectively in real-world settings, robots should be able to learn new skills and adapt their actions to what users require of them at different times. One way to achieve this is to design computational approaches that allow robots to learn from human demonstrations: for instance, by observing videos of a person washing dishes and learning to repeat the same sequence of actions.

Researchers at the University of British Columbia, Carnegie Mellon University, Monash University and the University of Victoria recently set out to gather more reliable data for training robots via demonstrations. Their paper, posted to the arXiv preprint server, shows that the data they gathered can significantly improve the efficiency with which robots learn from human users' demonstrations.

“Robots can build cars, gather the items for shopping orders in busy warehouses, vacuum floors, and keep the hospital shelves stocked with supplies,” Maram Sakr, one of the researchers who carried out the study, told Tech Xplore. “Traditional robot programming systems require an expert programmer to develop a robot controller that is capable of such tasks while responding to any situation the robot may face.”