This isn’t rocket science; it’s neuroscience.

Since antiquity, people have striven to improve their cognitive abilities. From the advent of the wheel to the development of artificial intelligence, technology has had a profound influence on civilization. Cognitive enhancement, or the augmentation of brain functions to improve physical and mental abilities, has become a trending topic in both academic and public debates. Recent years have seen a plethora of suggestions for boosting cognitive functions, and biochemical, physical, and behavioral strategies are being explored in the field of cognitive enhancement. Alongside the expansion of behavioral and biochemical approaches, various physical strategies are known to boost mental abilities in both diseased and healthy individuals. Clinical applications of neuroscience technologies offer alternatives to pharmaceutical approaches and devices for diseases that have so far been fatal. Importantly, the distinctive aspects of these technologies, which shape their existing and anticipated roles in brain augmentation, are used to compare and contrast them. As a preview of the next two decades of progress in brain augmentation, this article presents a plausible assessment of the many neuroscience technologies, their merits, drawbacks, and applications. The review also focuses on the ethical implications and challenges linked to modern neuroscientific technology. At times, ethics discussions appear more concerned with the hypothetical than with the factual. We conclude by providing recommendations for potential future studies and areas of development, taking into account anticipated advances in neuroscience innovation for brain enhancement, historical patterns, neuroethics, and other related forecasts.

Keywords: brain 2025, brain-machine interface, deep brain stimulation, ethics, non-invasive and invasive brain stimulation.

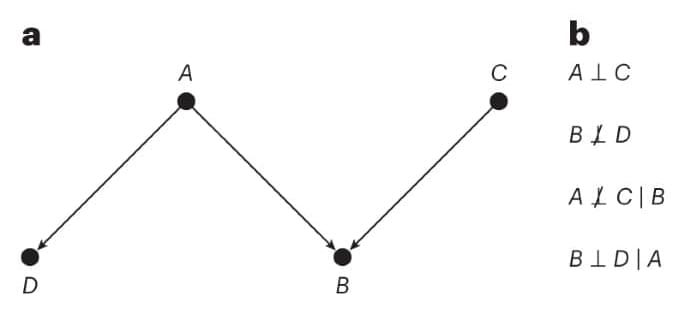

Humans have striven to increase their mental capacities since ancient times. From symbolic language, writing, and the printing press to mathematics, calculators, and computers, mankind has devised and employed tools to record, store, and exchange thoughts and to enhance cognition. The health care delivery system is undergoing revolutionary changes as a result of the accelerating pace of innovation and the increasing use of technology to meet society’s evolving health care needs (Sullivan and Hagen, 2002). Researchers working on cognitive enhancement aim to understand the neurobiological and psychological mechanisms underlying cognitive capacities, whereas theorists are more interested in the social and ethical implications of enhancement (Dresler et al., 2019; Oxley et al., 2021).