Scientists are exploring the potential of quantum machine learning. But whether there are useful applications for the fusion of artificial intelligence and quantum computing is unclear.

Category: robotics/AI

Meet Aloha, a housekeeping humanoid system that can cook and clean

The system also allows whole-body teleoperation, enabling simultaneous control of all degrees of freedom, including both arms and the mobile base.

The system builds on Google DeepMind’s Aloha system, emphasizing the importance of mobility and dexterity in the field of robotic learning.

Harvard’s robot exosuit helps Parkinson’s patients walk without freezing

Researchers from Harvard SEAS and Boston University reveal the exosuit’s transformative effects, offering newfound mobility and independence for individuals with this debilitating condition.

The wearable tech successfully eliminates a common symptom called ‘gait freezing’ to restore smooth strides for Parkinson’s disease sufferers.

Chinese firm’s first humanoid robot to take the fight to Tesla Optimus

Kepler asserts that its general-purpose Forerunner series showcases advanced capabilities in body movements, precise hand control, and sophisticated visual perception. This positions it as a formidable competitor to Tesla’s Optimus in the realm of humanoid robotics.

The firm claims that its humanoid robot aims to enhance “productivity with cutting-edge technology, hastening the arrival of a ‘three-day work week.’ The shift will enable humans to dedicate more time to meaningful endeavors, such as space exploration,” said Debo Hu, co-founder of the firm, in a statement.

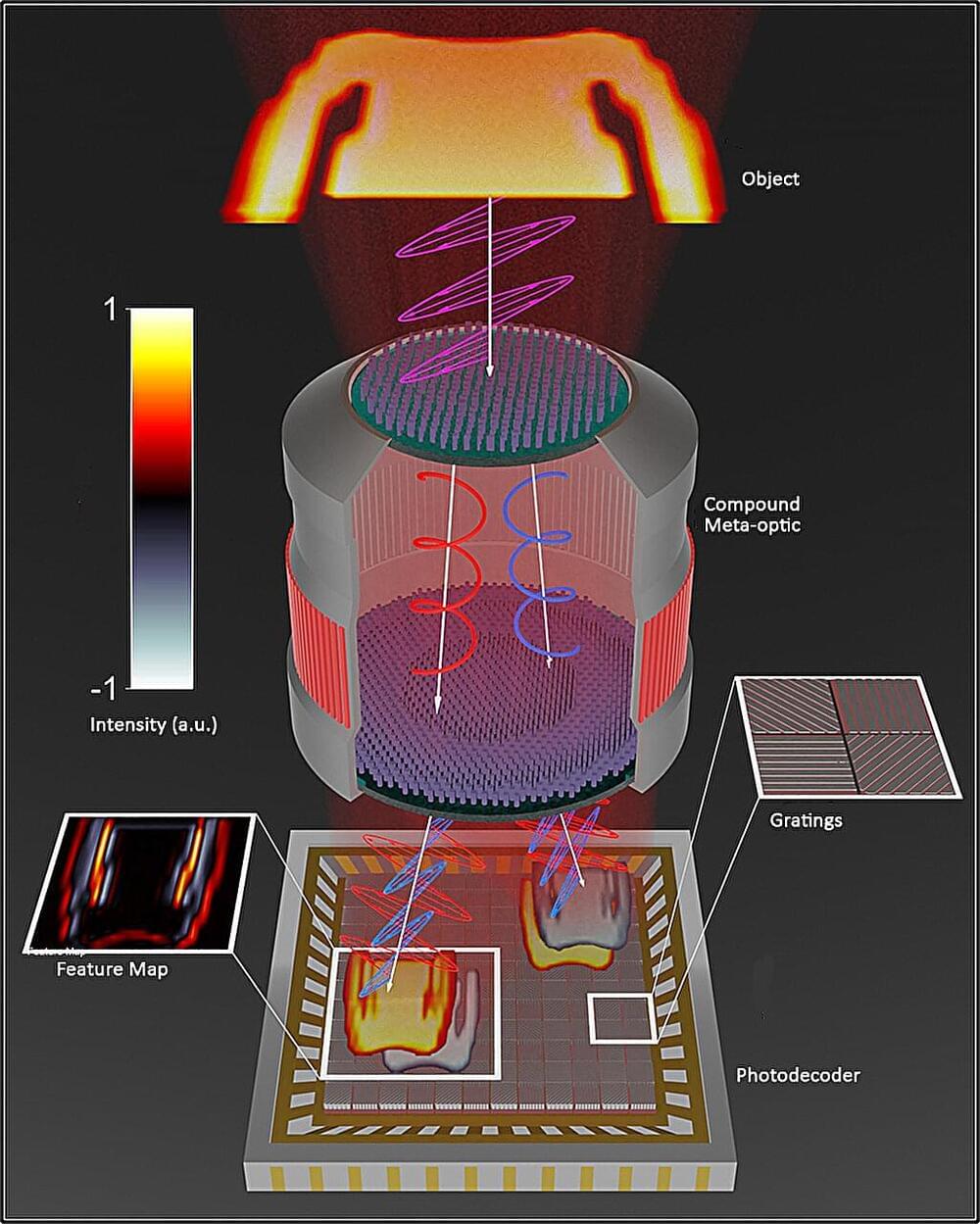

Nanostructured flat lens uses machine learning to ‘see’ more clearly, while using less power

The applications range from surveillance to defense to AI/ML virtualization, and the lens is more compact and energy efficient. Oh, and let’s not forget the medical imaging applications. I just wonder how long it will be until it’s put into use.

A front-end lens, or meta-imager, created at Vanderbilt University can potentially replace traditional imaging optics in machine-vision applications, producing images at higher speed and using less power.

Nanostructuring the lens material into a meta-imager filter slims down the typically thick optical lens and enables front-end processing that encodes information more efficiently. The imagers are designed to work in concert with a digital backend, offloading computationally expensive operations into high-speed, low-power optics. The resulting images have potentially wide applications in security systems, medical applications, and government and defense industries.

The proof-of-concept meta-imager, developed by mechanical engineering professor Jason Valentine, deputy director of the Vanderbilt Institute of Nanoscale Science and Engineering, and colleagues, is described in a paper published in Nature Nanotechnology.

Google DeepMind has new rules to make sure AI robots behave when tidying your home

The tech giant has introduced a set of new advancements that seek to make robots powered by AI a lot safer to deploy at home.