The current AI revolution appears to be having a ‘1995 moment’ like the early days of the internet — and Nvidia is leading that charge, analyst says.

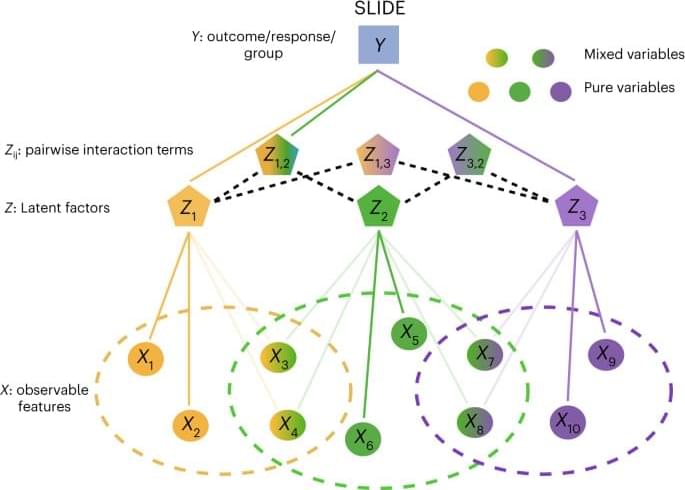

We developed Significant Latent Factor Interaction Discovery and Exploration (SLIDE), an interpretable machine learning approach that can infer hidden states (latent factors) underlying biological outcomes. These states capture the complex interplay between factors derived from multiscale, multiomic datasets across biological contexts and scales of resolution.

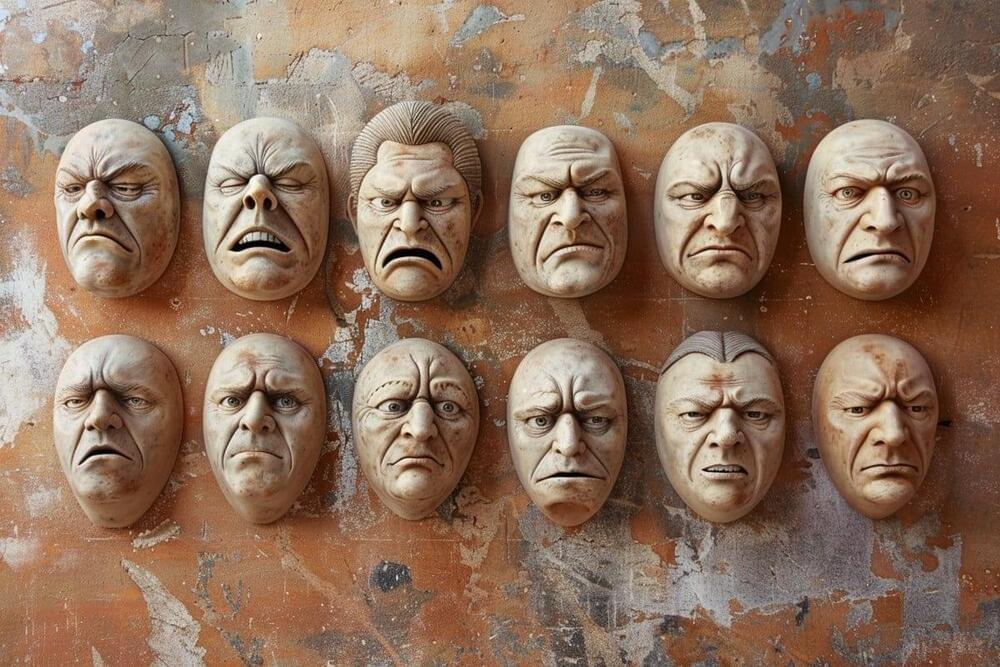

Summary: Researchers unveiled a pioneering technology capable of real-time human emotion recognition, promising transformative applications in wearable devices and digital services.

The system, known as the personalized skin-integrated facial interface (PSiFI), combines verbal and non-verbal cues through a self-powered, stretchable sensor, efficiently processing data for wireless communication.

This breakthrough, supported by machine learning, accurately identifies emotions even under mask-wearing conditions and has been applied in a VR “digital concierge” scenario, showcasing its potential to personalize user experiences in smart environments. The development is a significant stride towards enhancing human-machine interactions by integrating complex emotional data.

Soft robots inspired by animals can help to tackle real-world problems in efficient and innovative ways. Roboticists have been working to continuously broaden and improve these robots’ capabilities, as this could open new avenues for the automation of tasks in various settings.

Researchers at Nagoya University and Tokyo Institute of Technology recently introduced a soft robot inspired by inchworms that can carry loads of more than 100 g at a speed of approximately 9 mm per second. This robot, introduced in Biomimetic Intelligence and Robotics, could be used to transport objects and place them in precise locations.

“Previous research in the field provided foundational insights but also highlighted limitations, such as the slow transportation speeds and low load capacities of inchworm-inspired robots,” Yanhong Peng told Tech Xplore. “For example, existing models demonstrated capabilities for transporting objects at speeds significantly lower than the 8.54 mm/s achieved in this study, with limited ability to handle loads above 40 grams.”

The landscape of artificial intelligence (AI) applications has traditionally been dominated by the use of resource-intensive servers centralized in industrialized nations. However, recent years have witnessed the emergence of small, energy-efficient devices for AI applications, a concept known as tiny machine learning (TinyML).

We’re most familiar with consumer-facing applications such as Siri, Alexa, and Google Assistant, but the low cost and small size of TinyML devices allow them to be deployed in the field. For example, the technology has been used to detect mosquito wingbeats and so help prevent the spread of malaria. It’s also been part of the development of low-power animal collars to support conservation efforts.

Small size, big impact

Distinguished by their small size and low cost, TinyML devices operate within constraints reminiscent of the dawn of the personal-computer era: memory is measured in kilobytes and hardware can be had for as little as US$1. This is possible because TinyML doesn’t require a laptop computer or even a mobile phone. Instead, it can run on simple microcontrollers that power standard electronic components worldwide. In fact, given that there are already 250 billion microcontrollers deployed globally, devices that support TinyML are already available at scale.

Gemini 1.5 Pro includes a breakthrough in long-context understanding, handling up to 1 million tokens. It can also decipher the content of videos and describe what is happening in a scene.

In the world of large language models (LLMs) like ChatGPT, so-called “tokens” are the fundamental units of text that these models process, akin to words, punctuation, or parts of words in human language.
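To make the idea of tokens concrete, here is a minimal Python sketch that splits text into words, word fragments, and punctuation marks. It is only a toy illustration: the function name `toy_tokenize` is hypothetical, and production LLMs generally use learned subword vocabularies rather than a simple pattern like this one, so their token counts will differ.

```python
import re


def toy_tokenize(text):
    """Split text into runs of word characters and single punctuation marks.

    Illustrative assumption only: real LLM tokenizers learn their
    vocabularies from data and do not split text this way.
    """
    # \w+ matches a run of letters, digits, or underscores;
    # [^\w\s] matches one character that is neither a word character
    # nor whitespace, i.e. a punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = toy_tokenize("Tokens aren't always words!")
print(tokens)       # the list of tokens
print(len(tokens))  # how many tokens the sentence uses
```

Note that the contraction "aren't" is broken into several pieces, which mirrors how a single word can cost more than one token. A context window of 1 million tokens is therefore a limit on pieces like these, not on words.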