Why is Adam the most popular optimizer in Deep Learning? Let’s understand it by diving into its math and recreating the algorithm.

Guardian angels.

Film by Wesley Barry. Genre: Science Fiction. Running time: 1h24. With Dudley Manlove, George Milan, Don Doolittle. Following a catastrophic nuclear war, humanity has created a race of blue-skinned androids to help rebuild civilization. Soon the robots become more intelligent and more human. To halt their evolution and preserve its own rules, a group of fanatics called “The Order of Flesh and Blood” is formed. Should the robots really be considered the enemy of Man, or are they his last hope?

TikTok built its empire off popular music — but now it’s losing access to a ton of it.

In a statement, Universal Music Group said it has chosen to “call time out on TikTok” to pressure the social network for better rules on artificial intelligence, online safety, and artist compensation for its roster, which includes such luminaries as Taylor Swift, Drake, and BTS.

“Today, as an indication of how little TikTok compensates artists and songwriters, despite its massive and growing user base, rapidly rising advertising revenue and increasing reliance on music-based content, TikTok accounts for only about 1 percent of our total revenue,” the statement reads.

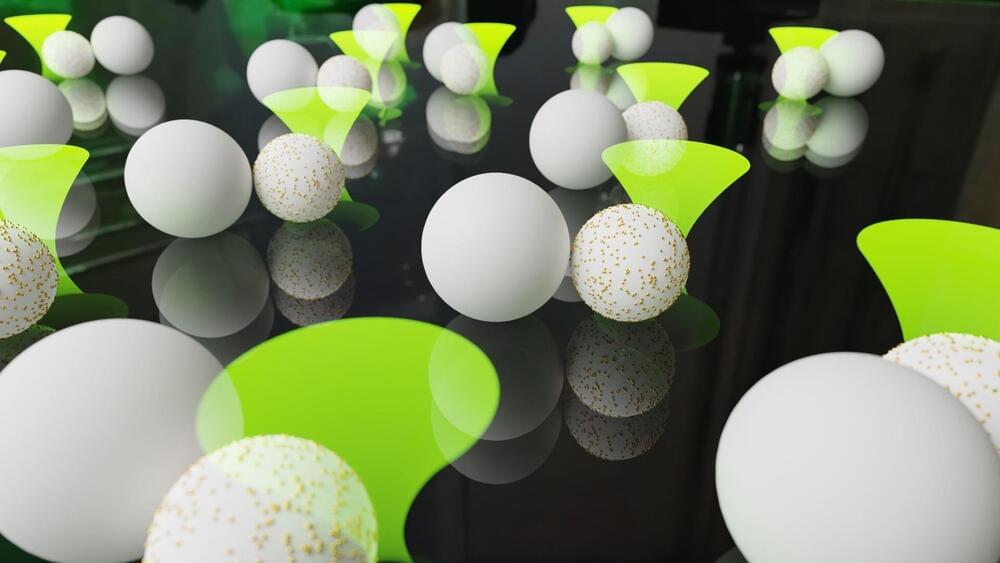

Artificial intelligence using neural networks performs calculations digitally with the help of microelectronic chips. Physicists at Leipzig University have now created a type of neural network that works not with electricity but with so-called active colloidal particles. In their publication in Nature Communications, the researchers describe how these microparticles can be used as a physical system for artificial intelligence and the prediction of time series.

“Our neural network belongs to the field of physical reservoir computing, which uses the dynamics of physical processes, such as water surfaces, bacteria or octopus tentacle models, to make calculations,” says Professor Frank Cichos, whose research group developed the network with the support of ScaDS.AI.

“In our realization, we use synthetic self-propelled particles that are only a few micrometers in size,” explains Cichos. “We show that these can be used for calculations and at the same time present a method that suppresses the influence of disruptive effects, such as noise, in the movement of the colloidal particles.” Colloidal particles are particles that are finely dispersed in their dispersion medium (solid, gas or liquid).

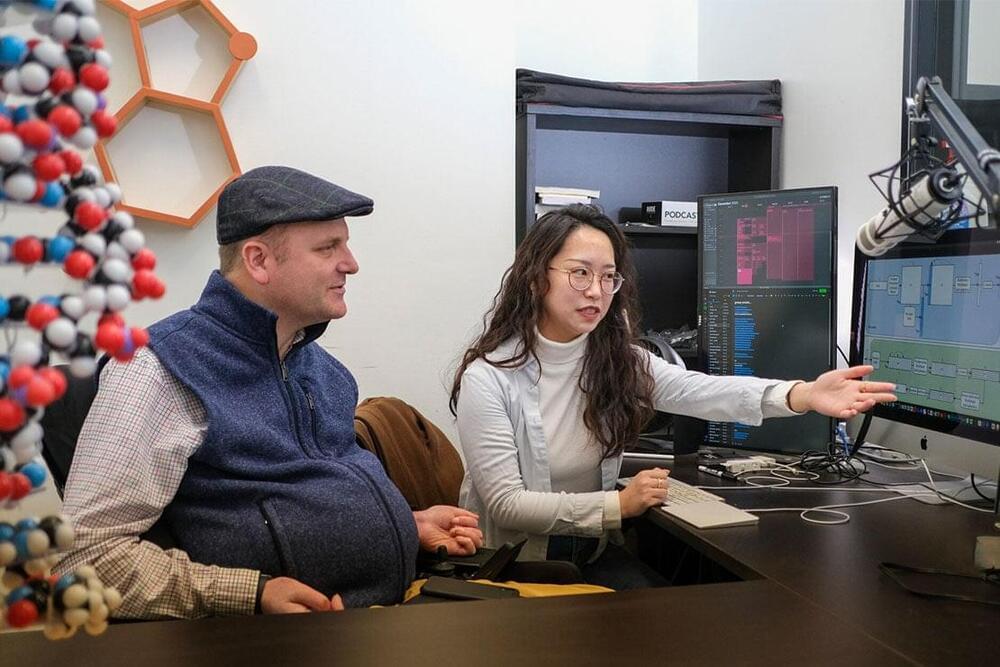

University of Toronto Engineering researchers’ AI model designs proteins to deliver gene therapy

Researchers at the University of Toronto used an artificial intelligence framework to redesign a crucial protein involved in the delivery of gene therapy.

The study, published in Nature Machine Intelligence, describes new work optimizing proteins to mitigate immune responses, thereby improving the efficacy of gene therapy and reducing side effects.

“Gene therapy holds immense promise, but the body’s pre-existing immune response to viral vectors greatly hampers its success. Our research zeroes in on hexons, a fundamental protein in adenovirus vectors, which – but for the immune problem – hold huge potential for gene therapy,” says Michael Garton, an assistant professor at the Institute of Biomedical Engineering in the Faculty of Applied Science & Engineering.

Tesla detailed its capital expenditure plans for 2024 and beyond in a 10-K it released this morning.

Tesla has routinely revealed an increase in planned capital expenditures over the past few years, and 2024 seems to be no different. In the 10-K released this morning, Tesla says it expects to spend at least $10 billion this year and between $8 billion and $10 billion in 2025 and 2026.

Tesla said that its near-term capex plans are difficult to predict given “the number and breadth of our core projects at any given time,” and that they “may further be impacted by uncertainties in future global market conditions.”

Global decision-makers and the world’s leading financial body predict that artificial intelligence will result in dramatic job losses in 2024 and beyond.

During the annual meeting of the World Economic Forum in Davos, Switzerland, a survey of CEOs revealed that a quarter intend to cut their headcounts by at least five percent “due to generative AI,” per a press release from PwC, the firm that conducted it.

Translation: 25 percent of CEOs are aiming to replace human workers with AI because they think it’ll be cheaper. Vive la future!

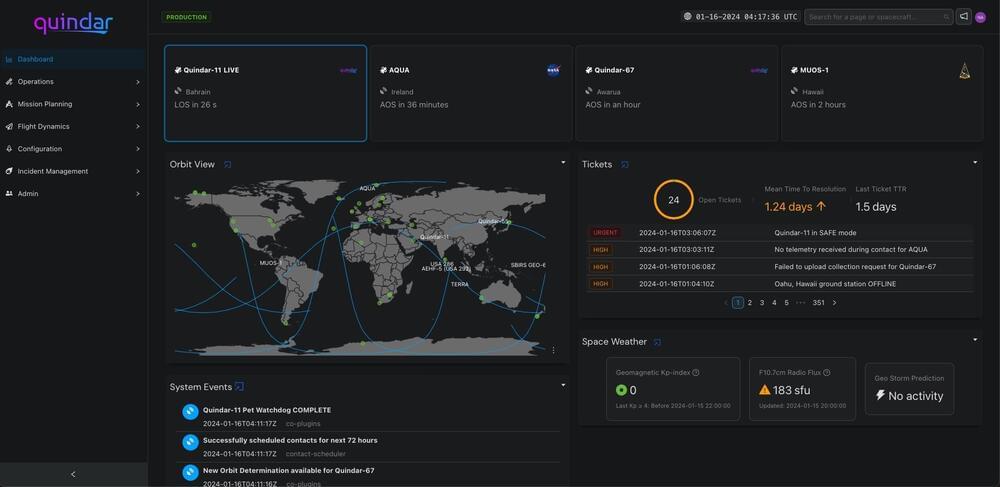

WASHINGTON — Quindar has raised an additional $6 million to further development of software to automate operations of satellite constellations.

The company announced Jan. 30 that it closed $6 million in funding as an extension to a $2.5 million seed round it announced a year ago. Venture capital firm Fuse led the round with participation from existing investors Y Combinator and Founders Fund.

Quindar has developed software designed to automate satellite operations. The company says it has validated that system with an unnamed customer who is using it to manage a growing fleet of spacecraft.

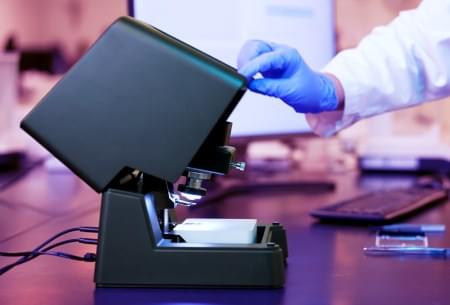

ICSPI, a leader in benchtop nanoscale imaging instruments, has announced the launch of its new Redux AFM, an automated atomic force microscope (AFM) designed to allow scientists and engineers to effortlessly collect 3-dimensional data at the nanoscale.

ICSPI’s mission is to expand access to nanoscale measurement with powerful, automated and intuitive imaging tools. Building on the success of its nGauge AFM, of which hundreds of units are in operation in over 30 countries, ICSPI is excited to introduce the Redux AFM and elevate the user experience of nanoscale imaging with automation.

Traditional AFM instruments, while powerful for nanoscale surface imaging, are often hindered by complex and time-consuming setup processes which are rooted in technology developed in the 1980s. Recognizing this challenge, ICSPI revolutionized the landscape with its unique AFM-on-a-chip technology. The Redux AFM, harnessing this breakthrough technology, makes nanoscale imaging effortless. By integrating multiple components onto a single chip, the Redux eliminates the cumbersome aspects of traditional AFM, such as silicon probe exchange, cantilever alignment, tip crashes, tip-sample approach, and controller tuning.