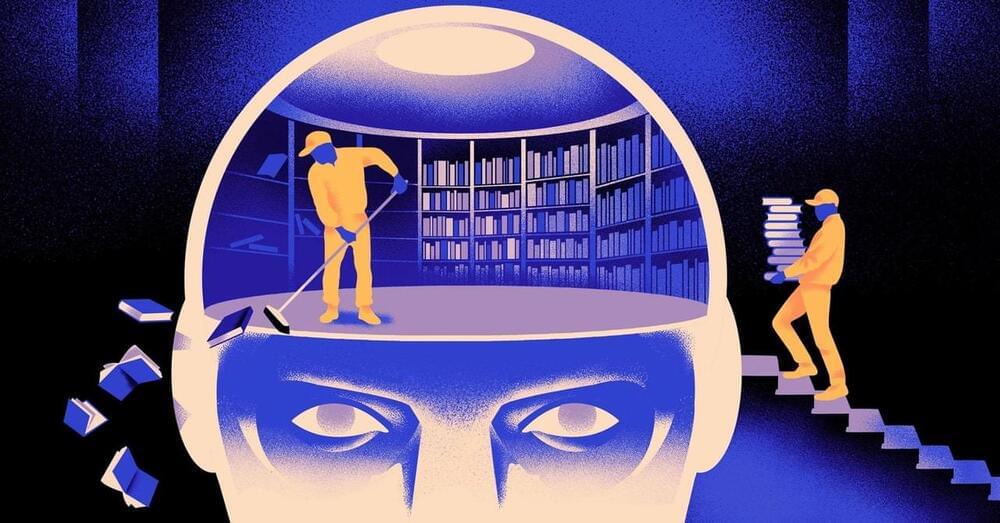

Meta’s latest brainchild, Llama 3, is rewriting the rules of digital communication. This powerhouse AI model doesn’t just compete with OpenAI’s GPT-4 and Google’s Gemini; it aims to surpass them. Brace yourself for enhanced responsiveness, multimodal magic, and a dash of ethical finesse.

Under Zuckerberg’s visionary helm, Llama 3 dances with context, reads between the lines, and delivers nuanced interactions across platforms. It’s not just about AI; it’s about redefining how we connect. 🚀🤖

https://www.reuters.com/technology/me…

https://www.theverge.com/2024/2/28/24…

#artificialintelligence #ai #meta #llama #artificialgeneralintelligence