By pulling together several different generative models into an easy-to-use package controlled with the push of a button, Lore Machine heralds the arrival of one-click AI.

Generative AI is quickly transforming almost every facet of life, including the evolving landscape of data management and cybersecurity. Cohesity, a company focused on AI-powered data management and security, launched Cohesity Gaia to apply generative AI to the way customers access, analyze, and interact with their data.

Cohesity Gaia is a generative AI-powered conversational search assistant. Cohesity blends Large Language Models with an enterprise’s own data and provides organizations with a tool to interact with and extract value from their information repositories. The platform is designed to enable natural language interactions, making it easier for users to query their data without needing to navigate complex databases or understand specialized query languages.

At its heart, Cohesity Gaia leverages generative AI to facilitate conversational interactions with data. Instead of searching through files or databases in the traditional manner, users can engage in a dialogue with the data, asking questions and receiving contextually relevant, accurate answers.
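The article does not describe how Cohesity Gaia works internally. As a rough illustration of the general idea of pairing a language model with an organization's own documents, the sketch below retrieves the most relevant document for a question and builds a prompt around it. Everything here is an assumption for illustration: the function names, the sample documents, and the bag-of-words scoring are hypothetical and are not Cohesity's API or method. A real system would likely use learned embeddings and then pass the prompt to a language model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    common = set(a) & set(b)
    dot = sum(a[t] * b[t] for t in common)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=1):
    """Return the names of the top_k documents that share words with the question."""
    q = Counter(tokenize(question))
    scored = [
        (cosine(q, Counter(tokenize(text))), name)
        for name, text in documents.items()
    ]
    scored.sort(reverse=True)
    return [name for score, name in scored[:top_k] if score > 0]


def build_prompt(question, documents, top_k=1):
    """Assemble a prompt that grounds the question in the retrieved documents."""
    names = retrieve(question, documents, top_k)
    context = "\n".join(f"[{n}] {documents[n]}" for n in names)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical sample data standing in for an enterprise repository.
DOCUMENTS = {
    "backup_policy.txt": "Backups of the finance database run nightly and are retained for 90 days.",
    "onboarding.txt": "New employees receive a laptop and a security briefing on day one.",
}

if __name__ == "__main__":
    print(build_prompt("How long are finance backups retained?", DOCUMENTS))
```

The retrieval step is what lets a user ask a plain-language question instead of writing a database query: the question is matched against the stored documents, and only the matching text is handed to the model as context.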

Multimodal AI for better prevention and treatment of cardiometabolic diseases.

The rise of artificial intelligence (AI) has revolutionized various scientific fields, particularly in medicine, where it has enabled the modeling of complex relationships from massive datasets. Initially, AI algorithms focused on improved interpretation of diagnostic studies such as chest X-rays and electrocardiograms in addition to predicting patient outcomes and future disease onset. However, AI has evolved with the introduction of transformer models, allowing analysis of the diverse, multimodal data sources existing in medicine today.

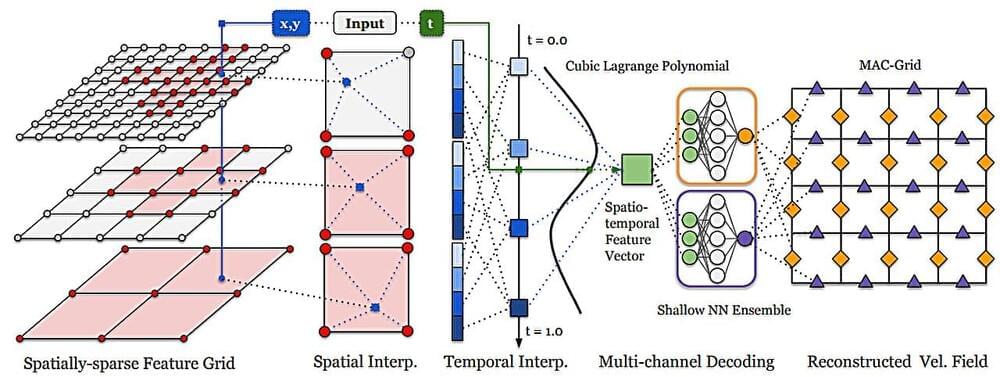

Computer graphic simulations can represent natural phenomena such as tornados, underwater vortices, and liquid foams more accurately thanks to an advancement in creating artificial intelligence (AI) neural networks.

Working with a multi-institutional team of researchers, Georgia Tech Assistant Professor Bo Zhu combined computer graphic simulations with machine learning models to create enhanced simulations of known phenomena. The new benchmark could lead to researchers constructing representations of other phenomena that have yet to be simulated.

Zhu co-authored the paper “Fluid Simulation on Neural Flow Maps.” The Association for Computing Machinery’s Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH) gave it a best paper award in December at the SIGGRAPH Asia conference in Sydney, Australia.

Most humanoid robots pick things up with their hands – but that’s not how we humans do it, particularly when we’re carrying something bulky. We use our chests, hips and arms as well – and that’s the idea behind Toyota’s new soft robot.

Punyo, as it’s called, is a torso-up humanoid research platform. First and foremost, it’s adorably Japanese, with a cute, approachable-looking face and a cuddly, husky look reminiscent of the Baymax robot from Disney’s Big Hero 6. Adding to the cuddle factor, it appears to be wearing a big, cosy-looking sweater.

And indeed, this “sweater” is highly hug-focused. It’s made using grippy materials that provide a squishy, compliant layer over Punyo’s hard metal skeleton, and the fabric is loaded with tactile sensors that allow it to feel exactly what it’s hugging, be it a person or an item that it’s carrying.

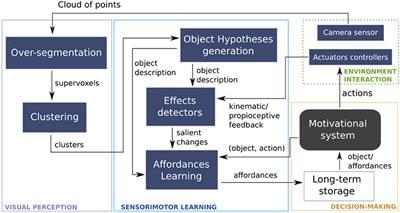

Despite major progress in Robotics and AI, robots are still basically “zombies” repeatedly achieving actions and tasks without understanding what they are doing. Deep-Learning AI programs classify tremendous amounts of data without grasping the meaning of their inputs or outputs. We still lack a genuine theory of the underlying principles and methods that would enable robots to understand their environment, to be cognizant of what they do, to take appropriate and timely initiatives, to learn from their own experience and to show that they know that they have learned and how. The rationale of this paper is that the understanding of its environment by an agent (the agent itself and its effects on the environment included) requires its self-awareness, which actually is itself emerging as a result of this understanding and the distinction that the agent is capable of making between its own mind-body and its environment. The paper develops along five issues: agent perception and interaction with the environment; learning actions; agent interaction with other agents–specifically humans; decision-making; and the cognitive architecture integrating these capacities.

We are interested here in robotic agents, i.e., physical machines with perceptual, computational and action capabilities. We believe we still lack a genuine theory of the underlying principles and methods that would explain how we can design robots that can understand their environment and not just build representations lacking meaning, to be cognizant about what they do and about the purpose of their actions, to take timely initiatives beyond goals set by human programmers or users, and to learn from their own experience, knowing what they have learned and how they did so.

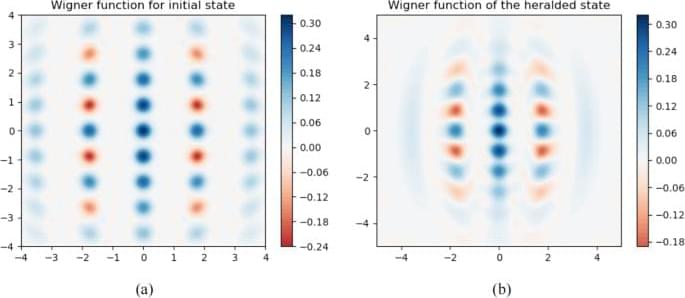

Wang, H., Xue, Y., Qu, Y. et al. Multidimensional Bose quantum error correction based on neural network decoder. npj Quantum Inf 8, 134 (2022). https://doi.org/10.1038/s41534-022-00650-z.