MIT’s Daniela Rus isn’t worried that robots will take over the world. Instead, she envisions robots and humans teaming up to achieve things that neither could do alone.

We’re still years away from seeing physical quantum computers break into the market with any scale and reliability, but don’t give up on deep tech just yet. The market for high-level quantum computer science — which applies quantum principles to manage complex computations in areas like finance and artificial intelligence — appears to be quickening its pace.

In the latest development, a startup out of San Sebastian, Spain, called Multiverse Computing has raised €25 million (or $27 million) in an equity funding round led by Columbus Venture Partners. The round values the startup at €100 million ($108 million), and the money will go to two main areas. The startup plans to keep building out its existing business working with startups in verticals like manufacturing and finance, and it also plans new efforts to work more closely with AI companies that build and operate large language models.

In both cases, the pitch is the same, said CEO Enrique Lizaso Olmos: “optimization.”

Generative AI is an utterly transformative technology that is already impacting how organizations and individuals work. But what does the future have in store for this incredible technology? Read on for my top predictions.

We now have generative AI tools that can see, hear, speak, read, write, or create. Increasingly, generative AIs will be able to do many of these things at once, such as creating text and images together. For example, DALL-E 3, the third iteration of OpenAI's text-to-image tool, is reportedly able to generate high-quality text embedded in its images, putting it ahead of rival image generators. Then, in 2023, OpenAI announced that ChatGPT could see, hear, and speak as well as write.

So one of my predictions is that generative AI will keep moving towards multimodal systems that can create in multiple ways, and in real time, much like the human brain.

Generative AI is quickly transforming the way we do things in almost every facet of life, including the evolving landscape of data management and cybersecurity. Cohesity, a company focused on AI-powered data management and security, launched Cohesity Gaia, which applies generative AI to help customers access, analyze, and interact with their data.

Cohesity Gaia is a generative AI-powered conversational search assistant. It blends large language models with an enterprise’s own data, giving organizations a tool to interact with and extract value from their information repositories. The platform is designed to enable natural language interactions, so users can query their data without navigating complex databases or learning specialized query languages.

At its heart, Cohesity Gaia leverages generative AI to facilitate conversational interactions with data. Instead of searching through files or databases in the traditional manner, users can engage in a dialogue with the data, asking questions and receiving contextually relevant, accurate answers.

Multimodal AI for better prevention and treatment of cardiometabolic diseases.

The rise of artificial intelligence (AI) has revolutionized various scientific fields, particularly medicine, where it has enabled the modeling of complex relationships from massive datasets. Initially, AI algorithms focused on improving the interpretation of diagnostic studies, such as chest X-rays and electrocardiograms, and on predicting patient outcomes and future disease onset. More recently, the introduction of transformer models has allowed AI to analyze the diverse, multimodal data sources now available in medicine.

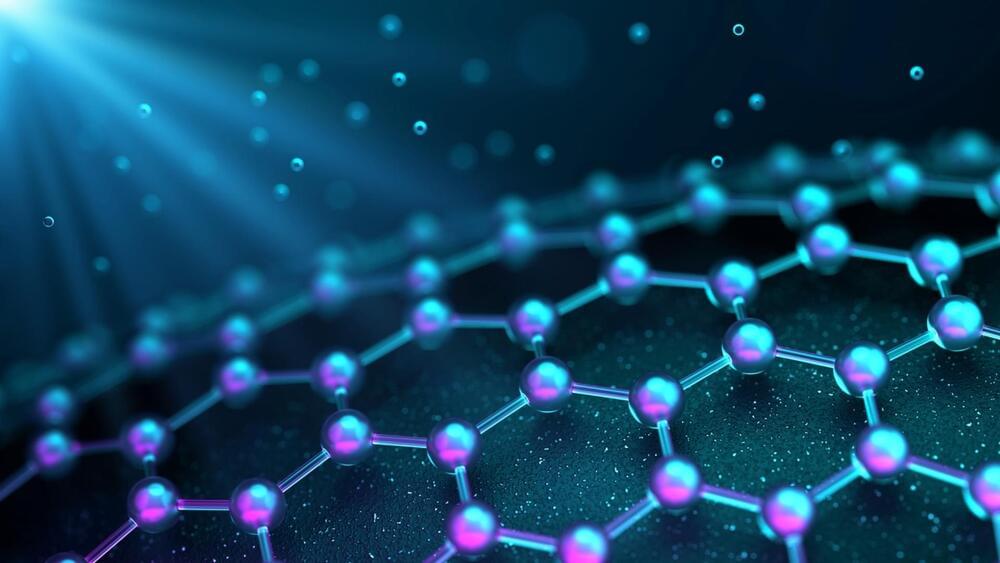

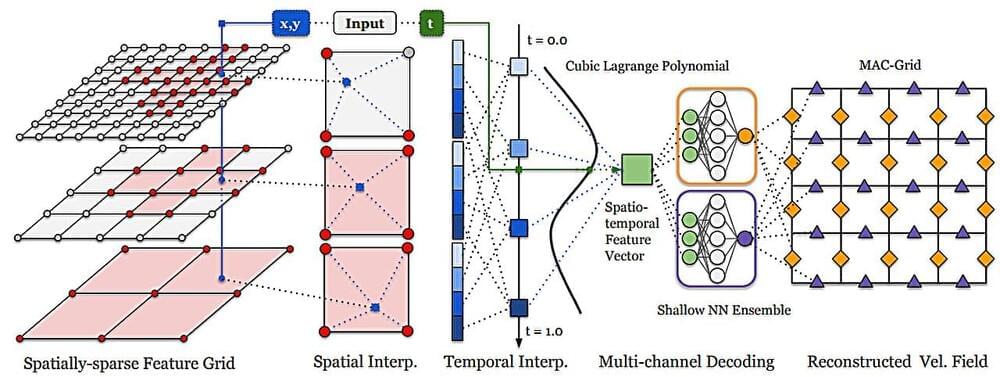

Computer graphic simulations can represent natural phenomena such as tornadoes, underwater vortices, and liquid foams more accurately thanks to an advance in building artificial intelligence (AI) neural networks.

Working with a multi-institutional team of researchers, Georgia Tech Assistant Professor Bo Zhu combined computer graphic simulations with machine learning models to create enhanced simulations of known phenomena. The work sets a new benchmark and could help researchers construct representations of other phenomena that have yet to be simulated.

Zhu co-authored the paper “Fluid Simulation on Neural Flow Maps.” The Association for Computing Machinery’s Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH) gave it a best paper award in December at the SIGGRAPH Asia conference in Sydney, Australia.