But can it graduate from the lab to the warehouse floor?

The new nonprofit Fairly Trained certifies that artificial intelligence models license the copyrighted data they are trained on, which often isn’t the case.

By Ben Guarino

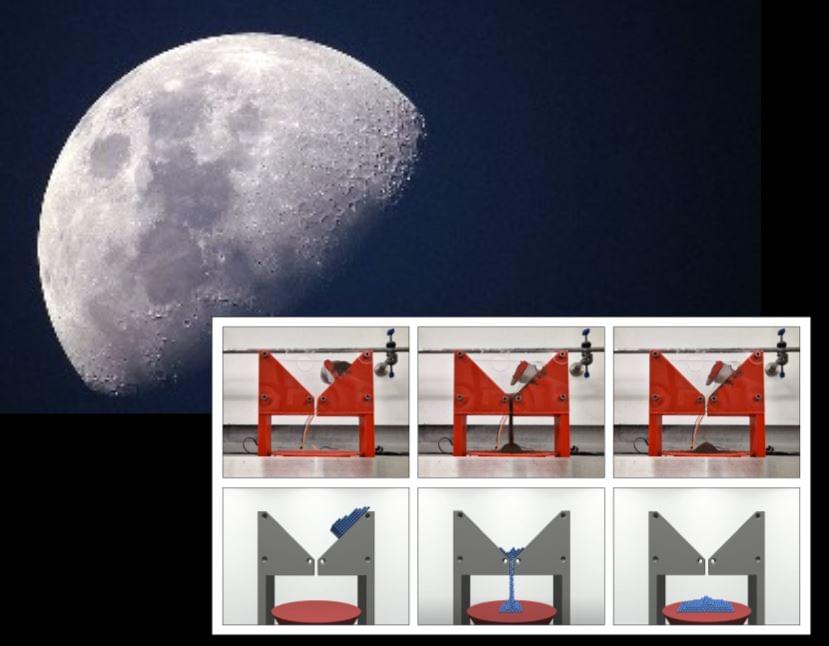

A new computer model mimics Moon dust so well that it could lead to smoother and safer teleoperation of lunar robots. The tool, developed by University of Bristol researchers based at the Bristol Robotics Laboratory, could be used to train astronauts ahead of lunar missions. Working with their industry partner Thales Alenia Space in the UK, which has a specific interest in building working robotic systems for space applications, the team investigated a virtual version of regolith, another name for Moon dust.

Lunar regolith is of particular interest for the lunar exploration missions planned over the next decade. From it, scientists could potentially extract valuable resources such as oxygen, rocket fuel, or construction materials to support a long-term presence on the Moon. To collect regolith, remotely operated robots are a practical choice because they carry lower risks and costs than human spaceflight.

However, operating robots across the large distance between Earth and the Moon introduces significant delays into the system, which make the robots harder to control. Now that the team knows the simulation behaves similarly to reality, they can use it to mirror the operation of a robot on the Moon. This approach lets operators control the robot without delays, giving them a smoother and more efficient experience.
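The paragraph above does not put a number on the delay. The sketch below estimates it under stated assumptions. It uses the mean Earth-Moon distance of about 384,400 km and counts only light travel time. Real links add relay, ground-station, and processing latency on top of that. The names `light_delay_s`, `EARTH_MOON_KM`, and `SPEED_OF_LIGHT_KM_S` are illustrative and do not come from the Bristol work.

```python
# Assumption: mean Earth-Moon distance in km. The actual distance varies
# from roughly 363,000 km (perigee) to roughly 405,000 km (apogee).
EARTH_MOON_KM = 384_400
# Speed of light in vacuum, km/s (exact by definition of the metre).
SPEED_OF_LIGHT_KM_S = 299_792.458


def light_delay_s(distance_km: float, round_trip: bool = True) -> float:
    """Minimum signal delay in seconds from light travel time alone.

    Ignores relay, ground-station, and processing latency, so the real
    delay an operator experiences is longer than this floor.
    """
    one_way = distance_km / SPEED_OF_LIGHT_KM_S
    # A command goes out and its visible result comes back: two legs.
    return 2 * one_way if round_trip else one_way


if __name__ == "__main__":
    print(f"one-way:    {light_delay_s(EARTH_MOON_KM, round_trip=False):.2f} s")
    print(f"round-trip: {light_delay_s(EARTH_MOON_KM):.2f} s")
```

Running it prints a one-way delay of 1.28 s and a round trip of 2.56 s. Even before any network overhead, every command's visible result would therefore lag by roughly two and a half seconds. That lag is what a locally responding simulation is meant to hide from the operator.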

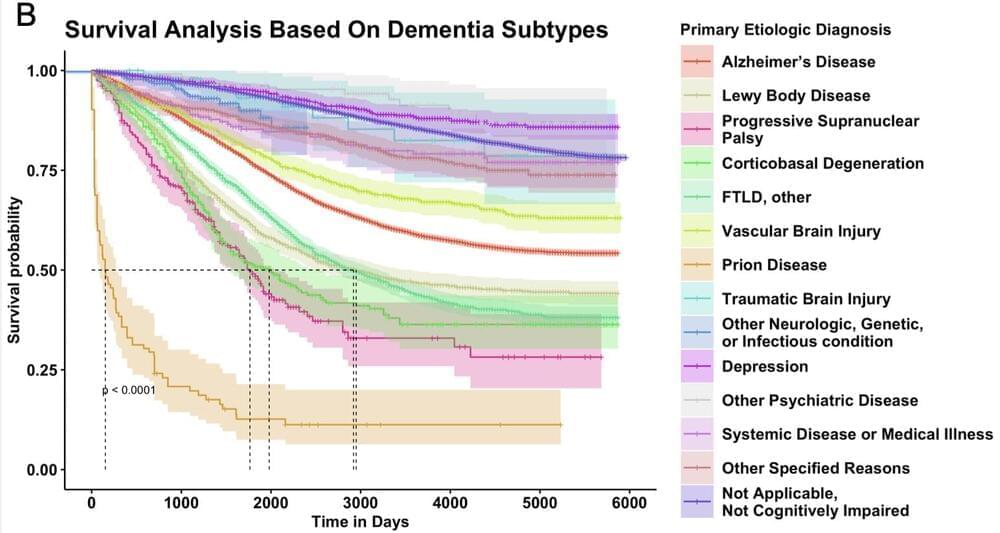

Researchers at the Icahn School of Medicine at Mount Sinai and collaborating institutions have used machine learning to identify key predictors of mortality in patients with dementia.

The study, published in the February 28 online issue of Communications Medicine, addresses critical challenges in dementia care by pinpointing patients at high risk of near-term death and uncovering the factors that drive this risk.

Unlike previous studies that focused on diagnosing dementia, this research focuses on predicting patient prognosis, shedding light on mortality risks and their contributing factors across several types of dementia.

Nvidia CEO Jensen Huang says free ‘isn’t cheap enough’ for rival chips to stand a chance against the green team in data center AI.

An exploration of Frank Herbert’s implicit and explicit warnings against the unchecked advancement of, and dependence on, artificial intelligence (AI), and of how these fundamental concerns, which lead to AI’s prohibition, resonate throughout the series. One of the less explored, but equally compelling, elements of Herbert’s Dune is its commentary on the rise of artificial intelligence. Dune is set in the far future, in an interstellar empire devoid of thinking machines following a universal ban on computing technology made in the likeness of a human mind. The reasons behind this prohibition not only caution against the perils of artificial intelligence but also underscore broader warnings that run throughout Herbert’s Dune books.
