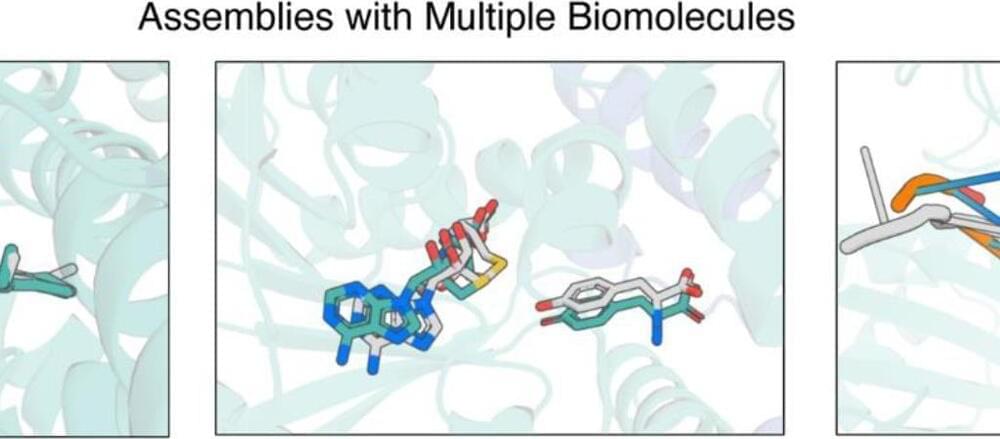

RoseTTAFold extended to predict structures of proteins bound to small molecules.

“That let us faithfully model peripheral vision the same way it is being done in human vision research,” says Harrington.

The researchers used this modified technique to generate a huge dataset of transformed images that appear more textural in certain areas, to represent the loss of detail that occurs when a human looks further into the periphery.

Whipping Up Worlds

Because the AI can learn from unlabeled online videos and is still a modest size (just 11 billion parameters), there’s ample opportunity to scale up. Bigger models trained on more information tend to improve dramatically. And with a growing industry focused on inference, the process by which a trained AI performs tasks such as generating images or text, it’s likely to get faster.

DeepMind says Genie could help people, like professional developers, make video games. But like OpenAI—which believes Sora is about more than videos—the team is thinking bigger. The approach could go well beyond video games.

Saudi Arabia’s first male robot ‘Muhammad’ allegedly touched a woman inappropriately, sparking outrage on social media platforms, with one user calling it a “pervert”. Muhammad was recently unveiled during the second edition of DeepFest in Riyadh.

Video of the incident went viral on social media forums. It shows the robot stretching its right hand toward a female reporter when she was giving a piece to the camera.

Some of the users have alleged that the movements of the robot looked intentional when the female reporter, identified as Rawya Kassem, was talking about it.

Radical Plan to Stop ‘Doomsday Glacier’ Melting to Cost $50 Billion.

Working on these next-gen intelligent AIs must be a freaky experience. As Anthropic announces the smartest model ever tested across a range of benchmarks, researchers recall a chilling moment when Claude 3 realized that it was being evaluated.

Anthropic, you may recall, was founded in 2021 by a group of senior OpenAI team members, who broke away because they didn’t agree with OpenAI’s decision to work closely with Microsoft. The company’s Claude and Claude 2 AIs have been competitive with GPT models, but neither Anthropic nor Claude has really broken through into public awareness.

That could well change with Claude 3, since Anthropic now claims to have surpassed GPT-4 and Google’s Gemini 1.0 model on a range of multimodal tests, setting new industry benchmarks “across a wide range of cognitive tasks.”

❤️ Check out Weights & Biases and sign up for a free demo here: https://wandb.me/papers

📝 Claude 3 is available here: try it out for free (note that we are…

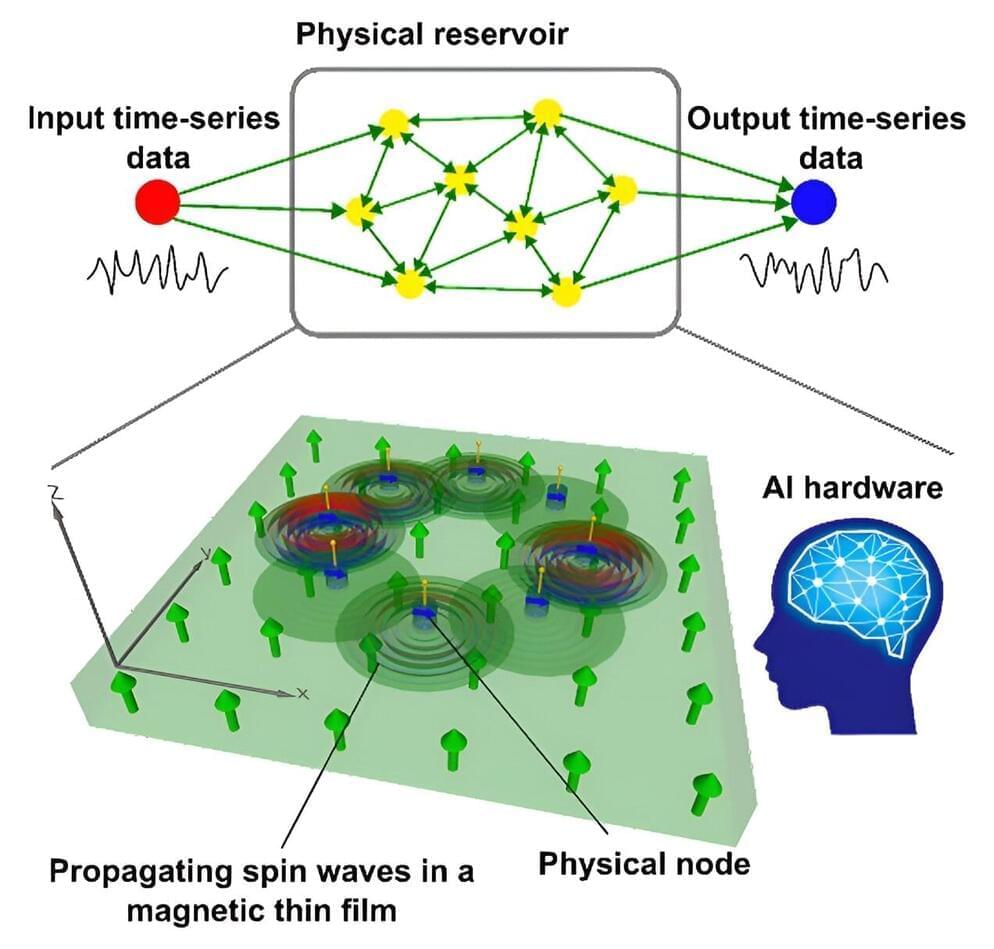

A group of Tohoku University researchers has developed a theoretical model for high-performance spin wave reservoir computing (RC) that uses spintronics technology. The breakthrough moves scientists closer to realizing energy-efficient, nanoscale computing with unparalleled computational power.

Details of their findings were published in npj Spintronics on March 1, 2024.

The brain is the ultimate computer, and scientists are constantly striving to create neuromorphic devices that mimic the brain’s processing capabilities, low power consumption, and its ability to adapt to neural networks. The development of neuromorphic computing is revolutionary, allowing scientists to explore nanoscale devices that run at GHz speeds with low energy consumption.