
Tesla’s aggressive push towards autonomy and the development of self-driving technology has the potential to drastically change the automotive industry and disrupt the competition.

Questions to inspire discussion.

What is Tesla’s shift in strategy?

Tesla is shifting its strategy toward autonomy and self-driving technology. The move may look like a reaction to current events, but it is also part of the company's long-term plan.

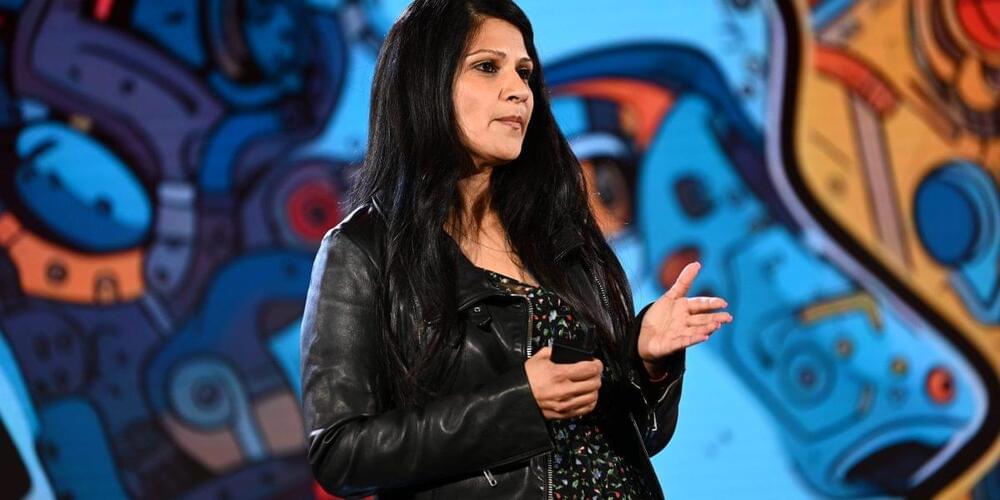

Right now generative AI has an “insatiable demand” for electricity to power the tens of thousands of compute clusters needed to operate large language models like OpenAI’s GPT-4, warned Ami Badani, chief marketing officer of chip design firm Arm Holdings.

If generative AI is ever going to run on every device, from laptops and tablets to smartphones, it will have to scale without overwhelming the electricity grid.

“We won’t be able to continue the advancements of AI without addressing power,” Badani told the Fortune Brainstorm AI conference in London on Monday. “ChatGPT requires 15 times more energy than a traditional web search.”

