ShortGPT

Layers in large language models are more redundant than you expect.

As AI is increasingly integrated into our lives, scientists building the tech are still trying to understand it, the MIT Tech Review reports.

The new hardware reimagines AI chips for modern workloads and can run powerful AI systems using much less energy than today’s most advanced semiconductors, according to Naveen Verma, professor of electrical and computer engineering. Verma, who will lead the project, said the advances break through key barriers that have stymied chips for AI, including size, efficiency and scalability.

Chips that require less energy can be deployed to run AI in more dynamic environments, from laptops and phones to hospitals and highways to low-Earth orbit and beyond. The kinds of chips that power today’s most advanced models are too bulky and inefficient to run on small devices, and are primarily constrained to server racks and large data centers.

Now, the Defense Advanced Research Projects Agency, or DARPA, has announced it will support Verma’s work, based on a suite of key inventions from his lab, with an $18.6 million grant. The DARPA funding will drive an exploration into how fast, compact and power-efficient the new chip can get.

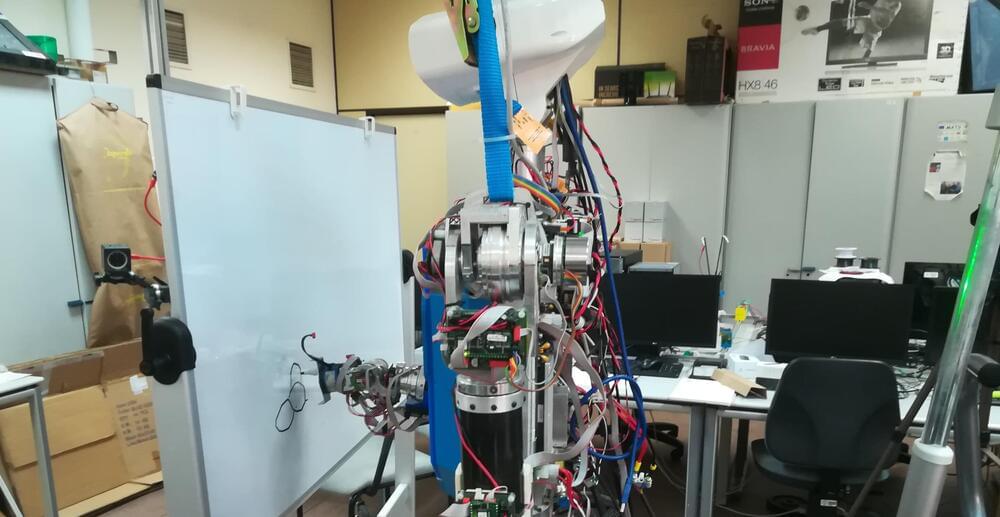

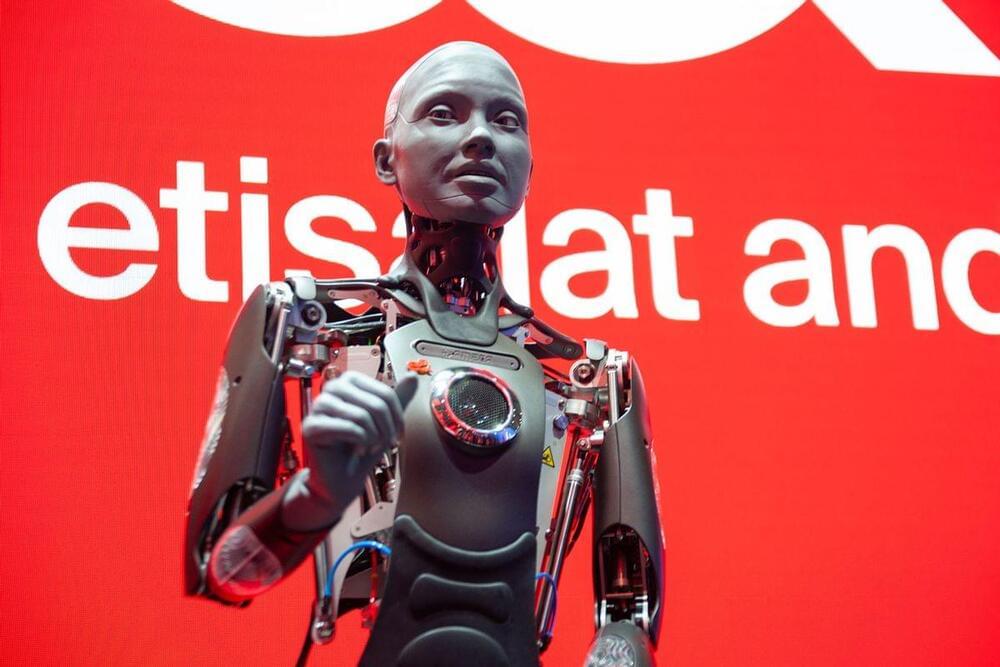

The rapid advancement of deep learning algorithms and generative models has enabled the automated production of increasingly striking AI-generated artistic content. Most of this AI-generated art, however, is created by algorithms and computational models, rather than by physical robots.

Researchers at Universidad Complutense de Madrid (UCM) and Universidad Carlos III de Madrid (UC3M) recently developed a deep learning-based model that allows a humanoid robot to sketch pictures, similarly to how a human artist would. Their paper, published in Cognitive Systems Research, offers a remarkable demonstration of how robots could actively engage in creative processes.

“Our idea was to propose a robot application that could attract the scientific community and the general public,” Raúl Fernandez-Fernandez, co-author of the paper, told Tech Xplore. “We thought about a task that could be shocking to see a robot performing, and that was how the concept of doing art with a humanoid robot came to us.”

AI isn’t nearly as popular with the global populace as its boosters would have you believe.

As Axios reports based on a new poll of 32,000 global respondents from the consultancy firm Edelman, public trust is already eroding less than 18 months into the so-called “AI revolution” that popped off with OpenAI’s release of ChatGPT in November 2022.

“Trust is the currency of the AI era, yet, as it stands, our innovation account is dangerously overdrawn,” Justin Westcott, the global technology chair for the firm, told Axios. “Companies must move beyond the mere mechanics of AI to address its true cost and value — the ‘why’ and ‘for whom.’”

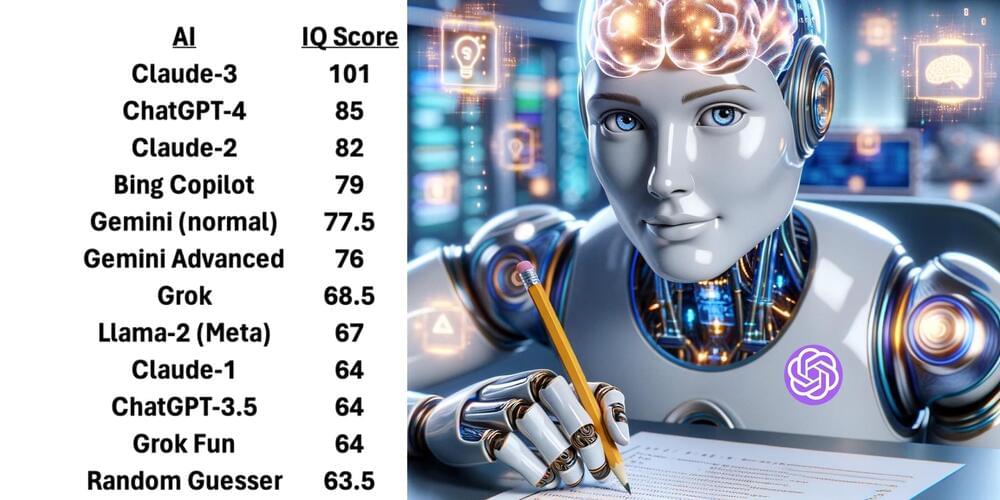

When will AI match and surpass human capability? In short, when will we have AGI, or artificial general intelligence: the kind of intelligence that can teach itself and grow into a vastly larger intellect than any individual human?

According to Ben Goertzel, CEO of SingularityNet, that time is very close: only 3 to 8 years away. In this TechFirst, I chat with Ben as we approach the Beneficial AGI conference in Panama City, Panama.

We discuss the diverse possibilities of human and post-human existence, from cyborg enhancements to digital mind uploads, and the varying timelines for when we might achieve AGI. We talk about the role of current AI technologies, like LLMs, and how they fit into the path towards AGI, highlighting the importance of combining multiple AI methods to mirror the complexity of human intelligence.

We also explore the societal and ethical implications of AGI development, including job obsolescence, data privacy, and the potential geopolitical ramifications, emphasizing the critical period of transition towards a post-singularity world where AI could significantly improve human life. Finally, we talk about ownership and decentralization of AI, comparing it to the internet’s evolution, and envisage the role of humans in a world where AI surpasses human intelligence.

00:00 Introduction to the Future of AI

01:28 Predicting the Timeline of Artificial General Intelligence

02:06 The Role of LLMs in the Path to AGI

05:23 The Impact of AI on Jobs and Economy

06:43 The Future of AI Development

10:35 The Role of Humans in a World with AGI

35:10 The Diverse Future of Human and Post-Human Minds

36:51 The Challenges of Transitioning to a World with AGI

39:34 Conclusion: The Future of AGI

A massive Microsoft data center in Goodyear, Arizona is guzzling the desert town’s water supply to support its cloud computing and AI efforts, The Atlantic reports.

A source familiar with Microsoft’s Goodyear facility told The Atlantic that it was specifically designed for use by Microsoft and the heavily Microsoft-funded OpenAI. Both companies declined to comment on this allegation.

Powering AI demands an incredible amount of energy. Adding to AI’s massive environmental footprint, it also consumes a mind-boggling amount of water. AI workloads draw so much electricity that data center servers risk overheating, so engineers use water to cool them back down.

and this means humans will use brain-computer interfaces to transact, but where will the AGI economy take shape, and how will you take part?

AI Marketplace: https://taimine.com/

Deep Learning AI Specialization: https://imp.i384100.net/GET-STARTED

AI news timestamps:

0:00 Everyone’s wrong.

1:57 Future of money.

3:04 The economy of AGI

5:35 Brain computer interface transactions.

6:26 Methods of income.


Brooks himself is among the philosophers who have previously said giving AI sensory and motor skills to engage with the world may be the only way to create true artificial intelligence. A good deal of human creativity, after all, comes from physical self-preservation — a caveman need only cut himself once on sharpened bone to see its use in hunting. And what is art if not a hope that our body-informed memories may outlive the body with which we formed them?

If you want to get even more mind-bent, consider thinkers like Lars Ludwig, who proposed that memory isn’t even something we can hold exclusively in our bodies anyway. Rather, to be human always meant sharing consciousness with technology to “extend artificial memory” — from a handprint on a cave wall, to the hard drive in your laptop. Thus, human cognition and memory could be considered to take place not just in the human brain, nor just in human bodily instinct, but also in the physical environment itself.